1. Introduction

The question of whether a property/casualty insurance company possesses sufficient capital to continue operating remains one of the most important problems faced by insurance regulators, rating agencies, and company management. The National Association of Insurance Commissioners (NAIC) relies in part upon risk-based capital standards to ensure that a company’s capital remains adequate to support current and past writings. Using conservative statutory accounting valuations, the risk-based capital requirements give regulators a tool for justifying prompt regulatory action against a troubled company, action that does not require a court order and helps limit the effect of a potential insolvency (Lewis 1998). Risk-based capital (RBC) results may also be used by a rating agency as one measure of an insurance company’s financial strength. Yet the tool has often not worked as envisioned: RBC frequently failed to indicate capital inadequacy until it was too late.

The near-collapse of AIG’s United Guaranty is one of the better-known examples of RBC failure, and we use it as a case study for several reasons. First, mortgage insurance is classified as a property-casualty line and is covered by RBC. Second, despite certain idiosyncrasies, private mortgage insurance (PMI) is very much a property-casualty line of business, with potentially extreme loss ratios and protracted written premium development. Similar features appear in many other lines, such as warranty, title, and workers compensation insurance, so PMI provides the basis for an interesting and insightful case study. Third, the recent housing crisis hit PMI companies hard and therefore offers a relevant test of RBC effectiveness.

In the months leading up to United Guaranty’s financial nosedive, RBC modeling showed redundant capital levels, and regulators and rating agencies did not recognize that the company was in financial trouble until it was too late. According to an article published in the Triad Business Journal on June 16, 2008, AIG’s United Guaranty lost $352 million in the first quarter of 2008 and more than $1 billion over the previous four quarters (Triad Business Journal 2008). Moreover, when United Guaranty reorganized, it chose to reinsure business written between 2005 and 2008 to get it off its books, suggesting that its troubles did not arise overnight but built up over several years, culminating in 2008 (England 2012). Yet on July 9, 2008, Moody’s Investors Service noted, “For UGRIC’s mortgage insurance portfolio overall, capital adequacy on a risk-adjusted basis is consistent with Moody’s double-A metrics, and the company is currently well within regulatory limits” (Moody’s Investors Service 2008). Likewise, the North Carolina Insurance Department did not take regulatory action until United Guaranty’s capital adequacy problems were well known and AIG had all but collapsed. AIG obtained a capital infusion and chose to “save” United Guaranty, but many other PMI companies did not fare as well during the 2008 financial crisis. For example, Triad Guaranty Insurance Corporation also collapsed and placed its business in run-off. If RBC were truly an effective indicator, one would have expected it to signal the possibility of financial trouble sooner.

Most of those studying the topic agree that RBC has several shortcomings as a way of modeling capital adequacy. Moreover, the literature offers no agreement on the best way to model capital on a going-concern basis, which is the basis of most interest to investors, for example.

In this paper, we focus on capital modeling issues. Our purpose is to develop a reasonable capital adequacy model that can be easily implemented using data commonly available to company actuaries. The flexible framework provided can be adapted to meet the needs of rating agencies, company management, and regulators. Our capital adequacy model is also compatible with European Solvency II’s risk-based economic capital framework.

The paper proceeds as follows. In section 2, we preface model development by proposing alternative definitions of pricing risk and interest rate risk that better capture the risks presented by these two aspects of RBC. These risks, together with reserving risk, cannot be treated as independent, so we model them jointly without making assumptions about the correlations between them. Section 3 presents a framework that links to company reserves and can be updated as needed to accommodate both different reserve parameters and new data. Section 4 presents a two-effects (row and column) model, including postulates and two theorems, and section 5 applies the model to two common capital measures, value at risk and conditional value at risk. Section 6 presents a few applications of the model, and section 7 concludes.

2. Capital modeling deficiencies

Of the risks considered by most capital adequacy models, pricing, reserving, and interest rate risk seem most important to most property/casualty insurance companies. We examine definitions of these below.

Pricing risk. This is the risk that actual losses differ from those anticipated in the rates. Contrary to common perception, the risk faced by insurance companies lies not in the underlying losses themselves but in the mismatch between the loss provision in the rates and the actual losses. Traditional capital models fail to capture this risk because they focus on underlying losses and their distributions.[1]

Reserving risk. This is the risk arising from variability in loss reserves. Many models calculate capital for reserving risk on the assumption that a particular method was used to set reserves. In practice, reserves are often hand-selected: actuaries typically combine several methods with judgment (Rehman and Klugman 2010), so a model tied to one method may not describe the reserves actually held. Reserving risk must be captured regardless of how the reserves were selected.

Interest rate risk. Most models calculate the variability of portfolio returns asset by asset and then aggregate the results. Instead, the relevant risk is the mismatch between the investment income offset underlying the rates and the actual interest income earned on loss reserves and on (the loss portion of) unearned premium reserves. Moreover, aggregation usually requires estimating a correlation matrix, which introduces measurement error through the many additional parameters that are hard to estimate.

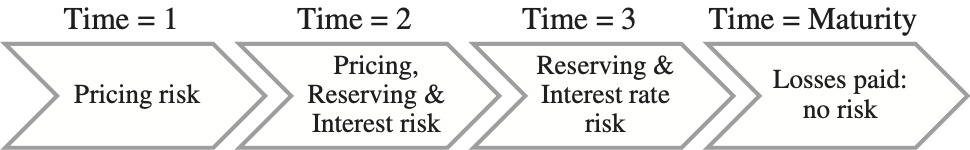

Interdependence. All risks are related through time and are not independent. Many researchers model these three risks separately and then combine them using a correlation matrix. This often requires normality assumptions that are hard to justify in practice. Further, as is the case with interest rate risk, this usually requires measuring a correlation matrix and that introduces measurement errors due to many extra parameters that are hard to estimate. The schematic flow of risks for a 12-month policy year is shown below:

A 12-month policy year spans two accident years, and losses incurred in these two accident years create uncertainty in loss reserves (reserving risk). The unearned premium and loss reserves are invested at all times, which creates interest rate risk. Pricing risk vanishes after 24 months, once all premiums are earned and all losses are incurred. The three risks therefore overlap in time and are correlated. In this paper we model them together, without any explicit assumption about the correlations between them.
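To make the 24-month horizon concrete, the sketch below computes the fraction of a policy year's premium earned by month t. It assumes, purely for illustration, that policies are written uniformly through the policy year and that each policy is earned pro rata over a 12-month term; the function name `earned_fraction` is hypothetical. Under these assumptions, half the premium is earned in the first accident year and the rest in the second, and nothing remains unearned after 24 months.

```python
def earned_fraction(t_months: float) -> float:
    """Fraction of a 12-month policy year's premium earned by month t.

    Assumes policies are written uniformly over the policy year and each
    policy is earned pro rata over its own 12-month term.
    """
    t = min(max(t_months, 0.0), 24.0)  # nothing earned before 0, all after 24
    if t <= 12.0:
        # Average over write dates s in [0, t] of (t - s) / 12.
        return t * t / 288.0
    # Only policies written after month t - 12 still carry unearned premium.
    return 1.0 - (24.0 - t) ** 2 / 288.0


for month in (0, 6, 12, 18, 24):
    print(month, round(earned_fraction(month), 4))
```

Running it prints 0.0, 0.125, 0.5, 0.875, and 1.0 for months 0, 6, 12, 18, and 24; the unearned fraction, 1 − `earned_fraction(t)`, reaches zero at 24 months.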

Discussion. The current literature on capital modeling does not address the concerns we raise above. In addition, there is currently no consensus on the stochastic methods used to determine capital. For a comprehensive review of existing methods, the reader is referred to Goldfarb (2006).

Many papers also deal with a subset of the risks that underlie capital. For example, reserving risk is discussed in many papers (e.g., see Mach 1993), and these models are often the basis of capital calculations. As mentioned earlier, such papers calculate reserving risk for a particular type of reserving model, while actual reserves are often hand-selected from the results of several different methods.

3. Capital framework

In this section, we set forth a framework for estimating required capital. The framework includes a model that links to company reserves and can be updated as needed to accommodate both different reserve parameters and new data. This pragmatic approach fits easily with an actuarial department’s reserving framework.

We consider two versions of the model: an SAP-based[2] model for insurance regulators, and a GAAP-based[3] capital model useful for internal company management and rating agencies. Because the accounting treatment influences model results, we incorporate each treatment explicitly in sections 3.1 and 3.2.

Before proceeding, we note that for solvency purposes, the “required capital” derived under either accounting treatment can be compared with the capital held on the company’s balance sheet.

3.1. Risk capital: SAP accounting

The risk-based capital (RBC) formula explicitly incorporates six main risk charges (see Feldblum 1996).[4] These charges are combined using the square root rule

\[ \mathrm{RBC}=C_{0}+\sqrt{C_{1}^{2}+C_{2}^{2}+C_{3}^{2}+C_{4}^{2}+C_{5}^{2}}. \]

Here, \(C_{k}\) refers to the capital charge due to risk k. We limit our attention to the three risks that are largest for most property casualty insurance companies, namely, interest rate risk, pricing risk, and reserving risk. Our framework calculates the charges for these three risks differently, subject to the following four caveats:

- We ignore the interest rate risk associated with the capital itself; that is, we assume that capital is placed in the safest possible investment. This assumption can be removed and the risk incorporated into the model, but we retain it for simplicity.

- All data used to measure risk charges come from rate filings and annual statements. For example, we use implied link ratios based on held reserves (Schedule P Part 2), not indicated reserves (see Appendix A). These link ratios are used to build data triangles, discussed more completely in section 3.3.

- To build insurance risk triangles, we need actual portfolio returns by calendar year. Under SAP accounting, these equal realized portfolio returns.

- A single charge is calculated in place of the three separate charges and substituted into the RBC formula.
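The square root rule and the fourth caveat can be sketched as follows, assuming the individual charges have already been computed. The names `rbc_square_root` and `rbc_single_charge` are hypothetical, and which list positions hold the charges being replaced is left to the caller, because mapping the three risks onto specific RBC charges is an assumption here rather than something stated above. All numbers are illustrative.

```python
import math
from typing import Container, Sequence


def rbc_square_root(c0: float, charges: Sequence[float]) -> float:
    """Square root rule: C0 is added outside the radical, and the other
    charges are combined as the square root of their sum of squares."""
    return c0 + math.sqrt(sum(c * c for c in charges))


def rbc_single_charge(c0: float, charges: Sequence[float],
                      replaced: Container[int], combined: float) -> float:
    """Hypothetical helper for the fourth caveat: drop the charges at the
    positions in `replaced` and put one jointly modeled charge in their place."""
    kept = [c for k, c in enumerate(charges) if k not in replaced]
    return rbc_square_root(c0, kept + [combined])


# Illustrative charges C1..C5 (not from any filing) and C0 = 10.
charges = [20.0, 15.0, 25.0, 30.0, 40.0]
separate = rbc_square_root(10.0, charges)
# Replacing positions {0, 3, 4} is purely illustrative.
joint = rbc_single_charge(10.0, charges, {0, 3, 4}, 45.0)
```

Because the charges under the radical are combined as a root sum of squares, the result is smaller than their simple sum, reflecting the diversification built into the rule.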

3.2. Economic capital: GAAP accounting

In accordance with “going concern” accounting treatment, the insurance risk triangles rely upon indicated link ratios. Portfolio returns, also employed in building insurance risk triangles, reflect both realized and unrealized gains. We again ignore interest rate risk associated with economic capital itself, just as we did with respect to risk capital above.

Also under GAAP accounting, the company should combine capital charges due to other risks (e.g., credit risks) into calculated capital. In most property casualty insurance companies, such charges are typically a much smaller part of total capital. Hence, we do not discuss them further.

From here on, our discussion applies to both types of capital: one obtains risk capital or economic capital simply by changing the dataset. We therefore use the single term “capital” henceforth. We now present the data structure for non-catastrophic lines; the adjustments needed to reflect catastrophic losses follow in section 3.4.

3.3. Data structure: Non-catastrophic insurance risk triangles

In Table 1, data are available for M (fixed) policy years, i = 2, 3, ..., M, so row entries in the table are obtained by varying i. For any fixed M, however, the quantity \(U_{i, M-i+1}\) provides only the last (diagonal) column entry for row i; the value on the adjacent diagonal is \(U_{i, M-i}\). We do not introduce a general variable for the realized part of the rectangle, because our interest lies in the unknown (missing) cells of policy year i; in the realized part we simply name values by their specific symbols. The unrealized part is referenced by adding a subscript q = 1, 2, ..., i − 1, so that \(U_{i, M-i+1+q}\) refers to future cells of row i.

Below we explain the steps for preparing the dataset (the realized part) for any policy year. We model interest rate, pricing, and reserving risks jointly by capturing them in a single data triangle. Prepare the insurance risk triangle shown in Table 1, which consists of the company’s “estimates” of ultimate losses \(U_i\) tracked on a policy year basis, by adding the following components:

- Pricing risk: Define the trimmed unearned premium reserve (UEPR)[5] as (1 − expense load) × written premium, an amount earned over time. At time 0, the insurance company receives premium income, which is earned as losses are incurred over the life of the policy. Once all losses are incurred and all premiums are earned, the UEPR for a given policy year goes to 0 and pricing risk disappears. Thus the first column of Table 1 is always the UEPR of the respective policy years and represents the best estimate of losses at that time.[6] This estimate changes over time as losses are incurred.

- Reserving risk: Allocate direct accident year losses, by age, to each policy year. Develop them to ultimate using incurred accident year age-to-ultimate development factors, and add the results to the UEPR at each respective age. This yields revised estimates of policy year ultimate losses at each age.

- Interest rate risk: Begin by recognizing that interest rates affect diagonal values only. Next, we need two additional quantities: the investment income offset underlying the rates for policyholder-supplied funds (by policy year), and the actual earned portfolio interest rate on the unearned premium and loss reserves. If the actual interest rate proves higher than expected, we decrease the diagonal to reflect the added investment income; if it proves lower, we increase the diagonal. In this way all diagonals are restated except the last, which remains unchanged because no actual interest rate data are yet available for it. Thus, since the final diagonal remains unchanged, interest rate risk adds uncertainty to cash flow timing only.

In GAAP accounting, capital gains and losses are part of the “actual earned interest income” underlying insurance risk triangles. For example, if a bond portfolio backing loss reserves decreases in value because of an increase in interest rates, the decrease lowers the actual return on policyholder-supplied funds and thus affects the diagonals of the insurance risk triangles.

A related issue concerns the investment income offset, which is set in advance for each policy year and cannot be changed. For example, investing policyholder-supplied funds at new money yields affects the actual portfolio return but not the investment income offset itself.
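One row of the insurance risk triangle could be assembled along the lines below. This is a minimal sketch under simplifying assumptions: each age carries a single, already-allocated policy year incurred amount and one age-to-ultimate factor (rather than separate accident year pieces), and the interest restatement is taken as (actual rate − offset rate) × invested funds, which is our illustrative assumption rather than a formula given in the text. The names `interest_restatement` and `insurance_risk_row` are hypothetical.

```python
from typing import List, Sequence


def interest_restatement(actual_rate: float, offset_rate: float,
                         invested_funds: float) -> float:
    """Hypothetical restatement: extra (positive) or missing (negative)
    investment income on the UEPR and loss reserves backing a policy year."""
    return (actual_rate - offset_rate) * invested_funds


def insurance_risk_row(trimmed_uepr: Sequence[float],
                       incurred: Sequence[float],
                       age_to_ult: Sequence[float],
                       interest_adj: Sequence[float]) -> List[float]:
    """Estimates of ultimate loss for one policy year at ages 0, 1, 2, ...

    trimmed_uepr[a]: (1 - expense load) x written premium still unearned at age a
    incurred[a]:     policy year incurred losses at age a
    age_to_ult[a]:   incurred age-to-ultimate factor applied at age a
    interest_adj[a]: restatement for investment income at age a
    """
    last = len(trimmed_uepr) - 1
    row = []
    for a in range(len(trimmed_uepr)):
        # Pricing risk (unearned premium) plus reserving risk (developed losses).
        estimate = trimmed_uepr[a] + incurred[a] * age_to_ult[a]
        if a < last:
            # Higher-than-expected investment income lowers the estimate;
            # the latest diagonal has no actual rate yet and is left unchanged.
            estimate -= interest_adj[a]
        row.append(estimate)
    return row


# Illustrative policy year: written premium 100, expense load 30%.
adj = [0.0, interest_restatement(0.05, 0.04, 50.0),
       interest_restatement(0.03, 0.04, 20.0), 0.0]
row = insurance_risk_row([70.0, 35.0, 0.0, 0.0],
                         [0.0, 30.0, 62.0, 66.0],
                         [1.0, 1.6, 1.1, 1.0],
                         adj)
```

The first entry equals the trimmed UEPR, matching the first column of Table 1, and the last entry is left unrestated.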

We assume that the triangle of errors contains all policy years underlying the economic cycle contemplated in the return period. Further, the loss data should be available until maturity for those policy years. Define:

- \(U_{i, M-i+1}\) = Insurance Risk amount for policy year i, estimated at development age M − i + 1

- \(e_{i, M-i+1}\) = Error for policy year i and development interval (M − i, M − i + 1)

\[ e_{i, M-i+1}=\ln \frac{U_{i, M-i+1}}{U_{i, M-i}}. \]

The triangle of errors in Table 2, derived from the insurance risk triangle in Table 1, indicates the extent of under- or over-pricing. The volatility of these log ratios reflects the insurance risk faced when writing the underlying policies: larger absolute values imply greater deviations from previous estimates. The signs of the errors may also prove informative. For example, a negative cumulative error for a policy year would imply rate redundancy for that policy year.
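Given an insurance risk triangle stored as ragged rows (oldest policy year first), the error triangle and each policy year's observed cumulative error follow directly from the definition of e above. The sketch below is a minimal illustration; the function names and the sample values are hypothetical.

```python
import math
from typing import List, Sequence


def error_triangle(risk: Sequence[Sequence[float]]) -> List[List[float]]:
    """Log ratios ln(U[a] / U[a-1]) between successive estimates in each row."""
    return [[math.log(row[a] / row[a - 1]) for a in range(1, len(row))]
            for row in risk]


def cumulative_errors(errors: Sequence[Sequence[float]]) -> List[float]:
    """Observed cumulative error per policy year, i.e. ln(latest / first)."""
    return [sum(row) for row in errors]


# Hypothetical triangle: each row is one policy year, ages 0, 1, 2, ...
risk = [[70.0, 82.5, 68.4, 66.0],
        [72.0, 80.0, 75.0],
        [75.0, 78.0]]
errors = error_triangle(risk)
cum = cumulative_errors(errors)
# cum[0] < 0: the first policy year developed below its initial estimate,
# which the text reads as rate redundancy for that year.
```

Because the log ratios telescope, each cumulative error equals the log of the latest estimate over the initial UEPR for that policy year.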

Note that the insurance risk triangles depend on charged premiums. They include any market adjustments present in the loss costs underlying rate indications, such as schedule rating credits/debits or experience rating modifications.

The non-catastrophe insurance risk and error triangles described above can be constructed from data commonly available to actuaries. Companies determining economic capital, as well as rating agencies, can prepare them for this purpose, which makes the triangles practical to implement.

From a practical standpoint, insurance risk triangles are not available in annual statements. This is not a shortcoming of the framework, since the true risk faced by an insurance company arises on a policy year basis, and policy year experience is always “older” than accident year experience. Current capital models often ignore the lag between the two, because Schedule P is compiled on an accident year basis and compiling accident year data is easier than mapping losses to policy years. For precisely this reason, capital requirements fall short when the lag is significant, especially in lines with substantial pricing risk such as property catastrophe. For lines where pricing risk is insignificant, such as auto physical damage and auto liability, one can, as an approximation, build insurance risk triangles on an accident year basis and ignore pricing risk. Hence, pragmatically, regulators may limit data calls for insurance risk triangles to companies suspected of being in trouble or to lines of business with high pricing risk. This discussion ties back to statements we made in section 3.1.

3.4. Incorporating catastrophic losses

Catastrophic losses are hit-and-miss events, such as hurricanes, for which the usual triangulation of data would leave missing values. Under this definition, tornadoes may be non-catastrophic in states such as Iowa, where they occur every year, but catastrophic in Maine, where they are rare. With this definition, we now show the adjustments needed to non-catastrophic insurance risk triangles in order to reflect catastrophic risk:

- Catastrophe models should be used to determine losses on current exposures due to actual (not modeled) historical catastrophes. This provides the company with a history long enough to capture cycles of such rare events. Even so, one would expect no loss in many calendar years.

- These losses must be combined with non-catastrophic losses to produce a “total” insurance risk triangle. The non-catastrophic losses should cover the same number of policy years as the catastrophic losses. This ensures that the triangle has no missing values, although some calendar years will show “spikes” due to actual catastrophic losses.

- The starting expected loss (Figure 1, time 0) includes charges for catastrophes.
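Combining catastrophe and non-catastrophe losses into a total triangle requires that both cover the same policy years and ages, so that no cells are missing. A minimal sketch, assuming both inputs are already expressed as each component's contribution to the ultimate loss estimate in every cell (the name `total_risk_triangle` is hypothetical), checks the shapes and adds cell by cell; catastrophe-free cells contribute zeros.

```python
from typing import List, Sequence


def total_risk_triangle(non_cat: Sequence[Sequence[float]],
                        cat: Sequence[Sequence[float]]) -> List[List[float]]:
    """Cell-by-cell sum of non-catastrophe and catastrophe contributions.

    Both triangles must cover the same policy years and development ages;
    otherwise the total triangle would have missing cells.
    """
    if len(non_cat) != len(cat) or any(len(a) != len(b)
                                       for a, b in zip(non_cat, cat)):
        raise ValueError("triangles must cover the same policy years and ages")
    return [[x + y for x, y in zip(a, b)] for a, b in zip(non_cat, cat)]


# Hypothetical example: a catastrophe affects only the second policy year.
total = total_risk_triangle([[70.0, 82.5], [72.0]],
                            [[0.0, 0.0], [9.0]])
```

The jump in the second row is the kind of calendar year "spike" described above.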

This model has several advantages over the current practice of determining capital for catastrophic losses separately and combining it with that for non-catastrophic losses. First, it measures errors between pricing and actual historical experience, rather than using modeled losses to determine capital. Second, the model is multivariate and captures covariance effects between catastrophic and non-catastrophic losses. Even if catastrophes themselves are independent of non-catastrophic events, the dollar losses may not be, because of macroeconomic and microeconomic variables such as inflation. Geography may also create a relationship between catastrophic and non-catastrophic losses, simply through differing construction standards. A univariate analysis ignores such covariance effects or, at best, relies on assumptions to quantify them.

For the rest of the paper, we will assume non-catastrophic insurance risk triangles with the understanding that catastrophic losses can be incorporated into the model.

3.5. Discussion: Insurance risk triangle

Above, we described how to construct insurance risk triangles and error triangles. Other variations exist; before proceeding to a case study, we discuss two of them, along with the treatment of underwriting cycles.

Instead of incurred losses, we can use paid losses to construct insurance risk triangles. In that case, we must use accident year paid age-to-ultimate development factors rather than incurred factors.[7] The choice between paid and incurred data depends on the company’s situation. If the company sets case reserves in a reasonable way, incurred data should be used, as they accurately reflect the company’s reserving situation.

Instead of using direct written premiums and losses to make insurance risk and error triangles, net written premiums and losses may be employed, resulting in a net insurance risk triangle. For such triangles we also use net age to ultimate factors from net reserve reviews. Use of direct or net insurance risk triangles will lead to direct or net capital.

We now turn to the underwriting cycles embedded in the data. If the data contain a complete underwriting cycle (peak and trough), the parameters measured from those data will encode the strength of the cycle. The data triangles used in this paper assume that at least one complete underwriting cycle has been observed. All parameters estimated from these data, and hence the model itself, are therefore conditional on the number of underwriting cycles the data contain.

Suppose that we are concerned about future underwriting cycles affecting past business written on a policy year basis. Future underwriting cycles can be driven by several factors, such as changes in laws, external market competition, and the external business environment (e.g., inflationary pressures). Actuaries commonly adjust for future cyclical effects by incorporating ancillary data, and our methodology readily accommodates such data.

3.6. Industry data

For capital modeling purposes, it is not appropriate to use industry data, for three reasons. First, combining estimates through credibility makes sense only if the estimates measure the same quantity. In a capital measurement context, the company’s data result from its unique exposures, geography, and other variables, whereas industry parameters are based on a different data set. Second, a company’s low volume of data[8] is not a problem but a reality that should be reflected in its parameter estimates. Third, parameter estimates based on sample means and variances have reasonably narrow confidence intervals (low standard errors) due to the strong law of large numbers.

Where a complete triangle is not available, such as for a company with a rapidly changing business mix in a given line or a new company with no data, the only option may be an ad hoc approach such as using the parameters of a peer company of similar size. However, it is never appropriate to apply industry factors and “adjust” them to a particular company when company-specific data are available. Similarly, industry “factors” could be calculated from the model and applied to all companies, but we do not recommend such an approach.

3.7. Case study

We end section 3 by presenting data for line X. The case study illustrates the key computational ideas described in section 3.3 and throughout the rest of the paper. As a practical matter, because a sample variance or covariance requires at least two observations, an extra row is needed to ensure that the last age (the last column of the triangle) has at least two data points. Thus M = 10, and policy year 2004 is the extra row.

4. Two effects model

Real-world changes often take place on a calendar year basis, and in Tables 1 and 2 the diagonals represent calendar years. Any “calendar year effect” will therefore induce dependence across both policy years (rows) and development periods (columns). From a mathematical perspective, we cannot appeal to independence arguments. Instead, we develop our model to recognize both policy year (row effect) and development interval (column effect) dependencies.

We present two distinct approaches to capital measurement. The first, value at risk (VaR), is a percentile measure of risk tolerance: capital is set so that the probability of insolvency is α. The second, conditional value at risk (CVaR), sets capital at the expected loss conditional on the loss exceeding a threshold, typically the VaR loss amount. Hence, for the same specified percentile α, CVaR is always at least as conservative as VaR. CVaR possesses a property of risk measures known as coherence (see Artzner et al. 1999), meaning that it satisfies a set of commonsense axioms. VaR lacks this property and can lead to perverse consequences in some circumstances.
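Given simulated (or otherwise sampled) total losses, both measures can be estimated empirically. The sketch below assumes one common convention: VaR is the empirical (1 − α) quantile, and CVaR is the average of the sampled losses at or beyond it. Other quantile conventions exist and give slightly different values in small samples. The function name `var_cvar` is hypothetical, and the lognormal sample is illustrative only.

```python
import math
import random
from typing import Sequence, Tuple


def var_cvar(losses: Sequence[float], alpha: float) -> Tuple[float, float]:
    """Empirical VaR and CVaR for tail probability alpha (e.g. 0.01).

    VaR is the smallest sample value with at least (1 - alpha) of the sample
    at or below it; CVaR is the mean of the sample values from VaR upward.
    """
    ordered = sorted(losses)
    n = len(ordered)
    k = min(n - 1, max(0, math.ceil((1.0 - alpha) * n) - 1))
    var = ordered[k]
    tail = ordered[k:]
    return var, sum(tail) / len(tail)


random.seed(2024)
sample = [random.lognormvariate(0.0, 0.25) for _ in range(100_000)]
var99, cvar99 = var_cvar(sample, 0.01)
```

Because CVaR averages only values at or above VaR, it can never fall below VaR, which is the sense in which it is the more conservative measure.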

4.1. Model postulates

Table 2, the error triangle, contains the observed half of a rectangle of values; the remaining values are unobserved. Because randomness arises only from the unobserved values, we ignore Table 2’s first row, which is assumed to be complete.[9] We postulate that the unobserved errors are multivariate normally distributed.[10] Additionally, for estimation purposes, we make the following two assumptions about Table 2:

- For any given column, the errors have the same marginal variance. This assumption permits the use of a sample variance as an estimate of the marginal population variance.

- Pairs of errors in any two columns have the same covariance. This permits the use of a sample covariance as an estimate of the population covariance.

The last two postulates are necessary for calibration of the parameters. However, note the following caveats. First, sample estimates should be updated as new data emerges each year. Second, the old “completed rows” from the insurance risk triangle in Table 1 greatly enhance accuracy in estimation and should be retained. We note a third postulate since it will be used to derive Theorem 1:

- For any given column, the errors have the same marginal mean. This permits the use of a sample mean as an estimate of the population mean.

This postulate proves unnecessary later, when we use additional data. Hence, we relax it below in subsection 4.4 when we present Theorem 2, but retain it for Theorem 1, presented in subsection 4.3.

4.2. Column effect: dependence of development periods

Given our postulates, for k = 1, 2, ..., i − 1,

\[ e_{i, M-i+1+k}=\ln \frac{U_{i, M-i+1+k}}{U_{i, M-i+k}} \sim N\left(\mu_{i, M-i+1+k}, \sigma_{i, M-i+1+k}^{2}\right) \]

Thus, each future entry in the error triangle in Table 2 is postulated as marginally normally distributed and the parameters vary by both rows and columns. In the context of capital, half the rectangle is observed and we are interested in the randomness due to the remaining half of unobserved normally distributed error values.

To capture column effects, we keep policy year i fixed and sum the unobserved errors over k = 1, 2, ..., i − 1:

\[ \begin{array}{l} e_{i}=\sum_{k=1}^{k=i-1} e_{i, M-i+1+k}=\sum_{k=1}^{k=i-1} \ln \frac{U_{i, M-i+1+k}}{U_{i, M-i+k}}=\ln \frac{U_{i, M}}{U_{i, M-i+1}} \\ U_{i, M}=U_{i, M-i+1} * \exp \left(e_{i}\right) \end{array} \tag{1} \]

Note that \(U_{i, M}\) is the unknown final policy year ultimate loss, while \(U_{i, M-i+1}\) is fixed and known. Due to the normality assumption,

\[ e_{i} \sim N\left(\begin{array}{c} \sum_{k=1}^{k=i-1} \mu_{i, M-i+1+k} \\ \sigma_{i}^{2}=\sum_{k=1}^{k=i-1} \sum_{l=1}^{l=i-1} \operatorname{cov}\left(e_{i, M-i+k+1}, e_{i, M-i+l+1}\right) \end{array}\right) \tag{2} \]

For a fixed i, the “column covariance matrix,” namely \(\left[\operatorname{cov}\left(e_{i, M-i+k+1}, e_{i, M-i+l+1}\right)\right]_{k, l=1}^{i-1}\), can be estimated from the observed half rectangle of errors (Table 2). The result is shown in Table 5.

For example, the (2–3, 3–4) entry is the sample covariance of the 2–3 and 3–4 columns of Table 4, taken over the policy years observed in both columns.

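Note that each \(\sigma_{i}^{2}\) in (2) is the sum of the Table 5 entries that remain after deleting the rows and columns of the development intervals already observed for policy year i: for the most recent policy year, all entries except the 0–1 row and column; for the next most recent, all entries after deleting the first two rows and columns; and so on. Table 6 shows these results.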

Similarly, for a fixed i, the error means can be estimated by averaging each column of observed values in Table 2. The sum \(\sum_{k=1}^{k=i-1} \mu_{i, M-i+1+k}\) then accumulates these means over the future development periods of policy year i. The cumulative column of Table 7 shows the results.
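The column estimates behind Tables 5 through 7 can be sketched as follows. This is a minimal illustration, assuming the error triangle is stored as ragged rows (oldest policy year first, complete rows at the top) and that each covariance is computed over the policy years observed in both columns; the function names are hypothetical. Because covariances are estimated pair by pair, the resulting matrix is not guaranteed to be positive semidefinite, which is worth checking in practice. The observation-count check also shows why the case study needs an extra row.

```python
from typing import List, Sequence, Tuple

Triangle = Sequence[Sequence[float]]


def pairwise_cov(errors: Triangle, c: int, d: int) -> float:
    """Sample covariance (n - 1 divisor) of error columns c and d over the
    policy years in which both columns are observed."""
    pairs = [(row[c], row[d]) for row in errors if len(row) > max(c, d)]
    if len(pairs) < 2:
        raise ValueError(f"columns {c} and {d} share fewer than two observations")
    n = len(pairs)
    mx = sum(x for x, _ in pairs) / n
    my = sum(y for _, y in pairs) / n
    return sum((x - mx) * (y - my) for x, y in pairs) / (n - 1)


def column_cov_matrix(errors: Triangle) -> List[List[float]]:
    ncol = max(len(row) for row in errors)
    return [[pairwise_cov(errors, c, d) for d in range(ncol)] for c in range(ncol)]


def column_means(errors: Triangle) -> List[float]:
    ncol = max(len(row) for row in errors)
    return [sum(r[c] for r in errors if len(r) > c)
            / sum(1 for r in errors if len(r) > c) for c in range(ncol)]


def future_moments(cov: Sequence[Sequence[float]], means: Sequence[float],
                   n_future: int) -> Tuple[float, float]:
    """Cumulative mean and sigma_i^2 for a policy year whose last n_future
    (= i - 1) intervals are unobserved: the last n_future means and the
    lower-right n_future x n_future block of the column covariance matrix."""
    start = len(means) - n_future
    idx = range(start, len(means))
    return sum(means[start:]), sum(cov[k][l] for k in idx for l in idx)


# Hypothetical error triangle with two complete rows (the "extra row").
errors = [[0.10, 0.05, 0.01], [0.20, 0.00, 0.03], [0.15, 0.02], [0.05]]
cov = column_cov_matrix(errors)
means = column_means(errors)
mu_2, var_2 = future_moments(cov, means, 1)
```

With only one complete row, the last column would have a single observation and `pairwise_cov` would raise an error.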

From (1), since \(U_{i, M-i+1}\) is fixed, \(U_{i, M}\) has a lognormal distribution:

\[ \ln \frac{U_{i, M}}{U_{i, M-i+1}} \sim N\left(\begin{array}{c} \sum_{k=1}^{k=i-1} \mu_{i, M-i+1+k} \\ \sum_{k=1}^{k=i-1} \sum_{l=1}^{l=i-1} \operatorname{cov}\left(e_{i, M-i+k+1}, e_{i, M-i+l+1}\right) \end{array}\right) \]

\[ \ln U_{i, M} \sim N\left(\begin{array}{c} \ln U_{i, M-i+1}+\sum_{k=1}^{k=i-1} \mu_{i, M-i+1+k}, \\ \sum_{k=1}^{k=i-1} \sum_{l=1}^{l=i-1} \operatorname{cov}\left(e_{i, M-i+k+1}, e_{i, M-i+l+1}\right) \end{array}\right) \tag{3} \]

The above follows from (2). We can therefore estimate the policy year distribution.
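Equation (3) gives each policy year's ultimate loss distribution in closed form. A minimal sketch using the standard library's `statistics.NormalDist` for the normal quantile computes the lognormal mean, \(U_{i, M-i+1}\exp(\mu+\sigma^{2}/2)\), and the p-th percentile, \(U_{i, M-i+1}\exp(\mu+z_{p}\sigma)\). Here `mu` and `var` stand for the cumulative mean and \(\sigma_{i}^{2}\) from (2), and the function name `policy_year_ultimate` is hypothetical.

```python
import math
from statistics import NormalDist
from typing import Tuple


def policy_year_ultimate(latest: float, mu: float, var: float,
                         p: float) -> Tuple[float, float]:
    """Mean and p-th percentile of U_{i,M} when
    ln U_{i,M} ~ N(ln latest + mu, var), as in (3)."""
    mean = latest * math.exp(mu + 0.5 * var)
    percentile = latest * math.exp(mu + NormalDist().inv_cdf(p) * math.sqrt(var))
    return mean, percentile


# Hypothetical policy year: latest diagonal 80, cumulative mean 0.02, sigma^2 0.01.
mean, p99 = policy_year_ultimate(80.0, 0.02, 0.01, 0.99)
```

Setting p = 0.5 returns the median, `latest * exp(mu)`, which sits below the mean whenever the variance is positive.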

4.3. Row effect: Dependence of policy years

Our goal is to sum across all policy years and determine the distribution of

\[ U=\sum_{i=2}^{i=M} U_{i, M}. \]

Since each \(U_{i, M}\) is lognormal, U is a sum of dependent lognormal random variables. U has no closed-form distribution, but simulation techniques can be used to estimate its distribution (Appendix B). In this section we provide closed-form approximations, which are generally quite good. From (1), the total loss for all policy years can be written as

\[ U=\sum_{i=2}^{i=M} U_{i, M}=\sum_{i=2}^{i=M} U_{i, M-i+1} * \exp \left(e_{i}\right) \]

\[ U=V \sum_{i=2}^{i=M} r_{i} \exp \left(e_{i}\right) \]

\[ V=\sum_{i=2}^{i=M} U_{i, M-i+1} \tag{4} \]

\[ r_{i}=\frac{U_{i, M-i+1}}{\sum_{j=2}^{j=M} U_{j, M-j+1}} \tag{5} \]

Here V is the sum of the observed values along the diagonal of the insurance risk triangle (Table 1) for all open years, and the weights \(r_i\) are the proportions of V attributable to each policy year, so that they sum to 1. If the error random variables \(e_i\) are close to zero, consider the following Taylor series approximations:

\[ \begin{aligned} e & =\ln \frac{U}{V}=\ln \left[\sum_{i=2}^{i=M} r_{i} \exp \left(e_{i}\right)\right] \approx \ln \left[\sum_{i=2}^{i=M} r_{i}\left(1+e_{i}\right)\right] \\ & =\ln \left[1+\sum_{i=2}^{i=M} r_{i} e_{i}\right] \approx \sum_{i=2}^{i=M} r_{i} e_{i} \end{aligned} \]

The approximate log-ratio has a normal distribution and, thus, U has an approximate lognormal distribution. The variance of the normal distribution is

\[ \operatorname{Var}\left(\ln \frac{U}{V}\right) \approx \operatorname{Var}\left(\sum_{i=2}^{i=M} r_{i} e_{i}\right)=\sum_{j=2}^{j=M} \sum_{i=2}^{i=M} r_{i} r_{j} \operatorname{cov}\left(e_{i}, e_{j}\right). \tag{6} \]

From (2),

\[ E\left(\ln \frac{U}{V}\right) \approx E\left(\sum_{i=2}^{i=M} r_{i} e_{i}\right)=\sum_{i=2}^{i=M} r_{i} E\left(e_{i}\right)=\sum_{i=2}^{i=M} r_{i} \sum_{k=1}^{k=i-1} \mu_{i, M-i+1+k}. \tag{7} \]

Now,

\[ \sum_{j=2}^{j=M} \sum_{i=2}^{i=M} r_{i} r_{j} \operatorname{cov}\left(e_{i}, e_{j}\right)=\underbrace{\sum_{i=2}^{i=M} r_{i}^{2} \operatorname{Var}\left(e_{i}\right)}_{\text{column effect}}+\underbrace{2 \sum_{2 \leq i<j \leq M} r_{i} r_{j} \operatorname{cov}\left(e_{i}, e_{j}\right)}_{\text{row effect}}. \tag{8} \]

The estimation of \(\operatorname{Var}(e_i)\) was discussed under the column effect (subsection 4.2). The second term is due to dependence between policy years (row effect). For 2 ≤ i < j ≤ M and using (1),

\[ \begin{aligned} \operatorname{cov}\left(e_{i}, e_{j}\right) & =\operatorname{cov}\left(\sum_{k=1}^{k=i-1} e_{i, M-i+k+1}, \sum_{m=1}^{m=j-1} e_{j, M-j+m+1}\right) \\ & =\sum_{k=1}^{k=i-1} \sum_{m=1}^{m=j-1} \operatorname{cov}\left(e_{i, M-i+k+1}, e_{j, M-j+m+1}\right). \end{aligned} \tag{9} \]

The matrix of covariances in (9) is \((i-1) \times (j-1)\), so in general it is not square and we cannot assume any symmetry. As with the estimation of \(\operatorname{Var}(e_i)\), the double sum is over unobserved values and can be estimated from the error triangle (Table 2) using column data. For fixed i and j, we need the sum of all covariances between the future columns of these two policy years. To illustrate, if M = 8, then for policy years i = 3 and j = 4 (k = 1, 2; m = 1, 2, 3), equation (9) requires the covariances of the following pairs (column of policy year i, column of policy year j), which are then added:

(i) k = 1: (7,6), (7,7), (7,8);

(ii) k = 2: (8,6), (8,7), (8,8).
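The index bookkeeping in (9) is easy to get wrong by hand. This small Python sketch lists the column pairs whose covariances are summed for given M, i and j, using the subscripts exactly as written in (9). The function name is ours and is not taken from the paper's Appendix C.

```python
def covariance_column_pairs(M, i, j):
    """Return the (column for year i, column for year j) index pairs in eq. (9).

    Future columns of policy year i are M - i + k + 1 for k = 1, ..., i - 1;
    those of policy year j are M - j + m + 1 for m = 1, ..., j - 1.
    """
    cols_i = [M - i + k + 1 for k in range(1, i)]
    cols_j = [M - j + m + 1 for m in range(1, j)]
    return [(a, b) for a in cols_i for b in cols_j]


pairs = covariance_column_pairs(M=8, i=3, j=4)
print(pairs)  # [(7, 6), (7, 7), (7, 8), (8, 6), (8, 7), (8, 8)]
```

There are always \((i-1)(j-1)\) pairs, one for each element of the matrix \(C_{ij}\) defined below.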

The process is computationally feasible: for reasonably sized triangles it takes a few seconds on a typical computer (to see how this might be executed in R, see Appendix C). Next, to simplify our notation, we express the result in matrix form. Let

\[ \begin{aligned} i_{i}^{\prime} & =(1, \ldots, 1)_{1 \times(i-1)} \\ i_{j}^{\prime} & =(1, \ldots, 1)_{1 \times(j-1)} \\ r^{\prime} & =\left(r_{2}, \ldots, r_{M}\right)_{1 \times(M-1)} \\ \mu^{\prime} & =\left(\sum_{k=1}^{1} \mu_{2, M-1+k}, \sum_{k=1}^{2} \mu_{3, M-2+k}, \ldots, \sum_{k=1}^{M-1} \mu_{M, k+1}\right)_{1 \times(M-1)} \end{aligned} \]

\[ C_{i j}=\begin{pmatrix} \operatorname{cov}\left(e_{i, M-i+2}, e_{j, M-j+2}\right) & \cdots & \operatorname{cov}\left(e_{i, M-i+2}, e_{j, M-j+1+m}\right) & \cdots & \operatorname{cov}\left(e_{i, M-i+2}, e_{j, M}\right) \\ \vdots & & \vdots & & \vdots \\ \operatorname{cov}\left(e_{i, M-i+1+k}, e_{j, M-j+2}\right) & \cdots & \operatorname{cov}\left(e_{i, M-i+1+k}, e_{j, M-j+1+m}\right) & \cdots & \operatorname{cov}\left(e_{i, M-i+1+k}, e_{j, M}\right) \\ \vdots & & \vdots & & \vdots \\ \operatorname{cov}\left(e_{i, M}, e_{j, M-j+2}\right) & \cdots & \operatorname{cov}\left(e_{i, M}, e_{j, M-j+1+m}\right) & \cdots & \operatorname{cov}\left(e_{i, M}, e_{j, M}\right) \end{pmatrix}_{(i-1) \times(j-1)}, \quad k \leq i-1,\ m \leq j-1,\ 2 \leq i, j \leq M \]

Note that in matrix notation we can obtain all variances and covariances as follows:

\[ \operatorname{cov}\left(e_{i}, e_{j}\right)=i_{i}^{\prime} C_{i j} i_{j}, \qquad 2 \leq i, j \leq M. \tag{10} \]

The above bilinear form simply sums all elements of the matrix \(C_{ij}\). This form is convenient because setting i = j gives the variances. Arranging these terms in matrix form gives the covariance matrix of errors by policy year,

\[ \Sigma=\left(i_{i}^{\prime} C_{i j} i_{j}\right)_{2 \leq i, j \leq M}, \quad \text{an } (M-1) \times(M-1) \text{ matrix}. \tag{11} \]
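Equations (9) to (11) amount to summing column covariances over blocks. The following Python sketch assembles Σ under one stated assumption: `col_cov(a, b)` is a hypothetical, caller-supplied estimate of the covariance between error-triangle columns a and b. The paper estimates these quantities from column data (Appendix C), and that estimator is not reproduced here.

```python
def sigma_matrix(M, col_cov):
    """Covariance matrix of policy-year errors, eq. (11), as nested lists.

    Entry [i-2][j-2] is i_i' C_ij i_j, i.e. the sum of every element of C_ij
    (eq. (10)). col_cov(a, b) is a caller-supplied (hypothetical) estimate of
    the covariance between error-triangle columns a and b.
    """
    years = range(2, M + 1)
    sigma = []
    for i in years:
        row = []
        for j in years:
            # Future columns follow the subscripts of eq. (9).
            row.append(sum(col_cov(M - i + k + 1, M - j + m + 1)
                           for k in range(1, i) for m in range(1, j)))
        sigma.append(row)
    return sigma


def toy_cov(a, b):
    # Assumed toy values: column variance 0.01, cross-column covariance 0.002.
    return 0.01 if a == b else 0.002


S = sigma_matrix(3, toy_cov)
print(S)
```

Because the bilinear form only sums entries, Σ is symmetric whenever `col_cov` is symmetric, even though the individual blocks \(C_{ij}\) are not square.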

Table 8 gives an example of the matrix.

The [2011, 2012] entry is explained in Appendix C. Table 7 reports μ′ in the “cumulative mu” column, and r′ is shown in Table 9. Using equation (4) for the scalar term and the results above, we have now shown the following result:

Theorem 1

\[ \ln U \sim N\left(\ln \sum_{i=2}^{i=M} U_{i, M-i+1}+r^{\prime} \mu, r^{\prime} \Sigma r\right). \tag{12} \]
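Once r, μ and Σ are in hand, equation (12) reduces to a few lines of arithmetic. The Python sketch below uses made-up inputs for two open policy years, not the values in the paper's tables.

```python
import math


def quad_form(x, A):
    """x' A x for a list x and a square nested-list matrix A."""
    n = len(x)
    return sum(x[a] * A[a][b] * x[b] for a in range(n) for b in range(n))


def theorem1_params(diagonal, mu, sigma):
    """Mean and variance of ln U under eq. (12).

    diagonal: latest observed values U_{i,M-i+1}, i = 2..M
    mu:       vector mu' (summed expected log-errors per policy year)
    sigma:    covariance matrix Sigma from eq. (11)
    """
    V = sum(diagonal)
    r = [u / V for u in diagonal]                      # weights, eq. (5)
    mean = math.log(V) + sum(ri * mi for ri, mi in zip(r, mu))
    var = quad_form(r, sigma)
    return mean, var


# Hypothetical inputs for two open policy years.
m, v = theorem1_params([60.0, 40.0], [0.05, 0.10], [[0.01, 0.002], [0.002, 0.02]])
print(f"E[ln U] = {m:.4f}, Var[ln U] = {v:.5f}")
```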

4.4. Incorporating future conditions

We discussed postulate (iii) earlier in subsection 4.2. This postulate can be relaxed if we want to consider additional data from outside of the model. This situation may arise, for example, if management wants to incorporate its estimates of future conditions in the capital framework.

Suppose that the expected ultimate losses by policy year (Table 9) are known from standard actuarial techniques or company projections:

\[ E\left(U_{i, M}\right)=L_{i, M} \tag{13} \]

Then, since \(U = \sum_{i=2}^{M} U_{i,M}\), linearity of expectation gives

\[ E(U)=E\left(\sum_{i=2}^{M} U_{i, M}\right)=\sum_{i=2}^{M} E\left(U_{i, M}\right)=\sum_{i=2}^{M} L_{i, M}. \]

We mentioned in Section 2 the usefulness of incorporating future conditions in the capital framework. Equation (13) provides a way to incorporate these beliefs. Based on the above, we have now shown the following result:

Theorem 2

\[ \ln U \sim N\left(\theta=\ln \sum_{i=2}^{i=M} L_{i, M}-\frac{r^{\prime} \Sigma r}{2}, \omega^{2}=r^{\prime} \Sigma r\right) \tag{14} \]

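The above is justified because the mean of a lognormal distribution with parameters θ and ω² is \(\exp(\theta + \omega^2/2)\), so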

\[ \exp \left(\ln \sum_{i=2}^{i=M} L_{i, M}-\frac{r^{\prime} \Sigma r}{2}+\frac{r^{\prime} \Sigma r}{2}\right)=\sum_{i=2}^{i=M} L_{i, M}=E(U). \]
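A short Python sketch of Theorem 2 follows, with hypothetical projections \(L_{i,M}\), weights and Σ. It also confirms numerically that the lognormal mean recovers \(\sum L_{i,M}\).

```python
import math


def theorem2_params(expected_losses, r, sigma):
    """(theta, omega^2) of eq. (14): ln U ~ N(theta, omega^2)."""
    n = len(r)
    omega2 = sum(r[a] * sigma[a][b] * r[b] for a in range(n) for b in range(n))
    theta = math.log(sum(expected_losses)) - omega2 / 2
    return theta, omega2


# Hypothetical projected ultimate losses L_{i,M}, weights r_i and Sigma.
L = [650.0, 450.0]
theta, omega2 = theorem2_params(L, [0.6, 0.4], [[0.01, 0.002], [0.002, 0.02]])

# The lognormal mean exp(theta + omega^2 / 2) should equal sum(L) = E(U).
print(math.exp(theta + omega2 / 2))
```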

Comments:

- The use of Theorem 2 alleviates the need to estimate the vector μ. Henceforth we use Theorem 2, since in practical cases the expected losses \(L_{i,M}\) are available.
- The variance component r′Σr is based on historical data alone and changes only as the data change. In actuarial and regulatory contexts, this will usually happen once a year, when the model is updated.

5. Capital measurement

The aforementioned property of coherence makes the CVaR approach appealing. VaR, however, lacks this property, which leaves the user open to counterproductive choices in capital management. This shortcoming is worth noting because VaR is widely used and because its use is mandated by some proposed regulatory régimes.

5.1. Value at risk (VaR) capital

Using (14), we define

\[ U^{V a R}:=\exp \left(\ln \sum_{i=2}^{i=M} L_{i, M}-\frac{r^{\prime} \Sigma r}{2}+z_{1-\alpha} \sqrt{r^{\prime} \Sigma r}\right) \tag{15} \]

H ≔ Held loss reserves[11] + Held unearned premium reserves (line) + Policy year cumulative paid loss to date

I ≔ Future investment income on held loss and unearned premium reserves

The full value capital under VaR is given by

\[ C^{V a R}:=U^{V a R}-H-I . \tag{16} \]
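Equations (15) and (16) can be sketched in Python as follows. All inputs are hypothetical. The confidence level 0.975 is chosen so that \(z_{1-\alpha} \approx 1.96\), matching the value used for Table 10.

```python
import math
from statistics import NormalDist


def var_capital(theta, omega2, H, I, level=0.975):
    """U^VaR from eq. (15) and full-value capital C^VaR = U^VaR - H - I, eq. (16).

    theta, omega2: lognormal parameters of U from eq. (14)
    level:         1 - alpha; 0.975 gives z of about 1.96
    """
    z = NormalDist().inv_cdf(level)
    u_var = math.exp(theta + z * math.sqrt(omega2))
    return u_var, u_var - H - I


# Hypothetical figures: sum of L_{i,M} = 1000, omega^2 = 0.04, H = 1100, I = 50.
theta = math.log(1000.0) - 0.04 / 2
u_var, c_var = var_capital(theta, 0.04, H=1100.0, I=50.0)
print(f"U_VaR = {u_var:.1f}, C_VaR = {c_var:.1f}")
```

Passing I = 0 reproduces the convention of Table 10, where I is not subtracted.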

Note that future investment income on held loss and unearned premium reserves should be calculated at the appropriate interest rate. For risk-based capital we would use SAP accounting rules, and for economic capital we would use GAAP accounting rules. In both cases, we would use the current asset mix.

We assume that capital is invested in risk-free assets. To find discounted capital, we discount at the risk-free rate over the duration determined from the insurance risk triangle. This duration reflects the runoff of both loss reserves and unearned premium reserves.
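The text does not spell out the discounting formula, so the following Python code is only a sketch under two stated assumptions. It uses annual compounding at the risk-free rate, and it computes the duration as the payment-weighted average time of a hypothetical runoff pattern standing in for the one read off the insurance risk triangle.

```python
def runoff_duration(runoff):
    """Payment-weighted average time (years) of a runoff pattern.

    ASSUMPTION: runoff[t-1] is the share of reserves (loss plus unearned
    premium) expected to run off in year t; this simple average stands in
    for the duration derived from the insurance risk triangle.
    """
    total = sum(runoff)
    return sum(t * amount for t, amount in enumerate(runoff, start=1)) / total


def discounted_capital(capital, risk_free, duration):
    """ASSUMPTION: discount with annual compounding at the risk-free rate."""
    return capital / (1.0 + risk_free) ** duration


D = runoff_duration([0.5, 0.3, 0.2])        # hypothetical runoff pattern
print(D, discounted_capital(300.0, 0.03, D))
```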

The undiscounted capital calculation (\(z_{1-\alpha} = 1.96\)) is shown in Table 10, where we have not subtracted I.

The capital is sensitive to the variance parameter. In particular, smaller companies/lines will generate larger variances and larger capital requirements.

5.2. Value at risk (VaR) capital for multiple lines

Suppose that there are two lines of business. Each can be analyzed separately using the method previously outlined. However, it is likely that the results for the lines are not independent.

We can combine the data (losses in Table 1) from the two lines into a single triangle and analyze it using the methods of this paper. When finished, there will be a distribution for each line separately and one for the combined lines. That the combined data are “less volatile,” in the sense of yielding lower sample variances, is not a “problem”; it reflects the reality that in many cases a company faces lower volatility in its overall loss experience than in any single line. Where a line’s mix is changing rapidly, it is possible to simulate ultimate losses (for all policy years) for that line and then combine them with the other lines using the covariance matrix. We do not provide details in this paper, but the calculations are similar to those in Appendix B. Beliefs about future development of losses on these policy years can be incorporated through the expected losses \(L_{i,M}\) of Theorem 2, while emerging data will drive the estimation of the variance parameters.

5.3. Capacity

Capacity ≔ Sum of individual line capital − Total capital for all lines combined

Thus, capacity results from diversification across lines. Positive capacity lowers the capital requirement of the company and thus enhances solvency.
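The capacity definition is simple arithmetic. Here it is as a minimal Python function with hypothetical capital figures.

```python
def capacity(line_capitals, combined_capital):
    """Capacity := sum of individual line capital - total capital for all lines combined."""
    return sum(line_capitals) - combined_capital


# Hypothetical: two lines needing 300 and 200 stand-alone, 420 when combined.
cap = capacity([300.0, 200.0], 420.0)
print(cap)  # 80.0
```

Because VaR is not subadditive, capacity computed from VaR capital can in principle be negative. A coherent measure such as CVaR rules this out.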

5.4. Conditional value at risk (CVaR) capital

The capital can also be measured as a function of conditional expected cost in excess of a given dollar amount d. This is called conditional value at risk (CVaR) capital and is a more general case of tail value at risk (TVaR) capital, which requires a certain choice of d explained below. Note that, as a consequence of the positive lognormal support, U ≥ 0. Using the CVaR definition,

\[ \begin{aligned} U^{C V a R} & :=E(U \mid U>d)=\frac{\int_{d}^{\infty} x f_{U}(x)\, d x}{1-\int_{0}^{d} f_{U}(x)\, d x}=\frac{\int_{d}^{\infty} x f_{U}(x)\, d x}{1-F_{U}(d)} \\ & =\frac{\exp \left(\theta+\frac{\omega^{2}}{2}\right)\left\{1-\Phi\left(\frac{\ln d-\theta-\omega^{2}}{\omega}\right)\right\}}{1-\Phi\left(\frac{\ln d-\theta}{\omega}\right)}. \end{aligned} \tag{17} \]

For a given d, the above gives the stress value of the loss for all policy years combined. As with VaR, extension to multiple lines can be accomplished using total company-wide data. The full value capital under CVaR is given by

\[ C^{C V a R}:=U^{C V a R}-H-I \tag{18} \]
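The Python sketch below evaluates the closed form in (17) and cross-checks it by directly integrating \(x f_U(x)\) above d with the trapezoid rule, after the substitution \(U = \exp(\theta + \omega z)\). The parameters, H and I are hypothetical.

```python
import math
from statistics import NormalDist

N = NormalDist()


def cvar_lognormal(theta, omega, d):
    """U^CVaR = E(U | U > d) for ln U ~ N(theta, omega^2), closed form of eq. (17)."""
    numer = math.exp(theta + omega ** 2 / 2) * (
        1.0 - N.cdf((math.log(d) - theta - omega ** 2) / omega))
    denom = 1.0 - N.cdf((math.log(d) - theta) / omega)
    return numer / denom


def cvar_quadrature(theta, omega, d, steps=20_000, width=12.0):
    """Cross-check: trapezoid rule for E(U | U > d) in the standard-normal variable z."""
    z0 = (math.log(d) - theta) / omega
    h = width / steps
    total = 0.0
    for s in range(steps + 1):
        z = z0 + s * h
        weight = 0.5 if s in (0, steps) else 1.0
        total += weight * math.exp(theta + omega * z) * N.pdf(z)
    return total * h / (1.0 - N.cdf(z0))


# Hypothetical parameters: E(U) = 1000, omega = 0.2, threshold d = 1200, H = 1100, I = 50.
theta, omega, d = math.log(1000.0) - 0.2 ** 2 / 2, 0.2, 1200.0
u_cvar = cvar_lognormal(theta, omega, d)
c_cvar = u_cvar - 1100.0 - 50.0                 # eq. (18)
print(u_cvar, cvar_quadrature(theta, omega, d), c_cvar)
```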

To illustrate, suppose d is set to the VaR percentile \(U^{VaR}\) given by equation (15). This special case of CVaR is called TVaR and is more conservative than VaR:

\[ d=U^{V a R}=\exp \left(\ln \sum_{i=2}^{M} L_{i, M}-\frac{r^{\prime} \Sigma r}{2}+z_{1-\alpha} \sqrt{r^{\prime} \Sigma r}\right). \tag{19} \]

A comparison of VaR versus CVaR capital for the same parameters is given in Table 11.
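The VaR/TVaR relationship can be reproduced on toy inputs. The Python sketch below uses hypothetical parameters, not those behind Table 11. It sets d to the VaR stress loss of (15) and applies the closed form (17).

```python
import math
from statistics import NormalDist

N = NormalDist()


def var_and_tvar(theta, omega, level=0.975):
    """U^VaR from eq. (15) and TVaR = E(U | U > U^VaR), eqs. (17) and (19)."""
    u_var = math.exp(theta + N.inv_cdf(level) * omega)
    ln_d = math.log(u_var)
    tvar = (math.exp(theta + omega ** 2 / 2)
            * (1.0 - N.cdf((ln_d - theta - omega ** 2) / omega))
            / (1.0 - N.cdf((ln_d - theta) / omega)))
    return u_var, tvar


theta = math.log(1000.0) - 0.04 / 2     # hypothetical: E(U) = 1000, omega^2 = 0.04
u_var, u_tvar = var_and_tvar(theta, 0.2)
print(f"VaR stress loss {u_var:.1f}, TVaR stress loss {u_tvar:.1f}")
```

Since H and I are the same in (16) and (18), the TVaR capital exceeds the VaR capital by exactly the difference between the two stress losses.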

6. Applications

The model presented is versatile and rich in applications to other important areas. We discuss some of these below.

6.1. European Solvency II regulation

European regulation for insurance companies (Solvency II) requires a one-year forward distribution of the ultimate loss. This can be obtained by noting that one-year forward means that k = m = 1, so the matrix \(C_{ij}\) reduces to a scalar.

There are no “fixed” models prescribed in Solvency II, and a model can be filed for approval. The current model has several advantages. First, it provides a natural way to measure “1 year” risk, which is key to Solvency II. Second, the model ties back to reserve reviews, which is important to regulators. Third, the model is mathematically justified, simple to understand, and applicable across all types of property-liability insurers (including niche lines such as private mortgage insurance), which is also important to regulators. The last point matters because no special models have to be created for niche lines.
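With k = m = 1, each \(C_{ij}\) keeps only its top-left element, \(\operatorname{cov}(e_{i,M-i+2}, e_{j,M-j+2})\). A Python sketch of the resulting one-year matrix follows. As before, `col_cov` is a hypothetical, caller-supplied column-covariance estimate. Using this matrix in place of Σ in Theorem 2 is our reading of the one-year reduction, not code from the paper.

```python
def one_year_sigma(M, col_cov):
    """One-year-forward covariance matrix: with k = m = 1, C_ij is the scalar
    cov(e_{i,M-i+2}, e_{j,M-j+2}). col_cov(a, b) is a caller-supplied
    (hypothetical) estimate of the covariance between error-triangle columns a, b.
    """
    years = range(2, M + 1)
    return [[col_cov(M - i + 2, M - j + 2) for j in years] for i in years]


def toy_cov(a, b):
    # Assumed toy values: column variance 0.01, cross-column covariance 0.002.
    return 0.01 if a == b else 0.002


print(one_year_sigma(3, toy_cov))  # [[0.01, 0.002], [0.002, 0.01]]
```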

6.2. Loss reserve uncertainty

The model presented represents an enhancement of Rehman and Klugman (2010), in which accident years were assumed to be independent. To see the connection with our model, suppose that instead of insurance risk triangles we used accident year triangles whose diagonals are the selected ultimate losses. Under SAP, these will come from Schedule P Part 2 (which includes bulk and IBNR reserves), while under GAAP they will come from historical company reserve reviews.

In this case, the data contain only reserving risk, which means that capital is due to this single source of risk alone. Theorems 1 and 2 provide the distribution of U. The mean of U in Theorem 1 has an interesting interpretation in this case: it corrects the “biases” of the actuary when selecting the ultimate losses. Once the parameters θ and ω are known, we can determine the following measures:

Reserve margins. Under International Accounting Standards Board (IASB) standards, reserve margins are now required. These margins are amounts or cushions above held reserves. Using the CVaR and VaR approaches described above, we can determine the stress value of U. The definition of H in equation (16) is modified in this case:

H ≔ Held loss reserves[12] + Accident year cumulative paid loss to date.

The full value reserve margin under VaR is given by

\[ C^{V a R}:=U^{V a R}-H . \tag{20} \]

Reserve confidence intervals. These are obtained from the confidence interval of U minus cumulative paid losses.
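Both reserve measures can be sketched in a few lines of Python. All inputs are hypothetical accident-year figures. The two confidence levels are our choices for illustration, not values prescribed in the text.

```python
import math
from statistics import NormalDist


def reserve_margin(theta, omega, held_reserves, paid, level=0.975):
    """C^VaR = U^VaR - H, with H = held loss reserves + accident-year paid to date, eq. (20)."""
    u_var = math.exp(theta + NormalDist().inv_cdf(level) * omega)
    return u_var - (held_reserves + paid)


def reserve_interval(theta, omega, paid, level=0.95):
    """Two-sided confidence interval for U, minus cumulative paid losses."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return (math.exp(theta - z * omega) - paid, math.exp(theta + z * omega) - paid)


theta, omega = math.log(800.0), 0.1      # hypothetical accident-year parameters
margin = reserve_margin(theta, omega, held_reserves=350.0, paid=450.0)
lo, hi = reserve_interval(theta, omega, paid=450.0)
print(margin, (lo, hi))
```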

6.3. Net capital

Net capital ≔ Direct capital − Ceded capital.

If the VaR approach is used to set capital, then \(z_{1-\alpha}\) for the direct and ceded analyses should be the same. Likewise, for CVaR, d should be calculated using a consistent percentile.

An alternative to the above approach is to conduct a net analysis directly using net written premiums and net losses in the data step. In that case, the net capital can be estimated directly.
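The consistency requirement is easiest to enforce by passing a single confidence level to both calculations. In the Python sketch below, the dictionary layout and all parameter values are hypothetical.

```python
import math
from statistics import NormalDist


def var_capital(theta, omega, H, I, level):
    """Full-value VaR capital, eqs. (15)-(16), for one set of lognormal parameters."""
    return math.exp(theta + NormalDist().inv_cdf(level) * omega) - H - I


def net_capital(direct, ceded, level=0.975):
    """Net capital := direct capital - ceded capital, using ONE confidence level for both.

    direct, ceded: dicts with keys theta, omega, H, I (hypothetical inputs).
    """
    return var_capital(level=level, **direct) - var_capital(level=level, **ceded)


direct = {"theta": math.log(1000.0) - 0.02, "omega": 0.2, "H": 1100.0, "I": 50.0}
ceded = {"theta": math.log(300.0) - 0.045, "omega": 0.3, "H": 330.0, "I": 15.0}
print(net_capital(direct, ceded))
```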

7. Conclusion

In this paper, we develop a reasonable capital adequacy framework that can be easily implemented using data commonly available to company actuaries. The flexible framework provided can be adapted to meet the needs of rating agencies, company management and regulators and updated along with annual reserve reviews. Our capital adequacy model is also compatible with European Solvency II’s risk-based economic capital framework.

Our framework captures pricing risk, interest rate risk, and reserving risk more accurately than RBC risk charges do, and we recommend that it replace the current calculation of RBC risk charges. These risks cannot be treated as independent, so we model them together without making assumptions about the correlations between them. We present a two-effects (row and column) model, including postulates and two theorems, together with the common capital measures of value at risk and conditional value at risk.

We follow our discussion of model development with three significant applications: European Solvency II regulation; loss reserve uncertainty and margins under IASB accounting standards, which generalizes the work of Rehman and Klugman (2010); and net capital estimation, which is useful when considering the consequences of a reinsurance program. European Solvency II regulations require a one-year forward distribution of ultimate losses, which is easily obtained using the model.

The current approach would have resulted in much higher capital requirements for PMI companies such as AIG’s United Guaranty. This is due to the inherent historical volatility in housing data: home prices undergo a “bad patch” for several years in a row, often regionally, resulting in extremely large loss ratios for PMI companies. The “bad patch” leads to correlated years of losses, and the traditional models, as well as RBC, underestimate this “effect.” In addition, PMI companies face significant pricing risk because premiums are earned over a long period of time,[13] and the insurance risk triangle captures this pricing risk. In traditional accident year models, this pricing risk would be ignored, causing the true risk to be underestimated.

Current risk-based capital frameworks used by U.S. insurance regulators and rating agencies often fail to signal capital deficiency problems until it is too late. In this paper, we propose an alternative model that relies on data commonly available to actuaries. The framework allows for a modified calculation of risk-based capital amounts under statutory accounting rules, as well as the computation of economic capital amounts using going concern accounting rules. Thus, it should be useful to companies, regulators, and rating agencies alike. The model accommodates the European Solvency II framework as well.

The model relies on construction of incurred insurance risk triangles and corresponding error triangles using direct premiums written to estimate direct capital. However, we also show that, with some minor modifications, paid insurance risk triangles can be used. Also, if net triangles are employed, estimates of net capital can be produced.

The methodology presented permits incorporation of ancillary data, something that actuaries often do to adjust for future changes in laws, external market competition, and inflationary pressures, all of which can drive future underwriting cycles. Thus, the model enables actuaries to adjust past data for future cycles.

To assist the reader in understanding how the model can be implemented, we provide a case study in subsection 3.5 and include four appendices. Appendix A shows how to calculate implied link ratios. Appendix B provides model details that are useful when working with simulated data, if modeling total line losses as lognormal proves problematic. Appendix C gives the R code used to estimate the covariance matrices by policy year. Finally, Appendix D shows capital allocation results for companies that wish to deploy them. We hope that these enhancements prove helpful to the reader when implementing the accompanying framework.

Acknowledgments

We are indebted to Lisa Gardner (Professor, Drake University) for her help with the introduction and literature review of the paper. Lisa also proofread the document and made valuable suggestions.