1. Introduction

Actuaries constantly build models. One of the beauties of mathematics is that it allows us to take random real-world phenomena, build a representation in the language of mathematics, and apply various tools allowing reasonable, and often surprisingly accurate, predictions about future phenomena. Of course, these models are just that—models, estimates of event behavior. If all real phenomena were perfectly predictable the universe would be a rather dull place.

Actuarially speaking, “risk” has a specific definition: it is the potential for future events to deviate from their expectation (Actuarial Standards Board 2012). There are many reasons why this could occur. The most basic reason is simply that such events are random events. The very process which generates the event has inherent uncertainty. Even if the model were perfectly specified, the stochastic nature of the process prevents absolute foreknowledge. In actuarial terminology this is called process risk. Another reason why events may differ from their expectation is that the expectation itself is somehow imperfect. The error arising from an incorrect framework specification, e.g., a lognormal distribution instead of a gamma, is called model risk. A third reason for deviation from expectation is that since the data used to select parameters for the model is inherently finite, the parameter estimators based on this sample may not be the perfect point estimates for the true underlying parameters. This source of risk is what is usually referred to as parameter risk. Sometimes, the more precise term finite-sample parameter risk is used to distinguish it from a separate cause of parameter risk: the possibility that parameters may change over time. This paper will focus on finite-sample parameter risk, and, for convenience, will use the term “parameter risk.” An introduction to these and other examples of potential bias and distortion can be found in Venter and Sahasrabuddhe (2012).

In the remainder of this paper, Section 2 will introduce Van Kampen’s data and bootstrapping method, Section 3 will discuss parameter risk estimation using maximum likelihood, Section 4 will use Bayesian Markov Chain Monte Carlo (MCMC) to estimate the parameter risk, and Section 5 will compare the methods and results. The appendix will bring much of the source code used to fit the models and generate this paper, which is predominantly performed using R (R Core Team 2014).[1] Bringing actual code, instead of only results, is intended to enhance transparency and reproducibility. While basic knowledge of R and MCMC techniques is not required, it would help the reader interested in reproducing or extending the results.

2. Bootstrapping

Van Kampen discusses estimating both a ground up loss ratio and the expected loss for an aggregate stop loss reinsurance contract. Table 1 reproduces the data, a sequence of historical ground-up loss ratios and the corresponding experience to an aggregate stop loss cover of 2.5% excess of 72.5%.

The approach taken by Van Kampen is to make an initial estimate and then create random variates on a grid of parameters near that estimate. All parameters sets which are deemed “close” are marked and used to create a new weighted average expectation. The theory is that the observed data leading to the best estimate of the parameters may have actually come from a different set of parameters. Allowing for the use of many sets of parameters and weighting them by some measure of probability that they were the “true” source will reflect parameter uncertainty. The bootstrap algorithm itself is:

-

Generate a possible μ and σ for the lognormal.

-

Generate 10,000 observations of 10-year blocks.

-

Compare the simulated mean, standard deviation, and skew of each of the simulated blocks with those of the original data. If any 10-year block has all three check values sufficiently close to the best estimate, the parameter set is marked as viable.

-

Assign a weight to each viable parameter set based on the number of its blocks found to be close.

-

Calculate a weighted average expected ground up and aggregate stop loss ratio using all the viable parameter sets to arrive at a final point estimate which now includes the impact of parameter uncertainty.

Based on Van Kampen’s description, the search grids was set as the intersections of 51 data points for µ in the range −1.549–0.226 (50 equispaced points and the addition of −0.45) and 79 equispaced data points for σ in the range 0.0055–0.4345 for a total of 4,029 parameter sets. For each of these sets, 100,000 lognormal variates were generated and placed into a 10,000 by 10 matrix. A viable parameter set was selected based on the same measure of “closeness” used in the original paper (pp. 187–188). For each viable set, the expected ground-up and expected aggregate loss ratios were calculated, and the overall estimate is a frequency-weighted average of the viable results.

In 2003, this procedure took around 8 hours; in 2014, after some optimization described in Section 7.1.2, it took a little under two minutes. Hardware and software advances in the intervening years have created an over 270 times increase in speed! Taking advantage, a more finely-grained grid was investigated. Using the results of the original bootstrap to focus a new grid, a second procedure was run with µ ranging from −0.8 to −0.2 and σ from 0.05 to 0.45, each moving in steps of 0.0025. Bootstrapping these 38,801 pairs took about 17 minutes on the same machine.

Unsurprisingly, the results shown in Table 2 are similar to those in the original paper. While recognizing parameter risk is always important, it is more so when dealing with rare events. Even a small thickening of the tail of a distribution may make a significant difference in the expected results excess of a threshold. When parameter risk is contemplated with this data, there is almost no increase in the ground-up expected loss but a significant increase to the highly tail-sensitive excess layer. Note how the more fine-grained algorithm’s ground-up estimate is only slightly different from that of the original algorithm, but the aggregate stop loss ratio estimate is noticeably larger.

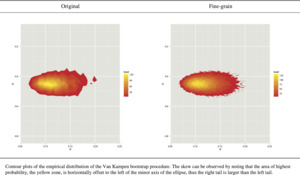

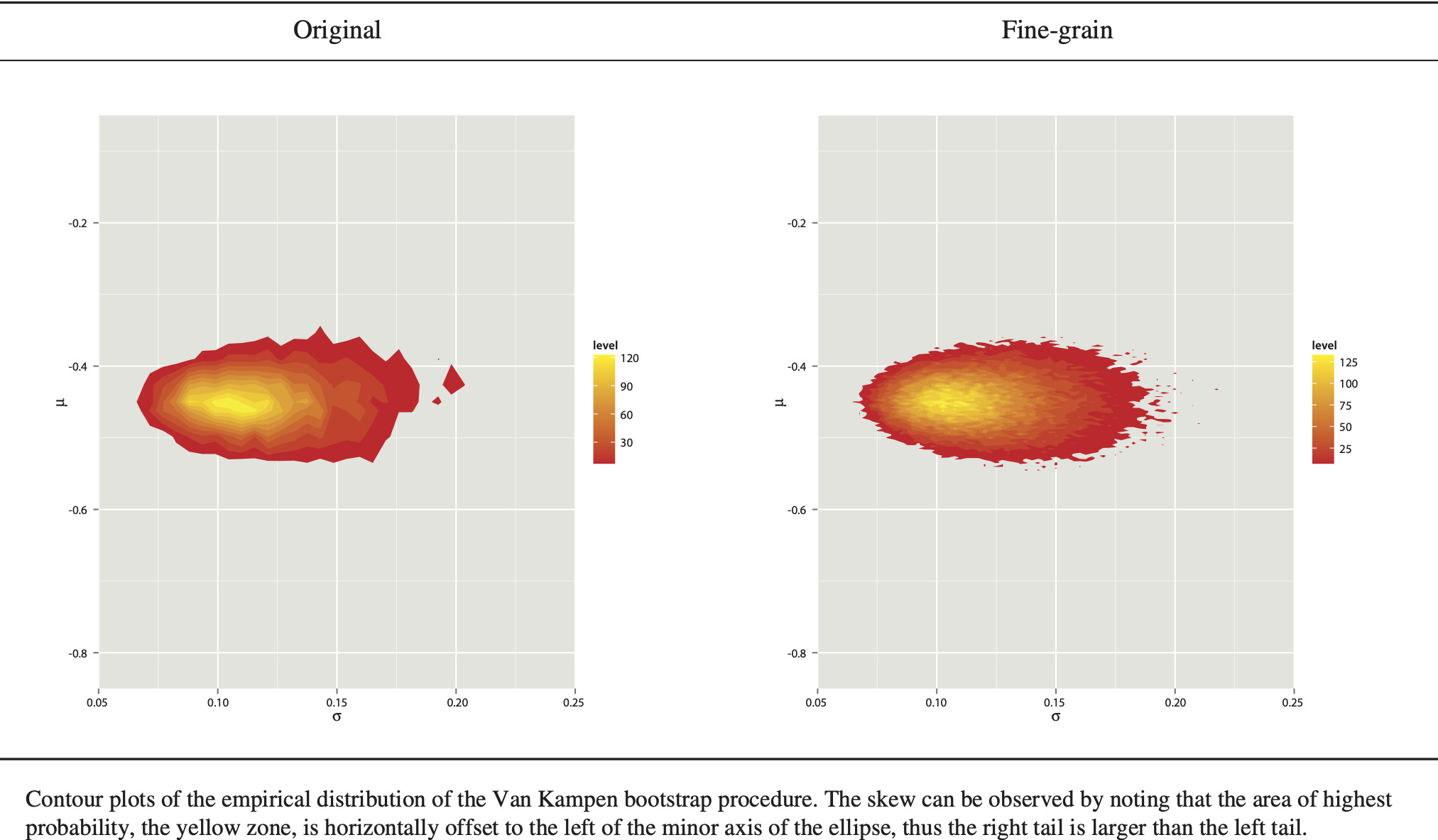

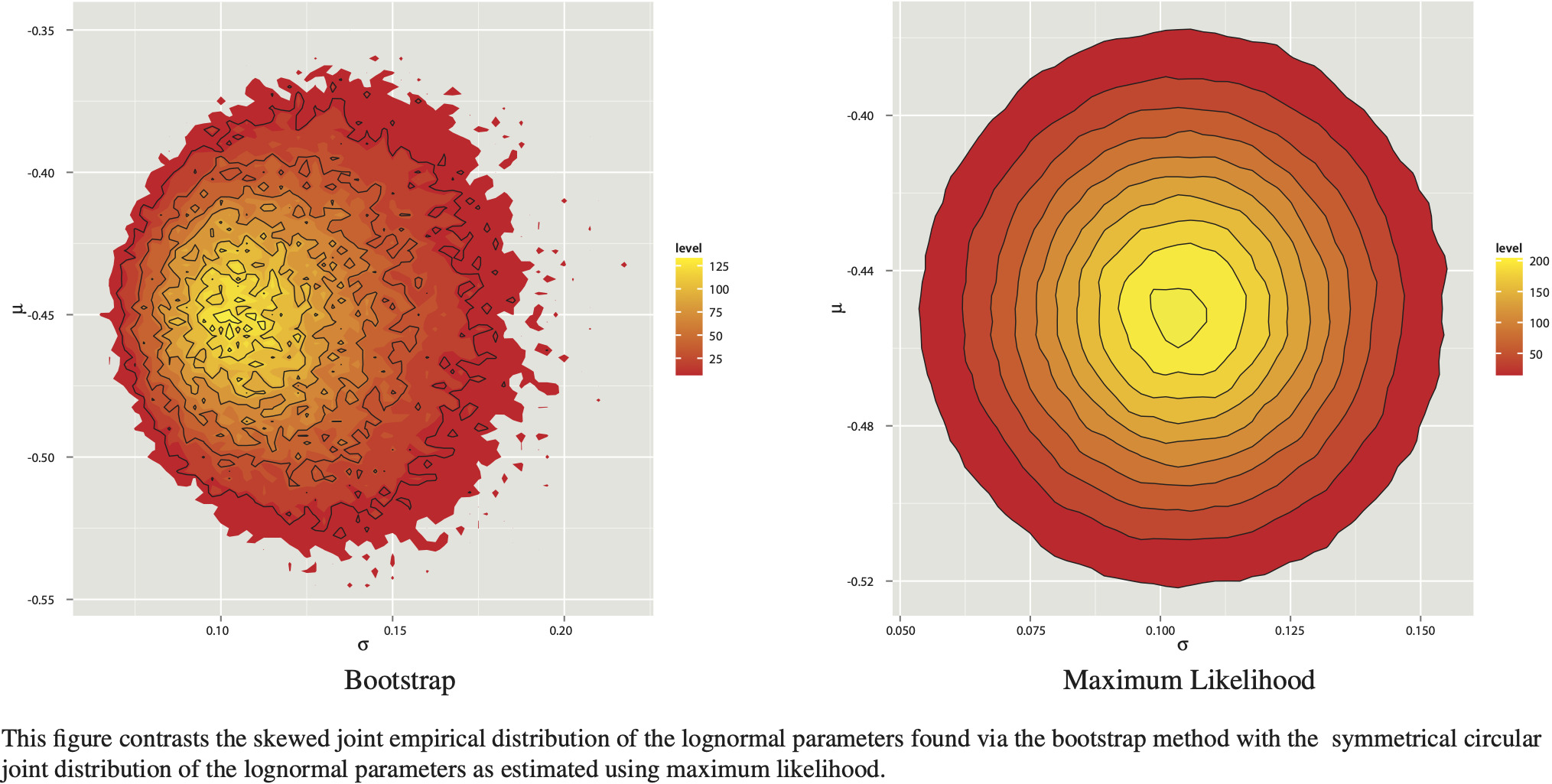

Visual inspection of data is always valuable (Anscombe 1973). When investigating parameter risk for two-parameter distributions, one useful tool is the contour plot—a two-dimensional projection of the three-dimensional surface of the joint distribution of the parameters. Contour images in this paper will reflect the increase in probability of a given set of parameters by a brightening of the color as it shades from red to yellow.

Contour plots of the joint empirical distribution of the close pairs of μ and σ for the original and fine-grained algorithms are plotted in Figure 1.[2] The skew of the distribution is clear.

3. Maximum likelihood

3.1. Introduction

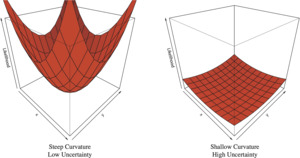

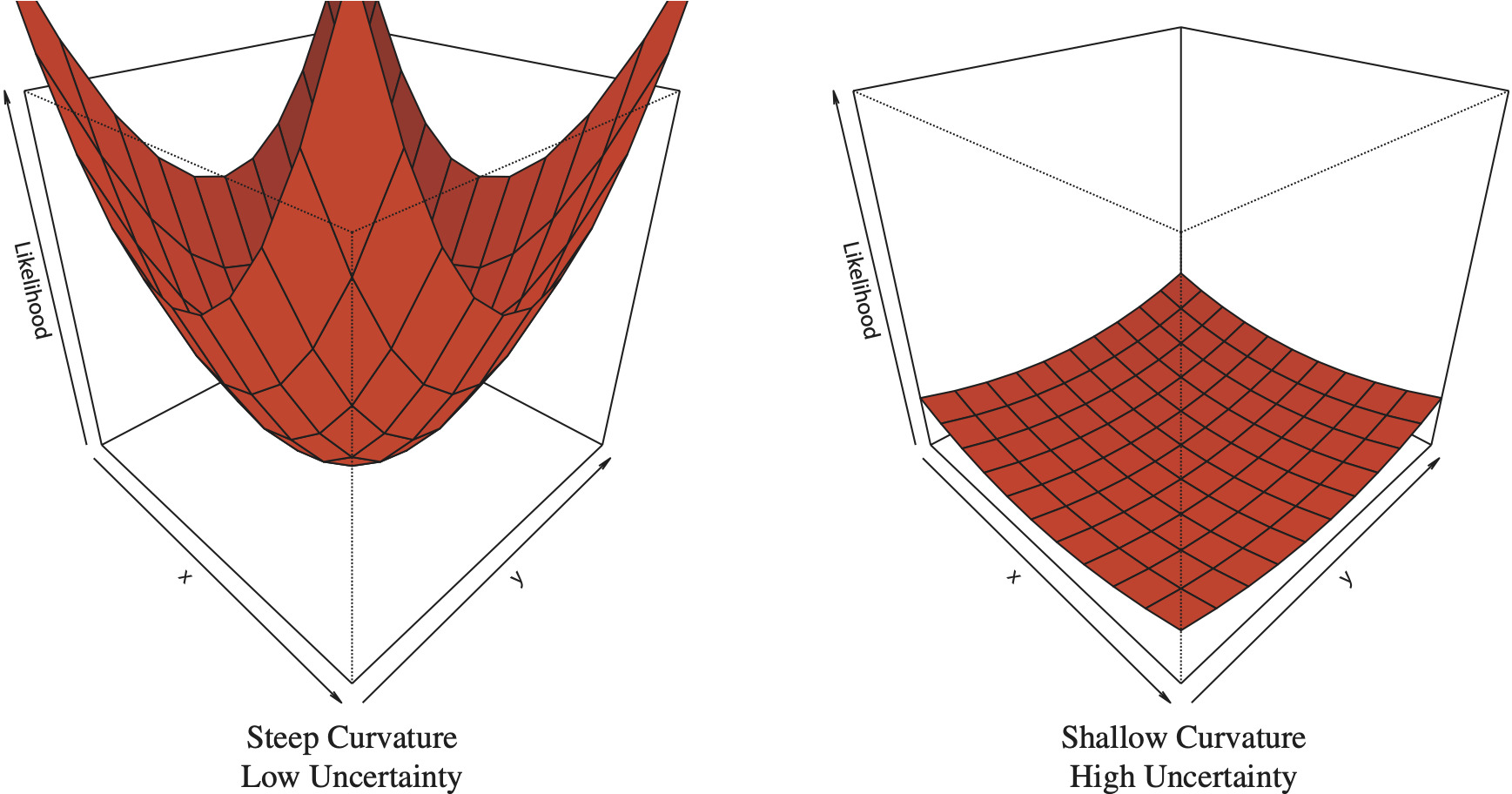

One of the most used tools in the actuarial toolbox is maximum likelihood estimation (MLE). Among the properties of MLE is that the estimated parameters have asymptotic normality—under general conditions, as the sample size increases, the distribution of the maximum likelihood estimate of the parameters tends to the multivariate normal with covariance matrix equal to the inverse of the Fisher information matrix (Klugman, Panjer, and Willmot 1998, §2.5.1). Assuming this normality, when performing MLE, the Hessian matrix at the point of convergence can be calculated and its inverse used to estimate parameter variances and covariances. An intuitive explanation for the relationship between the curvature of the log-likelihood and parameter uncertainty is as follows. The estimation process looks for a minimum for the negative log-likelihood by following the joint log-likelihood’s (hyper)surface. The Hessian matrix describes the curvature of a multivariate function, in this case, the log-likelihood. In a bivariate case, imagine the procedure as a marble rolling around on the log-likelihood surface. Compare the two surfaces in Figure 2. If the gradient at the found minimum is low, that would represent a shallow depression and it would be easy to “jostle” the marble to a different point nearby. This represents significant parameter uncertainty, as the true point could easily have been elsewhere. Conversely, if the gradient is high, the depression is deep and it would be difficult to move the marble. This implies the convergence to the found point is strong, and there is less parameter uncertainty.

3.2. Estimation and simulation

Given the assumption of normality, once a set of parameters is estimated by maximum likelihood, the Hessian can be used to generate the parameter variance-covariance matrix. Using these parameter means and covariances, sets of correlated parameters can be generated using a multivariate normal distribution—the asymptotic limiting distribution of maximum likelihood estimators. These correlated parameter sets are then used to draw one observation each from the distribution of interest. The collected observations now represent a sample with explicit recognition of parameter uncertainty, as the parameters generating the observations themselves were treated as random variables. Care needs to be taken, however, as the multivariate normal will sometimes generate parameters which themselves are illegal for the distribution in question. For example, the σ of a lognormal distribution must be greater than 0, yet a multivariate normal may generate a value less than 0. Similarly, a value very close to 0 may be generated when its reciprocal is needed, resulting in an astronomical value. There are a few ways to address this problem, the simplest being to ignore generated values which cause illegal results. It is also prudent, at times, to trim the mean to prevent edge cases from dominating the results. In all the MLE instances below, the mean is trimmed by 0.1%. As 1,000,000 samples are drawn for each of the MLE stochastic simulations, this means that the top and bottom 500 observations are removed and the statistics are calculated on the remaining 999,000 samples.

Continuing with the lognormal assumption, the results of solving for the parameters using MLE on the sample data are shown in Table 3.[3] A stochastic simulation was used to estimate the effects of parameter risk. Pairs of correlated μ and σ parameters were generated, each of which was then used for a stochastic lognormal draw of the observed ground-up loss ratio.[4] Given the drawn ground-up loss ratio, the resulting aggregate stop loss (ASL) ratio was simply the loss in a layer 2.5% excess of 72.5%. All means are trimmed as described above.

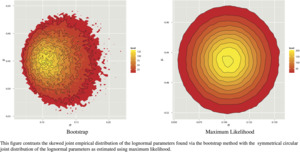

The simulated results, shown in Table 4, indicate almost no change from the original. This is more easily understood visually. Figure 3 compares re-scaled bootstrap and MLE-based contour plots, and also has explicitly plotted density isolines. When the plotted ranges are no longer restricted to the ranges in the original paper, the plot clearly contrasts the symmetrical uncorrelated nature of the MLE-based parameters with the skewed bootstrapped parameters. The isolines, along which each parameter set is equiprobable, further demonstrate the circular nature of the MLE-based results. This is predominantly due to the parameters being uncorrelated with each other.[5] When pairs of multivariate normal variables are uncorrelated (or ρ = 0 in the bivariate case), that is sufficient to imply their independence (Melnick and Tenenbein 1982). Therefore, under the lognormal assumption, since μ and σ are independent and uncorrelated, any pair of parameters which would result in a loss above the overall expectation is balanced by one equally probable which would cause an equally lower loss. When the parameters exhibit correlation, and possibly even more strongly in a skewed distribution, it is possible to obtain an observation which cannot be balanced by its equal and opposite “across the circle of probability.”

3.3. Other distributions

To investigate how correlation between the parameters may affect the results, the MLE fitting was done using other distributional families: the two parameter gamma and Weibull distributions and the three parameter Burr and inverse Burr distributions.[6] Information criteria, such as the Akaike Information Criteria (AICc)[7] are often used to compare models. When using information criteria, two points must be kept in mind. First, the criteria can only be compared between models based on the exact same data. Second, it is not the magnitude of the value, but the difference between the lowest value and all the others, which is important (Burnham and Anderson 2002, chap. 2). When used appropriately, accepted rules of thumb are that a difference of 0–2 implies model similarity, a difference of 4–7 shows that the model with the higher value has little support with respect to the model with the lower value, and larger differences imply that the higher-valued model should really not be considered at all (Burnham and Anderson 2002, 70–71; Spiegelhalter et al. 2002).

The best fits, as shown in Table 5, are the lognormal and the gamma, with the Weibull being acceptable. The Burr and inverse Burr are each more than 4 away from the minimum, showing little support. Nevertheless, it is instructive to see the difference between using a better model and a worse one. Don’t all the models arise from reasonable analyses and the same processes? The goodness-of-fit measures don’t really look that bad, do they? The AICc difference not even 5, right? What if the AICc’s were 18,204.3 and 18,208.9, wouldn’t that be “close enough”? These are questions the actuary may receive, if not ask of him or herself. Often, actuaries are exposed to “good” results and do not always have a yardstick for “bad” ones. Being able to recognize when processes fail and how that can affect analyses is an important part of actuarial analysis. Therefore, the stochastic parameter uncertainty process was applied to each of these models as well to provide a sense of poor performance.

Using the fitted parameter means and covariance matrix for each distribution, one million correlated observations were generated. Since using a multivariate normal to generate observations makes it possible to obtain negative values, the same filtering and trimming used for the lognormal were used for these distributions as well. Unfortunately, even with the trimming, the multivariate normal generated too many unacceptable parameter sets at times. This was due not only to “illegal” parameter sets but also to parameter sets which generated unreasonable results, such as ground-up loss ratios greater than 10196% for the inverse Burr. This further highlights the need for actuarial judgment, which should always temper raw algorithmic output, in any analysis. One of the largest contributions actuaries make is to understand, and provide the context for, the statistics which will be used. Yes, 10196% may be one observation out of one million that can mathematically arise from a random draw of underlying inverse Burr parameters, but it should it be considered a possible event? Certainly not! Therefore, not only were illegal parameters sets removed, but a judgment-based reasonability cap for the ground-up loss ratios of 300% was implemented as well. For the inverse Burr, there were 234,511 parameter sets which were removed from the analysis due to being invalid or in excess of the cap. For the Burr, there were 187,233 such observations.

Table 6 shows the results of the stochastic simulations for these distributions, and the inferences made using all, and then the 765,489 and 812,767 remaining reasonable, observations respectively. The “small” difference in the AICc of the parameters manifests as a very significant difference in the modeled results including parameter uncertainty. The results for the three-parameter distributions, even when trimmed, are just plain ridiculous. Even among the models which only reflect “realistic” observations, the results seem extreme when compared to the distributions with acceptable AICc measures.

3.4. Estimation and simulation on a log scale

Another common way to prevent negative parameters in a simulation is to reparameterize the distributions so that the optimization solves for an extremum of the log of the parameters, and this value is then exponentiated for use in simulation. This transformation also affects the shape of the joint distribution of the parameters in normal space, as parameters exhibiting symmetry on a log scale will not be symmetric on a normal scale. Table 7 displays the results of the simulations including the effects of parameter uncertainty using parameters fitted on a log scale. For the three distributions with acceptable goodness-of-fits, the lognormal continues to show little effect from parameter risk, the gamma now shows almost none as well, and the aggregate stop loss under the Weibull also demonstrates a smaller effect.

4. Bayesian analysis

4.1. Introduction

A third way to incorporate parameter risk is through a Bayesian model using Markov Chain Monte Carlo (MCMC) techniques. Roughly speaking, the Bayesian approach is that there exists an a priori estimate of the distribution of the parameters, which, when convoluted with the data, allows for the calculation of an a posteriori distribution of those parameters (Gelman et al. 2014, Chap. 1.3). The Bayesian model can be made even more sophisticated through explicit recognition of parameter dependency through hyperparameters. A model in which there exists a hierarchical structure of parameter dependency is called, understandably, a hierarchical model (Kruschke 2015, 221–223). Creating a model with such structure allows the joint probability model to reflect potential dependencies between the parameters (Gelman et al. 2014, 101). Both simple and hierarchical models explicitly recognize parameter risk by generating a posterior distribution around the parameters. Moreover, distributions of the statistics of interest, such as loss rations, can also be easily generated with explicit reflection of parameter risk.

While the Bayesian concept is relatively simple, for almost two centuries its application was difficult, as the convolution often required the calculation of integrals without nice analytic solutions. In the past few decades, advances in both algorithms and computer hardware have made these computations much simpler. The method used in this paper, MCMC, takes advantage of certain properties of Markov chains that allow it to converge to the posterior distribution without fully calculating some of the more difficult integrals. For more explanation, the interested reader is directed to Kruschke (2015, chap. 7) and Gelman et al. (2014, chap. 11).

4.2. Simple models

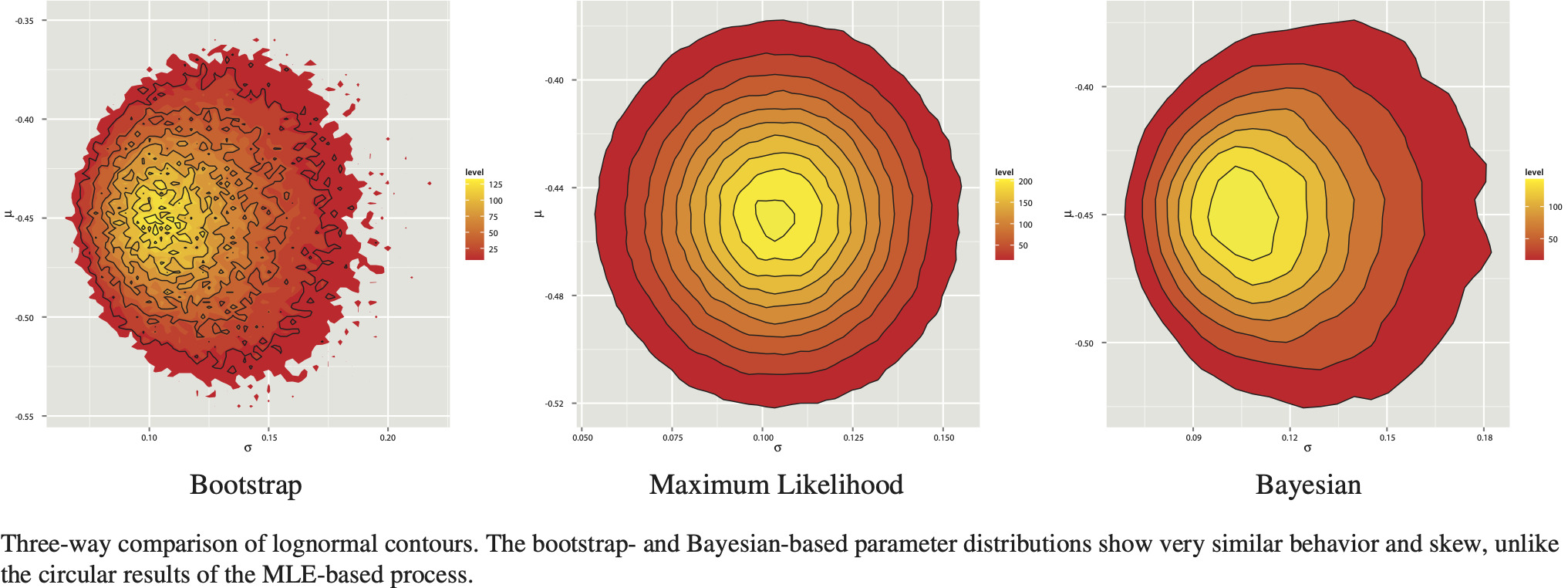

Initially, a simple model was used. This model placed a weakly informative prior on both μ and σ and allowed the data to “lead” the posterior. More specifically, using JAGS (Plummer 2003), not only were samples of μ and σ generated, but a stochastic ground-up loss observation based on the generated μ and σ was drawn as well.[8] The corresponding aggregate stop loss was calculated as a layer of 2.5% excess of 72.5%. For the lognormal distribution, the generated μ and σ parameters remain effectively uncorrelated—about −0.6672%. Nevertheless, the effect of parameter uncertainty on the aggregate stop loss, as shown in the first section of Table 8, is significant. Looking at a contour plot, the reason becomes clear. The skew that was seen using the bootstrap routine of Section 2 has returned, as shown in Figure 4.

For completeness, similar Bayesian analyses were done for the other distributions brought in Section 3.3.[9] As in the MLE case, comparing the models requires a goodness-of-fit measure. It remains an open question as to which measure to use to robustly compare Bayesian models. One of the more accepted measures is the deviance information criterion (DIC), a Bayesian analogue to the Akaike information criterion (Spiegelhalter et al. 2002). However, a more recently defined measure, the widely applicable information criterion (Watanabe 2013), also called the Watanabe-Akaike information criteria (sharing the acronym WAIC), has been gaining traction as being a more fully Bayesian measure than DIC (Gelman et al. 2014, 173–174). This paper will use WAIC as the goodness-of-fit measure. As shown in the remainder of Table 8, when using the simple model framework, the spread in both results and goodness-of-fit statistics for most families is smaller than when using maximum likelihood—all models are within the acceptable rule of thumb—but the lognormal distribution remains the model of choice. The most surprising result is the gamma model, which now shows the counterintuitive result of a reduced expected aggregate stop loss ratio! What is also clear is that the Burr and inverse Burr no longer result in unacceptable results.

4.3. Hierarchical models

Another benefit of the Bayesian procedure is the capability to express a hierarchical structure in the model. Instead of weakly informative priors directly on μ and σ, a multivariate normal distribution, itself with a weakly informed prior on the mean and the covariance matrix, was used to generate correlated pairs (μ, σ) which were then used to generate the lognormal draws. Unfortunately, when adding the multivariate normal structure into JAGS, which uses Gibbs sampling, the generated parameters were so auto-correlated that convergence was not achieved in reasonable time—even using thinning rates of over 1000. This is a property of Gibbs sampling in that it does not deal well with highly correlated posteriors (Stan Development Team 2014b, vi). Therefore, the model was rebuilt in Stan (Stan Development Team 2014a)—a Bayesian modeling language whose sampler is not Gibbs but Hamiltonian Monte Carlo (Gelman et al. 2014 p. 12.4–12.6).[10] The No-U-Turn-Sampler used by Stan is much more robust to highly autocorrelated parameters (Hoffman and Gelman 2014). To provide reassurance that the model was built correctly, the non-hierarchical lognormal model was also programmed in Stan to compare the fitted results with those of JAGS.

The ability to expressly consider a hierarchical structure becomes more valuable when contemplating the gamma distribution. The simple Bayesian gamma model showed the counter-intuitive reduced aggregate stop loss ratio. As the gamma parameters are extremely correlated with each other—99.7272% using maximum likelihood and 99.7251% in the simple Bayesian model—it makes sense to consider a hierarchical Bayesian model to directly generate correlated gamma parameters.

Table 9 shows that adding hierarchical hyperpriors to expressly model correlation between the lognormal μ and σ reduces the effect of parameter uncertainty in the aggregate stop loss for the lognormal distribution with almost no change to the goodness-of-fit as measured by WAIC. Expressly modeling the correlation in the gamma distribution had a much larger effect. Now, the effect of parameter risk much more in line with expectations—close to that of the hierarchical lognormal—and the goodness-of-fit measure is greatly improved and much closer to that of the lognormal. A comparison of the contour plots of the joint distributions of the parameter pairs between the simple and hierarchical models is shown in Figure 5.

5. Comparison and conclusions

5.1. Comparisons

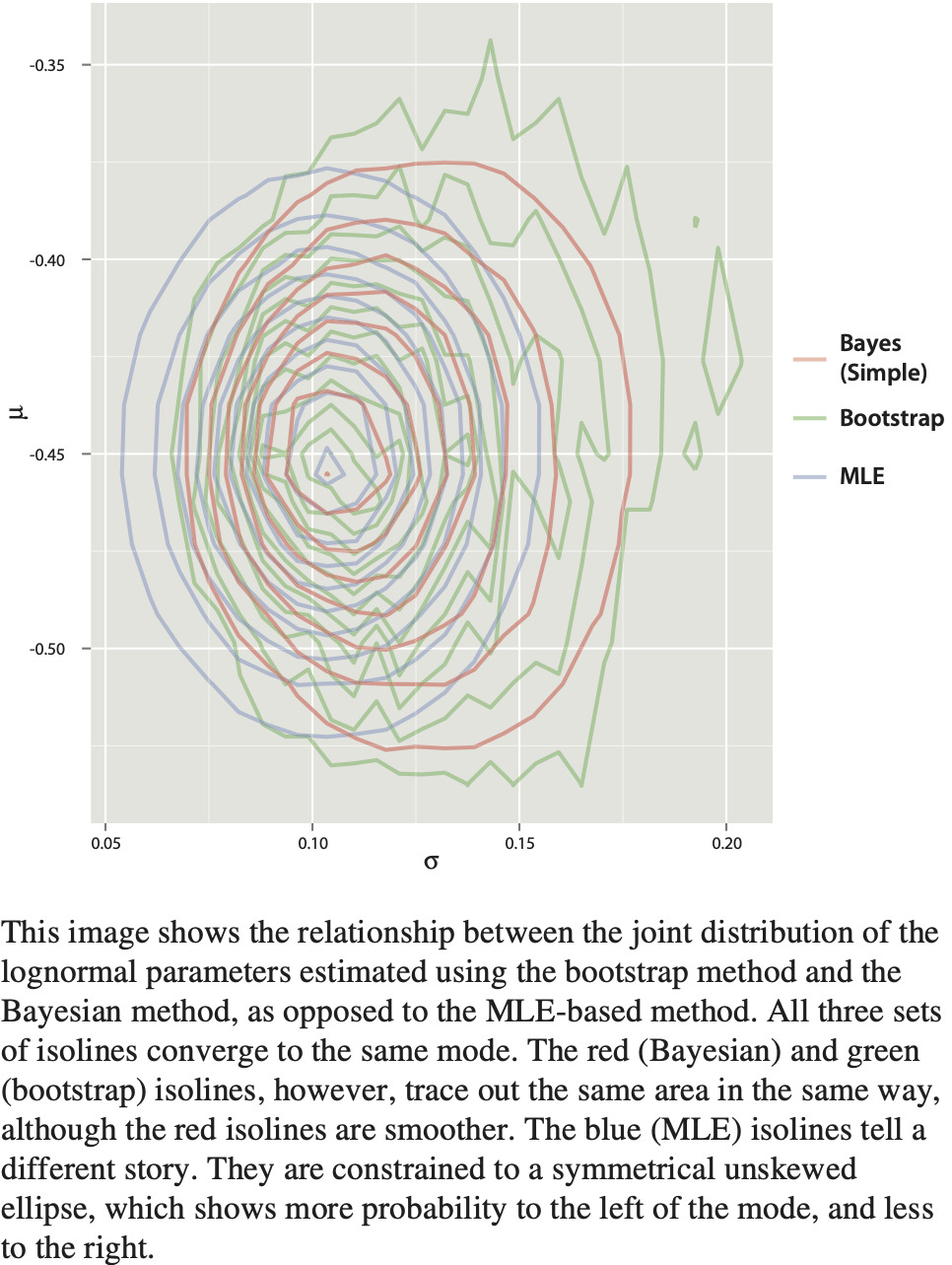

One of the first observations that can be made is that the bootstrap results appear very similar to the Bayesian results in both magnitude and distribution—especially with the simple model. Overlaying the contours of the distributed parameters, as shown in Figure 6, highlights the relationship between the methods. The area of highest density is shared by all three methods. However, while the Bayesian contours show the same skew and coverage as the bootstrap contours, the maximum likelihood contours are constrained to a symmetric ellipse centered on the area of highest density.

This near-identicality is not surprising. Van Kampen’s bootstrap is actually an example of Approximate Bayesian Computation (ABC). Described in theory by Rubin (1984), and named by Beaumont, Zhang, and Balding (2002), ABC comprises methods where approximations to Bayesian posteriors are calculated by simulating outcomes based on parameters selected from their prior distributions, rejecting parameter sets whose outcomes lie outside an accepted tolerance, and estimating the posterior distribution based on the remaining “accepted” samples.[11] This method circumvents the need to calculate any likelihood; all that is needed is the prior, summary statistics, and a tolerance. This is precisely what Van Kampen’s bootstrap does: sample from the uniform grid (prior on parameters), reject outcomes whose summary statistics are not close (rejection outside tolerance), and calculate results based on weighted averages of the remaining samples (posterior estimation).

Nevertheless, the truly Bayesian procedure has at least two benefits: 1) studies have shown that given data sets allowing for true MCMC, the MCMC results are more accurate than those of rejection-based methods (Beaumont, Zhang, and Balding 2002; Bornn et al. 2014); and 2) it allows for easier to expression and investigation of hierarchical relationships (Turner and Van Zandt 2014, 6–7). Therefore, where an analytic expression of the needed likelihood(s) are not that difficult to calculate, it makes sense to use the fully Bayesian technique.

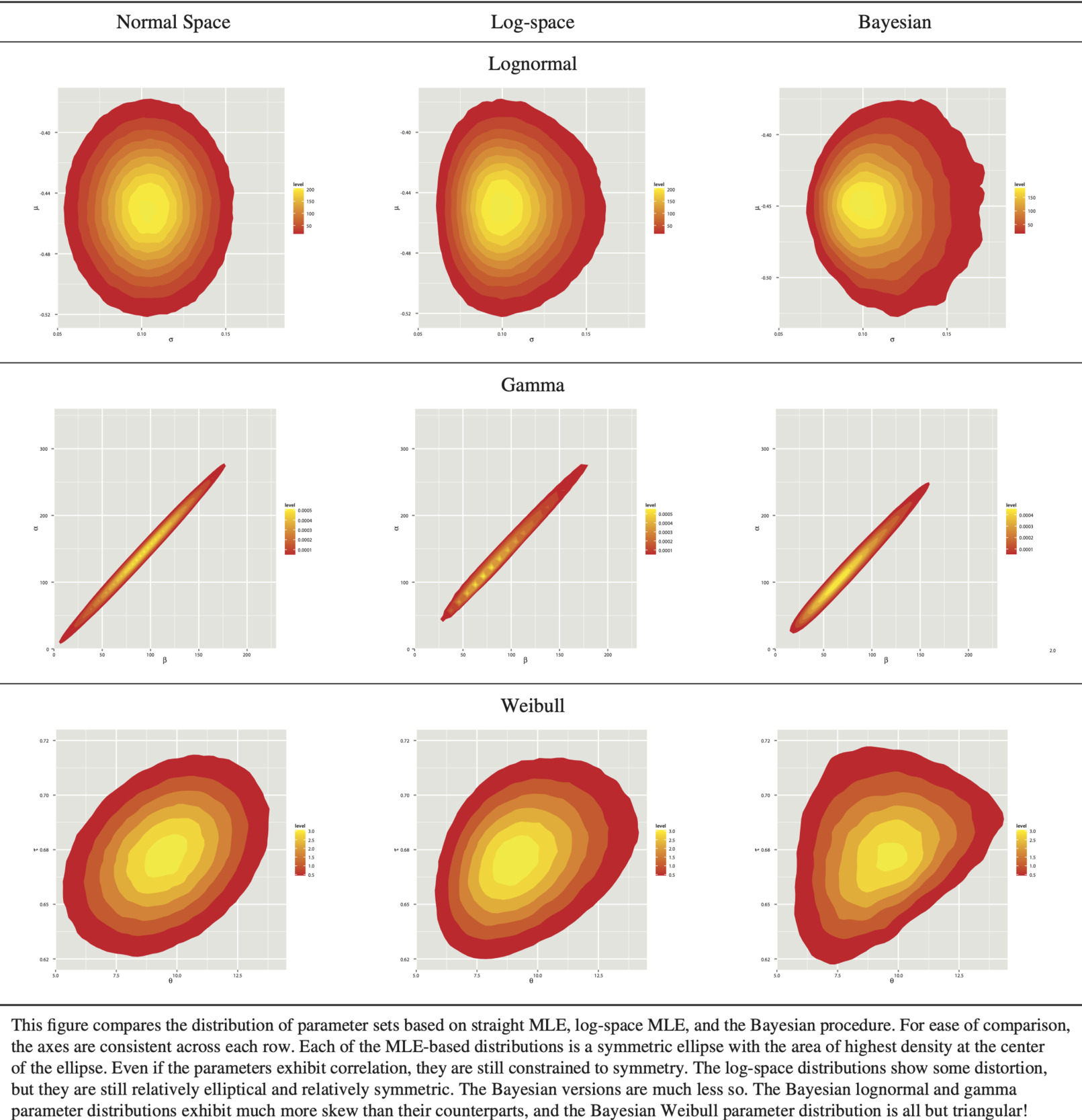

Figure 7 compares the joint distribution of the generated parameter sets under MLE, log-space MLE, and the Bayesian framework for the two-parameter distributions. The joint distribution of the parameters generated by maximum likelihood methods are constrained to a symmetric elliptical distribution. Even when solving for parameters in log-space, the resulting normal space parameters are still mainly elliptical with some distortion. The Bayesian models, however, are not constrained to symmetric ellipticity.

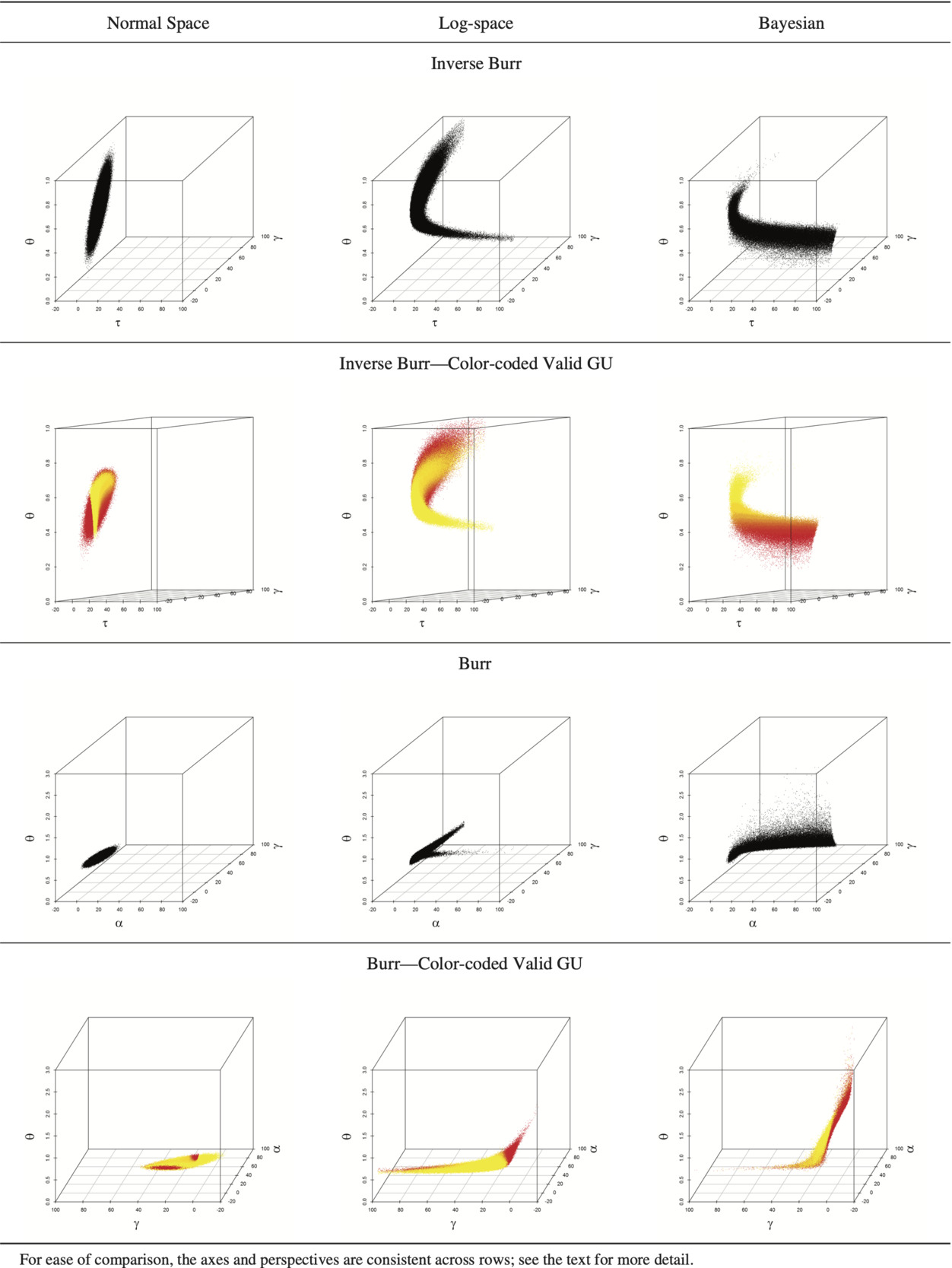

This is even clearer for the three-parameter distributions. Figure 8 compares the parameter clouds of the distributions; each point representing a parameter triplet. The colored version of the clouds have fewer points than the monochrome versions, as they are composed only of the points which led to valid values. For these plots, the brightness and color of the points reflect the magnitude of the resulting value; e.g., bright yellow triplets will have a correspondingly larger expected ground-up loss ratio than the dull red triplets.

There are two important takeaways from Figure 8. The first regards the structure of the clouds. The normal space MLE clouds are clearly constrained to be ellipsoids. The log-space MLE clouds break from that constraint but seem to be more “stretched” and “bent” ellipsoids than entirely different shapes. The Bayesian process, however, allows the data to determine the shape of the posterior distribution and results in strangely-shaped surfaces which expand to fill the parameter space. The inverse Burr traces a sheet that inhabits different sections of θ and γ space than the other two inverse Burr clouds. The Burr cloud is more a sheet with a small tail that extends down γ space. The second takeaway is the relationship between parameter and value. In the normal ellipsoid it is difficult to see. In the log-space versions it is somewhat clearer to see a relationship between the parameter triplet’s position and resulting mean value. However, the relationship between structure and value appear clearest in the Bayesian clouds. The inverse Burr’s expectation’s relationship with θ is clearly seen. For the Burr, most of the parameter cloud is a thin sheet, yellow on one side and red on the other.

Looking at a summary of results, shown in Table 10, the Bayesian process allows the fit for each family to affect the ground-up loss ratio minimally yet show similar leveraged effects on the aggregate stop loss. Also, even the families with worse fits were not as unreasonable as they were using maximum likelihood. Another key difference is in identifying the “best” family to use. Using maximum likelihood estimation, it was difficult to choose between the lognormal and the gamma distributions based on the goodness-of-fit. This was especially troubling as the effect of parameter risk was markedly different between the two. Using the Bayesian process the decision is clearer. While the WAIC metrics for both the hierarchical lognormal and hierarchical gamma are very close, so too are their effects on both the ground-up and aggregate losses. In hindsight, for the maximum likelihood method, it is almost as if the distortion caused by symmetry of the lognormal was balanced by the distortion caused by the extreme correlation of the gamma, leading to the similar AICc values. This is less of an issue with the Bayesian process, as the area of interest could be more fully investigated without being subject to the elliptical constraints.

5.2. Conclusions

Reviewing the three methods discussed, the bootstrap is an example of approximate Bayesian computation. Comparing the maximum likelihood and Bayesian techniques, while basic MLE may be simpler to explain, it cannot escape the “prison of ellipticity,” even when using log scale transformations of the parameters. With the distribution of the parameters being only asymptotically normal, and especially when dealing with small ratios of observations to parameters, MLE’s ellipticity prevents it from fully investigating potential parameter sets and causes it to generate illegal values if it is too close to a boundary condition. The Bayesian method’s ability to “trace out” a proper posterior based on the supplied data, and for the true Bayesian procedure to easily expressly consider dependence structures within the data and parameters, makes a strong case for actuaries to increase their use of Bayesian techniques when analyzing risk. In cases where the likelihood is difficult to construct, using an approximate Bayesian computation would seem a worthwhile endeavor.

For the specific data in this paper, the results of the MLE method are inferior to the Bayesian method. The lognormal and gamma models with similar goodness-of-fit metrics provide different outcomes, and the other families do not supply an acceptable fit. The Bayesian methods, especially the hierarchical models, provide clearer and more consistent indications as to the presence and magnitude of parameter risk. It seems clear that for this data set parameter risk plays a significant role, and estimates which do not contemplate it will understate the risk in the aggregate stop loss contract.

One area of future research would be in enhancing the MLE method. As discussed in Venter and Sahasrabuddhe (2012, §3.2.3), with few data points, the asymptotic properties of maximum likelihood estimation do not manifest. Their suggestion is to generate the parameters via a gamma distributions with the correlation structure of the multivariate normal. Using a normal copula with gamma marginals is one way to achieve this. Other research opportunities include comparing data sets with different properties and explicitly modeling a temporal component to reflect parameter risk resulting from changes over time.

Acknowledgments

The author wishes to thank Glenn Meyers, Andrew Gelman, Bob Carpenter, Ben Goodrich, Michael Betancourt, and the anonymous reviewers for their helpful comments and suggestions. Any errors in the paper are solely the author’s own.