1. Introduction and motivation

1.1. Claims reserving for several correlated run-off subportfolios

Often, claim reserves are the largest position on the liability side of the balance sheet of a non-life insurance company. Therefore, given the available information about the past development, the prediction of adequate claim reserves as well as the quantification of the uncertainties in these reserves is a major task in actuarial practice and science (e.g., Wüthrich and Merz (2008), Casualty Actuarial Society (2001), or Teugels and Sundt (2004)).

In this paper we consider the claim reserving problem in a multivariate context. More precisely, we consider a portfolio consisting of several correlated run-off subportfolios. On some subportfolios we use the chain-ladder (CL) method and on the other subportfolios we use the additive loss reserving (ALR) method to estimate the claim reserves. Since in actuarial practice the conditional mean square error of prediction (MSEP) is the most popular measure to quantify the uncertainties, we provide an MSEP estimator for the overall reserves. This means that we provide a first step towards an estimate of the overall MSEP for the predictor of the ultimate claims for aggregated subportfolios using different claims reserving methods for different subportfolios. These studies of uncertainties are crucial in the development of new solvency guidelines where one exactly quantifies the risk profiles of the different insurance companies.

1.2. Multivariate claims reserving methods

The simultaneous study of several correlated run-off subportfolios is motivated by the fact that:

-

In practice it is quite natural to subdivide a non-life run-off portfolio into several correlated subportfolios, such that each subportfolio satisfies certain homogeneity properties (e.g., the CL assumptions or the assumptions of the ALR method).

-

It addresses the problem of dependence between run-off portfolios of different lines of business (e.g., bodily injury claims in auto liability and in general liability business).

-

The multivariate approach has the advantage that by observing one run-off subportfolio we learn about the behavior of the other run-off subportfolios (e.g., subportfolios of small and large claims).

-

It resolves the problem of additivity (i.e., the estimators of the ultimate claims for the whole portfolio are obtained by summation over the estimators of the ultimate claims for the individual run-off subportfolios).

Holmberg (1994) was probably one of the first to investigate the problem of dependence between run-off portfolios of different lines of business. Braun (2004) and Merz and Wüthrich (2007, 2008) generalized the univariate CL model of Mack (1993) to the multivariate CL case by incorporating correlations between several run-off subportfolios. Another feasible multivariate claims reserving method is given by the multivariate ALR method proposed by Hess, Schmidt, and Zocher (2006) and Schmidt (2006b) which is based on a multivariate linear model. Under the assumptions of their multivariate ALR model Hess, Schmidt, and Zocher (2006) and Schmidt (2006b) derived a formula for the Gauss-Markov predictor for the nonobservable incremental claims which is optimal in terms of the classical optimality criterion of minimal expected squared loss. Merz and Wüthrich (2009) derived an estimator for the conditional MSEP in the multivariate ALR method using the Gauss-Markov predictor proposed by Hess, Schmidt, and Zocher (2006) and Schmidt (2006b).

1.3. Combination of the multivariate CL and ALR methods

In the sequel we provide a framework in which we combine the multivariate CL model and the multivariate ALR model into one multivariate model. The use of different reserving methods for different subportfolios is motivated by the fact that

-

in general not all subportfolios satisfy the same homogeneity assumptions; and/or

-

sometimes we have a priori information (e.g., premium, number of contracts, external knowledge from experts, data from similar portfolios, market statistics) for some selected subportfolios which we want to incorporate into our claims reserving analysis.

That is, we use the CL method for a subset of subportfolios on the one hand and we use the ALR method for the complementary subset of subportfolios on the other hand. From this point of view it is interesting to note that the CL method and the ALR method are very different in some aspects and therefore exploit differing features of the data belonging to the individual subportfolios:

-

The CL method is based on cumulative claims whereas the ALR method is applied to incremental claims.

-

Unlike the CL method, the ALR method combines past observations in the upper triangle with external knowledge from experts or with a priori information.

-

The ALR method is more robust to outliers in the observations than the CL method.

Organization of this paper. In Section 2 we provide the notation and data structure for our multivariate framework. In Section 3 we define the combined model and derive the properties of the estimators for the ultimate claims within the framework of the combined method. In Section 4 we give an estimation procedure for the conditional MSEP in the combined method and our main results are presented in Estimator 4.7 and Estimator 4.8. Section 5 is dedicated to the estimation of the model parameters, and, finally, in Section 6 we give an example. An interested reader will find proofs of the results in Section 7.

2. Notation and multivariate framework

We assume that the subportfolios consist of N ≥ 1 run-off triangles of observations of the same size. However, the multivariate CL method and the multivariate ALR method can also be applied to other shapes of data (e.g., run-off trapezoids). In these N triangles the indices

-

n, 1 ≤ n ≤ N, refer to subportfolios (triangles),

-

i, 0 ≤ i ≤ I, refer to accident years (rows),

-

j, 0 ≤ j ≤ J = I, refer to development years (columns).

The incremental claims (i.e., incremental payments, change of reported claim amounts or number of newly reported claims) of run-off triangle n for accident year i and development year j are denoted by $X_{i, j}^{(n)}$, and cumulative claims (i.e., cumulative payments, claims incurred or total number of reported claims) are given by

C_{i, j}^{(n)}=\sum_{k=0}^{j} X_{i, k}^{(n)} . \tag{1}

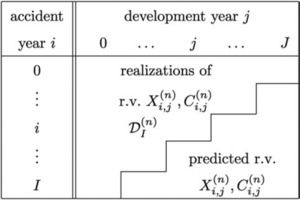

Figure 1 shows the claims data structure for N individual claims development triangles described above.

Usually, at time I, we have observations

\mathcal{D}_{I}^{(n)}=\left\{C_{i, j}^{(n)} ; i+j \leq I\right\}, \tag{2}

for all run-off subportfolios n ∈ {1, . . . , N}. This means that at time I (calendar year I) we have a total of observations over all subportfolios given by

\mathcal{D}_{I}^{N}=\bigcup_{n=1}^{N} \mathcal{D}_{I}^{(n)}, \tag{3}

and we need to predict the random variables in its complement

\mathcal{D}_{I}^{N, c}=\left\{C_{i, j}^{(n)} ; i \leq I, i+j>I, 1 \leq n \leq N\right\} . \tag{4}
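The data structure above can be sketched numerically. The following is a minimal illustration for a single subportfolio, assuming a small made-up incremental triangle `X` (the figures are hypothetical and not taken from the paper); it builds the cumulative claims and marks the observed cells with i + j ≤ I.

```python
import numpy as np

# Hypothetical incremental claims X[i, j] for one subportfolio (I = J = 3).
X = np.array([
    [100.0, 50.0, 20.0, 10.0],
    [110.0, 55.0, 25.0, 12.0],
    [120.0, 60.0, 30.0, 15.0],
    [130.0, 65.0, 35.0, 18.0],
])
I = X.shape[0] - 1

# Cumulative claims: C[i, j] is the sum of X[i, k] over k = 0..j.
C = np.cumsum(X, axis=1)

# Cells observed at time I satisfy i + j <= I (upper triangle incl. diagonal).
i_idx, j_idx = np.indices(X.shape)
observed = (i_idx + j_idx) <= I

# Cells in the complement (i + j > I) are the ones that must be predicted.
C_observed = np.where(observed, C, np.nan)
```

In a real application only the observed cells would be available; the full array is used here only to make the masking visible.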

In the sequel we assume without loss of generality that we use the multivariate CL method for the first K (i.e., K ≤ N) run-off triangles n = 1, . . . , K and the multivariate ALR method for the remaining n = K + 1, . . . , N triangles. Therefore, we introduce the following vector notation

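\mathbf{C}_{i, j}^{\mathrm{CL}}=\left(\begin{array}{c} C_{i, j}^{(1)} \\ \vdots \\ C_{i, j}^{(K)} \end{array}\right), \quad \mathbf{X}_{i, j}^{\mathrm{CL}}=\left(\begin{array}{c} X_{i, j}^{(1)} \\ \vdots \\ X_{i, j}^{(K)} \end{array}\right), \quad \mathbf{C}_{i, j}^{\mathrm{AD}}=\left(\begin{array}{c} C_{i, j}^{(K+1)} \\ \vdots \\ C_{i, j}^{(N)} \end{array}\right) \quad \text { and } \quad \mathbf{X}_{i, j}^{\mathrm{AD}}=\left(\begin{array}{c} X_{i, j}^{(K+1)} \\ \vdots \\ X_{i, j}^{(N)} \end{array}\right) \tag{5}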

for all i ∈ {0, . . . , I} and j ∈ {0, . . . , J}. In particular, this means that the cumulative/incremental claims of the whole portfolio are given by the vectors

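\mathbf{C}_{i, j}=\binom{\mathbf{C}_{i, j}^{\mathrm{CL}}}{\mathbf{C}_{i, j}^{\mathrm{AD}}} \quad \text { and } \quad \mathbf{X}_{i, j}=\binom{\mathbf{X}_{i, j}^{\mathrm{CL}}}{\mathbf{X}_{i, j}^{\mathrm{AD}}} . \tag{6}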

We define the first k + 1 columns of CL observations by

\mathcal{B}_{k}^{K}=\left\{\mathbf{C}_{i, j}^{\mathrm{CL}} ; i+j \leq I \text { and } 0 \leq j \leq k\right\} \tag{7}

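for k ∈ {0, . . . , J}. Finally, we define the L-dimensional column vector consisting of ones by $\mathbf{1}_{L}=(1, \ldots, 1)^{\prime}$ and denote by

\mathrm{D}(\mathbf{a})=\left(\begin{array}{ccc} a_{1} & & 0 \\ & \ddots & \\ 0 & & a_{L} \end{array}\right) \quad \text { and } \quad \mathrm{D}(\mathbf{c})^{b}=\left(\begin{array}{ccc} c_{1}^{b} & & 0 \\ & \ddots & \\ 0 & & c_{L}^{b} \end{array}\right) \tag{8}

the L × L diagonal matrices of the L-dimensional vectors $\mathbf{a}=(a_{1}, \ldots, a_{L})^{\prime}$ and $(c_{1}^{b}, \ldots, c_{L}^{b})^{\prime}$, where $\mathbf{c}=(c_{1}, \ldots, c_{L})^{\prime}$ and b ∈ ℝ.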

3. Combined multivariate CL and ALR method

The following model is a combination of the multivariate CL model and the multivariate ALR model presented in Merz and Wüthrich (2008) and Merz and Wüthrich (2009), respectively.

Model Assumptions 3.1 (Combined CL and ALR model)

-

Incremental claims of different accident years are independent.

-

There exist K-dimensional constants

\begin{aligned} \mathbf{f}_{j} & =\left(f_{j}^{(1)}, \ldots, f_{j}^{(K)}\right)^{\prime} \quad \text { and } \\ \boldsymbol{\sigma}_{j}^{\mathrm{CL}} & =\left(\sigma_{j}^{(1)}, \ldots, \sigma_{j}^{(K)}\right)^{\prime} \end{aligned} \tag{9}

with $f_{j}^{(k)}>0$, $\sigma_{j}^{(k)}>0$ and K-dimensional random variables

\varepsilon_{i, j+1}^{\mathrm{CL}}=\left(\varepsilon_{i, j+1}^{(1)}, \ldots, \varepsilon_{i, j+1}^{(K)}\right)^{\prime}, \tag{10}

such that for all i ∈ {0, . . . , I} and j ∈ {0, . . . , J − 1} we have

\mathbf{C}_{i, j+1}^{\mathrm{CL}}=\mathrm{D}\left(\mathbf{f}_{j}\right) \cdot \mathbf{C}_{i, j}^{\mathrm{CL}}+\mathrm{D}\left(\mathbf{C}_{i, j}^{\mathrm{CL}}\right)^{1 / 2} \cdot \mathrm{D}\left(\varepsilon_{i, j+1}^{\mathrm{CL}}\right) \cdot \sigma_{j}^{\mathrm{CL}} . \tag{11}

-

There exist (N − K)-dimensional constants

\begin{aligned} \mathbf{m}_{j} & =\left(m_{j}^{(1)}, \ldots, m_{j}^{(N-K)}\right)^{\prime} \\ \sigma_{j-1}^{\mathrm{AD}} & =\left(\sigma_{j-1}^{(K+1)}, \ldots, \sigma_{j-1}^{(N)}\right)^{\prime}, \end{aligned} \tag{12}

with $\sigma_{j-1}^{(n)}>0$ and (N − K)-dimensional random variables

\varepsilon_{i, j}^{\mathrm{AD}}=\left(\varepsilon_{i, j}^{(K+1)}, \ldots, \varepsilon_{i, j}^{(N)}\right)^{\prime}, \tag{13}

such that for all i ∈ {0, . . . , I} and j ∈ {1, . . . , J} we have

\mathbf{X}_{i, j}^{\mathrm{AD}}=\mathrm{V}_{i} \cdot \mathbf{m}_{j}+\mathrm{V}_{i}^{1 / 2} \cdot \mathrm{D}\left(\varepsilon_{i, j}^{\mathrm{AD}}\right) \cdot \boldsymbol{\sigma}_{j-1}^{\mathrm{AD}}, \tag{14}

where $\mathrm{V}_{i}$ are deterministic positive definite symmetric (N − K) × (N − K) matrices.

-

The N-dimensional random variables

\varepsilon_{i, j+1}=\binom{\varepsilon_{i, j+1}^{\mathrm{CL}}}{\varepsilon_{i, j+1}^{\mathrm{AD}}} \quad \text { and } \quad \varepsilon_{k, l+1}=\binom{\varepsilon_{k, l+1}^{\mathrm{CL}}}{\varepsilon_{k, l+1}^{\mathrm{AD}}}

are independent for i ≠ k or j ≠ l, with $E\left[\varepsilon_{i, j+1}\right]=\mathbf{0}$ and

\begin{aligned} \operatorname{Cov} & \left(\varepsilon_{i, j+1}, \varepsilon_{i, j+1}\right) \\ & =E\left[\varepsilon_{i, j+1} \cdot \varepsilon_{i, j+1}^{\prime}\right] \\ & =\left(\begin{array}{ccccc} 1 & \rho_{j}^{(1,2)} & \cdots & \cdots & \rho_{j}^{(1, N)} \\ \rho_{j}^{(2,1)} & 1 & \cdots & \cdots & \rho_{j}^{(2, N)} \\ \vdots & \vdots & \ddots & & \vdots \\ \vdots & \vdots & & \ddots & \vdots \\ \rho_{j}^{(N, 1)} & \rho_{j}^{(N, 2)} & \cdots & \cdots & 1 \end{array}\right), \end{aligned} \tag{15}

for fixed $\rho_{j}^{(n, m)} \in(-1,1)$ for n ≠ m.

We introduce the notation

\begin{aligned} \sigma_{j} & =\left(\sigma_{j}^{\mathrm{CL}}, \sigma_{j}^{\mathrm{AD}}\right)^{\prime}, \\ \Sigma_{j} & =E\left[\mathrm{D}\left(\varepsilon_{i, j+1}\right) \cdot \sigma_{j} \cdot \sigma_{j}^{\prime} \cdot \mathrm{D}\left(\varepsilon_{i, j+1}\right)\right], \\ \Sigma_{j}^{(C)} & =E\left[\mathrm{D}\left(\varepsilon_{i, j+1}^{\mathrm{CL}}\right) \cdot \sigma_{j}^{\mathrm{CL}} \cdot\left(\sigma_{j}^{\mathrm{CL}}\right)^{\prime} \cdot \mathrm{D}\left(\varepsilon_{i, j+1}^{\mathrm{CL}}\right)\right], \\ \Sigma_{j}^{(A)} & =E\left[\mathrm{D}\left(\varepsilon_{i, j+1}^{\mathrm{AD}}\right) \cdot \sigma_{j}^{\mathrm{AD}} \cdot\left(\sigma_{j}^{\mathrm{AD}}\right)^{\prime} \cdot \mathrm{D}\left(\varepsilon_{i, j+1}^{\mathrm{AD}}\right)\right], \end{aligned} \tag{16}

\begin{aligned} \Sigma_{j}^{(C, A)} & =E\left[\mathrm{D}\left(\varepsilon_{i, j+1}^{\mathrm{CL}}\right) \cdot \sigma_{j}^{\mathrm{CL}} \cdot\left(\sigma_{j}^{\mathrm{AD}}\right)^{\prime} \cdot \mathrm{D}\left(\varepsilon_{i, j+1}^{\mathrm{AD}}\right)\right], \\ \Sigma_{j}^{(A, C)} & =E\left[\mathrm{D}\left(\varepsilon_{i, j+1}^{\mathrm{AD}}\right) \cdot \sigma_{j}^{\mathrm{AD}} \cdot\left(\sigma_{j}^{\mathrm{CL}}\right)^{\prime} \cdot \mathrm{D}\left(\varepsilon_{i, j+1}^{\mathrm{CL}}\right)\right] \\ & =\left(\Sigma_{j}^{(C, A)}\right)^{\prime} . \end{aligned} \tag{17}

Thus, we have

\small{ \Sigma_{j}=\left(\begin{array}{ccccc} \left(\sigma_{j}^{(1)}\right)^{2} & \sigma_{j}^{(1)} \sigma_{j}^{(2)} \rho_{j}^{(1,2)} & \cdots & \cdots & \sigma_{j}^{(1)} \sigma_{j}^{(N)} \rho_{j}^{(1, N)} \\ \sigma_{j}^{(2)} \sigma_{j}^{(1)} \rho_{j}^{(2,1)} & \left(\sigma_{j}^{(2)}\right)^{2} & \cdots & \cdots & \sigma_{j}^{(2)} \sigma_{j}^{(N)} \rho_{j}^{(2, N)} \\ \vdots & \vdots & \ddots & & \vdots \\ \vdots & \vdots & & \ddots & \vdots \\ \sigma_{j}^{(N)} \sigma_{j}^{(1)} \rho_{j}^{(N, 1)} & \sigma_{j}^{(N)} \sigma_{j}^{(2)} \rho_{j}^{(N, 2)} & \cdots & \cdots & \left(\sigma_{j}^{(N)}\right)^{2} \end{array}\right)=\left(\begin{array}{cc} \Sigma_{j}^{(C)} & \Sigma_{j}^{(C, A)} \\ \Sigma_{j}^{(A, C)} & \Sigma_{j}^{(A)} \end{array}\right) . \tag{18}}
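The block structure in (18) can be checked numerically. The sketch below assumes hypothetical values for $\sigma_j$ and the correlations $\rho_j^{(n,m)}$ with K = 2 and N = 3 (these numbers are purely illustrative); it builds $\Sigma_j$ entry by entry as $\sigma_j^{(n)} \sigma_j^{(m)} \rho_j^{(n,m)}$ and slices out the four blocks.

```python
import numpy as np

K, N = 2, 3  # hypothetical: two CL triangles and one ALR triangle

# Illustrative parameters for one development year j.
sigma_j = np.array([0.8, 1.2, 0.5])      # (sigma_j^CL, sigma_j^AD)
rho_j = np.array([
    [1.0, 0.3, 0.1],
    [0.3, 1.0, 0.2],
    [0.1, 0.2, 1.0],
])

# Entry (n, m) equals sigma^(n) * sigma^(m) * rho^(n, m), as in (18).
Sigma_j = np.diag(sigma_j) @ rho_j @ np.diag(sigma_j)

# Blocks of (18).
Sigma_C = Sigma_j[:K, :K]    # CL block
Sigma_A = Sigma_j[K:, K:]    # ALR block
Sigma_CA = Sigma_j[:K, K:]   # cross block
Sigma_AC = Sigma_j[K:, :K]   # its transpose, cf. (17)
```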

The Multivariate Model 3.1 is suitable for portfolios of N correlated subportfolios in which the first K subportfolios satisfy the homogeneity assumptions of the CL method, and the other N − K subportfolios satisfy the homogeneity assumptions of the ALR method. Under Model Assumptions 3.1, the properties of the cumulative claims $\mathbf{C}_{i, j}^{\mathrm{CL}}$ and the incremental claims $\mathbf{X}_{i, j}^{\mathrm{AD}}$ are consistent with the assumptions of the multivariate CL time series model (see Merz and Wüthrich (2008)) and the multivariate ALR model (see Merz and Wüthrich (2009)). In particular, for K = N and K = 0, Model Assumptions 3.1 reduce to the model assumptions of the multivariate CL time series model and the multivariate ALR model, respectively.

Remark 3.2

-

The factors $\mathbf{f}_{j}$ are called K-dimensional development factors, CL factors, age-to-age factors or link-ratios. The (N − K)-dimensional constants $\mathbf{m}_{j}$ are called incremental loss ratios and can be interpreted as a multivariate scaled expected reporting/cashflow pattern over the different development years.

-

In most practical applications, $\mathrm{V}_{i}$ is chosen to be diagonal so as to represent a volume measure of accident year i that is known a priori (e.g., premium, number of contracts, expected number of claims, etc.) or external knowledge from experts, similar portfolios or market statistics. Since we assume that $\mathrm{V}_{i}$ is a positive definite symmetric matrix, there is a well-defined positive definite symmetric matrix $\mathrm{V}_{i}^{1 / 2}$ (called square root of $\mathrm{V}_{i}$) satisfying $\mathrm{V}_{i}=\mathrm{V}_{i}^{1 / 2} \cdot \mathrm{V}_{i}^{1 / 2}$.

-

Within the CL and ALR framework, Braun (2004) and Merz and Wüthrich (2007, 2008, 2009) proposed the development year-based correlations given by (15). Often correlations between different run-off triangles are attributed to claims inflation. From this point of view it may seem more reasonable to allow for correlation between the cumulative or incremental claims of the same calendar year (diagonals of the claims development triangles). This would introduce dependencies between accident years. However, at the moment it is not mathematically tractable to treat such calendar year-based correlations within the CL and ALR framework. That is, all calendar year-based dependencies should be removed from the data before calculating the reserves with the CL or ALR method. However, after correcting the data for the calendar year-based correlations, further direct and indirect sources for correlations between different run-off triangles of a portfolio exist and should be taken into account (cf. Houltram (2003)). This is exactly what our model does.

-

Matrix $\Sigma_{j}^{(C)}$ reflects the correlation structure between the cumulative claims of a given development year within the first K subportfolios and matrix $\Sigma_{j}^{(A)}$ the correlation structure between the incremental claims of a given development year within the last N − K subportfolios. The matrices $\Sigma_{j}^{(C, A)}$ and $\Sigma_{j}^{(A, C)}$ reflect the correlation structure between the cumulative claims in the first K subportfolios and the incremental claims in the last N − K subportfolios for the same development year.

-

There may occur difficulties about positivity in the time-series definition (11), which can be solved in a mathematically correct way. We omit these derivations since they do not lead to a deeper understanding of the model. Refer to Wüthrich, Merz, and Bühlmann (2008) for more details.

-

The indices for σ and ε differ by 1, since it simplifies the comparability with the derivations and results in Merz and Wüthrich (2008, 2009).

We obtain for the conditionally expected ultimate claim $E\left[\mathbf{C}_{i, J} \mid \mathcal{D}_{I}^{N}\right]$:

Lemma 3.3 Under Model Assumptions 3.1 we have for all 1 ≤ i ≤ I:

a)

\begin{aligned} E\left[\mathbf{C}_{i, J}^{\mathrm{CL}} \mid \mathcal{D}_{I}^{N}\right] & =E\left[\mathbf{C}_{i, J}^{\mathrm{CL}} \mid \mathbf{C}_{i, I-i}\right]=E\left[\mathbf{C}_{i, J}^{\mathrm{CL}} \mid \mathbf{C}_{i, I-i}^{\mathrm{CL}}\right] \\ & =\prod_{j=I-i}^{J-1} \mathrm{D}\left(\mathbf{f}_{j}\right) \cdot \mathbf{C}_{i, I-i}^{\mathrm{CL}}, \end{aligned}

b)

\begin{aligned} E\left[\mathbf{C}_{i, J}^{\mathrm{AD}} \mid \mathcal{D}_{I}^{N}\right] & =E\left[\mathbf{C}_{i, J}^{\mathrm{AD}} \mid \mathbf{C}_{i, I-i}\right]=E\left[\mathbf{C}_{i, J}^{\mathrm{AD}} \mid \mathbf{C}_{i, I-i}^{\mathrm{AD}}\right] \\ & =\mathbf{C}_{i, I-i}^{\mathrm{AD}}+\mathrm{V}_{i} \cdot \sum_{j=I-i+1}^{J} \mathbf{m}_{j} . \end{aligned}

Proof This immediately follows from Model Assumptions 3.1.
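For readers who want the intermediate step spelled out, the following is a sketch of the one-period calculations behind Lemma 3.3. It assumes, in line with (15) and the time-series reading of (11) and (14), that the noise terms have mean zero and are independent of the claims observed so far for the same accident year.

```latex
\begin{aligned}
E\left[\mathbf{C}_{i, j+1}^{\mathrm{CL}} \mid \mathbf{C}_{i, j}\right]
 &= \mathrm{D}\left(\mathbf{f}_{j}\right) \cdot \mathbf{C}_{i, j}^{\mathrm{CL}}
  + \mathrm{D}\left(\mathbf{C}_{i, j}^{\mathrm{CL}}\right)^{1 / 2}
    \cdot E\left[\mathrm{D}\left(\varepsilon_{i, j+1}^{\mathrm{CL}}\right)\right]
    \cdot \sigma_{j}^{\mathrm{CL}}
  = \mathrm{D}\left(\mathbf{f}_{j}\right) \cdot \mathbf{C}_{i, j}^{\mathrm{CL}}, \\
E\left[\mathbf{X}_{i, j}^{\mathrm{AD}}\right]
 &= \mathrm{V}_{i} \cdot \mathbf{m}_{j}
  + \mathrm{V}_{i}^{1 / 2}
    \cdot E\left[\mathrm{D}\left(\varepsilon_{i, j}^{\mathrm{AD}}\right)\right]
    \cdot \sigma_{j-1}^{\mathrm{AD}}
  = \mathrm{V}_{i} \cdot \mathbf{m}_{j} .
\end{aligned}
```

Iterating the first identity from j = I − i to J − 1 gives part a), and summing the second over j = I − i + 1, . . . , J and adding the last observed cumulative claims gives part b).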

This result motivates an algorithm for estimating the outstanding claims liabilities, given the observations $\mathcal{D}_{I}^{N}$. If the K-dimensional CL factors $\mathbf{f}_{j}$ and the (N − K)-dimensional incremental loss ratios $\mathbf{m}_{j}$ are known, the outstanding claims liabilities of accident year i for the first K and the last N − K correlated run-off triangles are predicted by

E\left[\mathbf{C}_{i, J}^{\mathrm{CL}} \mid \mathcal{D}_{I}^{N}\right]-\mathbf{C}_{i, I-i}^{\mathrm{CL}}=\prod_{j=I-i}^{J-1} \mathrm{D}\left(\mathbf{f}_{j}\right) \cdot \mathbf{C}_{i, I-i}^{\mathrm{CL}}-\mathbf{C}_{i, I-i}^{\mathrm{CL}} \tag{19}

and

E\left[\mathbf{C}_{i, J}^{\mathrm{AD}} \mid \mathcal{D}_{I}^{N}\right]-\mathbf{C}_{i, I-i}^{\mathrm{AD}}=\mathrm{V}_{i} \cdot \sum_{j=I-i+1}^{J} \mathbf{m}_{j}, \tag{20}

respectively. However, in practical applications we have to estimate the parameters $\mathbf{f}_{j}$ and $\mathbf{m}_{j}$ from the data in the upper triangles. Pröhl and Schmidt (2005) and Schmidt (2006b) proposed the multivariate CL factor estimates for j = 0, . . . , J − 1

\begin{aligned} \hat{\mathbf{f}}_{j}= & \left(\hat{f}_{j}^{(1)}, \ldots, \hat{f}_{j}^{(K)}\right)^{\prime} \\ = & \left(\sum_{i=0}^{I-j-1} \mathrm{D}\left(\mathbf{C}_{i, j}^{\mathrm{CL}}\right)^{1 / 2}\left(\Sigma_{j}^{(C)}\right)^{-1} \mathrm{D}\left(\mathbf{C}_{i, j}^{\mathrm{CL}}\right)^{1 / 2}\right)^{-1} \\ & \cdot \sum_{i=0}^{I-j-1} \mathrm{D}\left(\mathbf{C}_{i, j}^{\mathrm{CL}}\right)^{1 / 2}\left(\Sigma_{j}^{(C)}\right)^{-1} \mathrm{D}\left(\mathbf{C}_{i, j}^{\mathrm{CL}}\right)^{-1 / 2} \cdot \mathbf{C}_{i, j+1}^{\mathrm{CL}} . \end{aligned} \tag{21}

In the framework of the multivariate ALR method Hess, Schmidt, and Zocher (2006) and Schmidt (2006b) proposed the multivariate estimates for the incremental loss ratios

\begin{aligned} \hat{\mathbf{m}}_{j}= & \left(\hat{m}_{j}^{(1)}, \ldots, \hat{m}_{j}^{(N-K)}\right)^{\prime} \\ = & \left(\sum_{i=0}^{I-j} \mathrm{~V}_{i}^{1 / 2} \cdot\left(\Sigma_{j-1}^{(A)}\right)^{-1} \cdot \mathrm{~V}_{i}^{1 / 2}\right)^{-1} \\ & \cdot \sum_{i=0}^{I-j} \mathrm{~V}_{i}^{1 / 2} \cdot\left(\Sigma_{j-1}^{(A)}\right)^{-1} \cdot \mathrm{~V}_{i}^{-1 / 2} \cdot \mathbf{X}_{i, j}^{\mathrm{AD}} . \end{aligned} \tag{22}
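As an illustration of (21) and (22), the following sketch evaluates both estimators with NumPy. It treats the covariance matrices as known inputs (in practice they are estimated), and the function names, argument layout and all numbers are assumptions made for this example only.

```python
import numpy as np

def sym_sqrt(V):
    """Symmetric square root of a positive definite symmetric matrix."""
    w, Q = np.linalg.eigh(V)
    return (Q * np.sqrt(w)) @ Q.T

def cl_factor_estimate(C_j, C_j1, Sigma_C):
    """Sketch of (21). Rows of C_j and C_j1 are C^CL_{i,j} and C^CL_{i,j+1}
    for accident years i = 0..I-j-1; Sigma_C is treated as known."""
    Sinv = np.linalg.inv(Sigma_C)
    K = Sigma_C.shape[0]
    lhs, rhs = np.zeros((K, K)), np.zeros(K)
    for c, c_next in zip(C_j, C_j1):
        root = np.diag(np.sqrt(c))                  # D(C_{i,j})^{1/2}
        lhs += root @ Sinv @ root
        rhs += root @ Sinv @ (c_next / np.sqrt(c))  # D(C_{i,j})^{-1/2} C_{i,j+1}
    return np.linalg.solve(lhs, rhs)

def alr_ratio_estimate(X_j, V, Sigma_A):
    """Sketch of (22). Rows of X_j are X^AD_{i,j} for i = 0..I-j;
    V is the matching list of matrices V_i; Sigma_A is treated as known."""
    Sinv = np.linalg.inv(Sigma_A)
    M = Sigma_A.shape[0]
    lhs, rhs = np.zeros((M, M)), np.zeros(M)
    for x, V_i in zip(X_j, V):
        root = sym_sqrt(V_i)                        # V_i^{1/2}
        lhs += root @ Sinv @ root
        rhs += root @ Sinv @ np.linalg.solve(root, x)  # V_i^{-1/2} X_{i,j}
    return np.linalg.solve(lhs, rhs)

# Hypothetical data: K = 2 CL triangles, three accident years in column j.
C_j = np.array([[1000.0, 400.0], [1100.0, 450.0], [1200.0, 500.0]])
C_j1 = np.array([[1500.0, 520.0], [1600.0, 600.0], [1850.0, 640.0]])
Sigma_C = np.array([[1.0, 0.3], [0.3, 2.0]])
f_hat = cl_factor_estimate(C_j, C_j1, Sigma_C)
f_hat_0 = cl_factor_estimate(C_j, C_j1, np.eye(2))  # identity covariance

# Hypothetical data: one ALR triangle with scalar volumes.
V = [np.array([[100.0]]), np.array([[120.0]])]
X_j = np.array([[30.0], [33.0]])
m_hat = alr_ratio_estimate(X_j, V, np.array([[2.0]]))
```

Solving the linear system with `np.linalg.solve` instead of forming the outer inverse explicitly is a numerical convenience and does not change the estimator.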

Remark 3.4

-

In the case K = 1 (i.e., only one CL run-off subportfolio) the estimator (21) coincides with the classical univariate CL estimator of Mack (1993). Analogously, in the case N − K = 1 (i.e., only one additive run-off subportfolio) the estimator (22) coincides with the univariate incremental loss ratio estimates

\hat{m}_{j}=\sum_{i=0}^{I-j} \frac{X_{i, j}}{\sum_{k=0}^{I-j} V_{k}} \tag{23}

with deterministic one-dimensional weights $V_{i}$ (see, e.g., Schmidt (2006b, 2006a)).

-

With respect to the criterion of minimal expected squared loss the multivariate CL factor estimates (21) are optimal unbiased linear estimators for $\mathbf{f}_{j}$ (cf. Pröhl and Schmidt (2005) and Schmidt (2006b)) and the multivariate incremental loss ratio estimates (22) are optimal unbiased linear estimators for $\mathbf{m}_{j}$ (cf. Hess, Schmidt, and Zocher (2006) and Schmidt (2006b)).

-

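For uncorrelated cumulative and incremental claims in the different run-off subportfolios (i.e., we set $\Sigma_{j}^{(C)}=\mathrm{I}$ and $\Sigma_{j-1}^{(A)}=\mathrm{I}$, where $\mathrm{I}$ denotes the identity matrix of the appropriate dimension) we obtain the (unbiased) estimators for $\mathbf{f}_{j}$ and $\mathbf{m}_{j}$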

\hat{\mathbf{f}}_{j}^{(0)}=\left(\sum_{i=0}^{I-j-1} \mathrm{D}\left(\mathbf{C}_{i, j}^{\mathrm{CL}}\right)\right)^{-1} \cdot \sum_{i=0}^{I-j-1} \mathbf{C}_{i, j+1}^{\mathrm{CL}} \tag{24}

and

\hat{\mathbf{m}}_{j}^{(0)}=\left(\sum_{i=0}^{I-j} \mathrm{~V}_{i}\right)^{-1} \cdot \sum_{i=0}^{I-j} \mathbf{X}_{i, j}^{\mathrm{AD}} . \tag{25}

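For given covariance matrices $\Sigma_{j}^{(C)}$ and $\Sigma_{j-1}^{(A)}$, both $\hat{\mathbf{f}}_{j}$ and $\hat{\mathbf{f}}_{j}^{(0)}$ as well as $\hat{\mathbf{m}}_{j}$ and $\hat{\mathbf{m}}_{j}^{(0)}$ are unbiased estimators for the multivariate CL factor $\mathbf{f}_{j}$ and the multivariate incremental loss ratio $\mathbf{m}_{j}$, respectively (see Lemma 3.6 below). However, only $\hat{\mathbf{f}}_{j}$ and $\hat{\mathbf{m}}_{j}$ are optimal in the sense that they have minimal expected squared loss; see the second bullet of these remarks.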
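The reduction of (21) and (22) to (24) and (25) can be verified term by term. The following short calculation is a sketch that replaces the covariance matrices by identity matrices and uses only $\mathrm{D}(\mathbf{c})^{1/2} \cdot \mathrm{D}(\mathbf{c})^{1/2}=\mathrm{D}(\mathbf{c})$ and $\mathrm{V}_{i}^{1/2} \cdot \mathrm{V}_{i}^{1/2}=\mathrm{V}_{i}$.

```latex
\begin{aligned}
\mathrm{D}\left(\mathbf{C}_{i, j}^{\mathrm{CL}}\right)^{1 / 2} \cdot \mathrm{I}^{-1} \cdot
\mathrm{D}\left(\mathbf{C}_{i, j}^{\mathrm{CL}}\right)^{1 / 2}
 &= \mathrm{D}\left(\mathbf{C}_{i, j}^{\mathrm{CL}}\right), &
\mathrm{D}\left(\mathbf{C}_{i, j}^{\mathrm{CL}}\right)^{1 / 2} \cdot \mathrm{I}^{-1} \cdot
\mathrm{D}\left(\mathbf{C}_{i, j}^{\mathrm{CL}}\right)^{-1 / 2} \cdot \mathbf{C}_{i, j+1}^{\mathrm{CL}}
 &= \mathbf{C}_{i, j+1}^{\mathrm{CL}}, \\
\mathrm{V}_{i}^{1 / 2} \cdot \mathrm{I}^{-1} \cdot \mathrm{V}_{i}^{1 / 2}
 &= \mathrm{V}_{i}, &
\mathrm{V}_{i}^{1 / 2} \cdot \mathrm{I}^{-1} \cdot \mathrm{V}_{i}^{-1 / 2} \cdot \mathbf{X}_{i, j}^{\mathrm{AD}}
 &= \mathbf{X}_{i, j}^{\mathrm{AD}} .
\end{aligned}
```

Summing each identity over the accident years in (21) and (22) gives exactly the sums appearing in (24) and (25).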

In the sequel we predict the cumulative claims $\mathbf{C}_{i, j}^{\mathrm{CL}}$ of the first K run-off triangles and the cumulative claims $\mathbf{C}_{i, j}^{\mathrm{AD}}$ of the last N − K run-off triangles for i + j > I by the multivariate CL predictors

\begin{aligned} {\widehat{\mathbf{C}_{i, j}}}^{\mathrm{CL}} & =\left({\widehat{C_{i, j}^{(1)}}}^{\mathrm{CL}}, \ldots,{\widehat{C_{i, j}^{(K)}}}^{\mathrm{CL}}\right)^{\prime}=\hat{E}\left[\mathbf{C}_{i, j}^{\mathrm{CL}} \mid \mathcal{D}_{I}^{N}\right] \\ & =\prod_{l=I-i}^{j-1} \mathrm{D}\left(\hat{\mathbf{f}}_{l}\right) \cdot \mathbf{C}_{i, I-i}^{\mathrm{CL}} \end{aligned} \tag{26}

and the multivariate ALR predictors

\begin{aligned} {\widehat{\mathbf{C}_{i, j}}}^{\mathrm{AD}} & =\left({\widehat{C_{i, j}^{(K+1)}}}^{\mathrm{AD}}, \ldots,{\widehat{C_{i, j}^{(N)}}}^{\mathrm{AD}}\right)^{\prime}=\hat{E}\left[\mathbf{C}_{i, j}^{\mathrm{AD}} \mid \mathcal{D}_{I}^{N}\right] \\ & =\mathbf{C}_{i, I-i}^{\mathrm{AD}}+\mathrm{V}_{i} \cdot \sum_{l=I-i+1}^{j} \hat{\mathbf{m}}_{l}. \end{aligned} \tag{27}

This means that we predict the N-dimensional ultimate claims $\mathbf{C}_{i, J}$ by

\widehat{\mathbf{C}_{i, J}}=\binom{{\widehat{\mathbf{C}_{i, J}}}^{\mathrm{CL}}}{{\widehat{\mathbf{C}_{i, J}}}^{\mathrm{AD}}}. \tag{28}

Estimator 3.5 (Combined CL and ALR estimator) The combined CL and ALR estimator for $E\left[\mathbf{C}_{i, j} \mid \mathcal{D}_{I}^{N}\right]$ is for i + j > I given by

\widehat{\mathbf{C}_{i, j}}=\hat{E}\left[\mathbf{C}_{i, j} \mid \mathcal{D}_{I}^{N}\right]=\binom{{\widehat{\mathbf{C}_{i, j}}}^{\mathrm{CL}}}{{\widehat{\mathbf{C}_{i, j}}}^{\mathrm{AD}}}.
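The predictors (26) and (27) and their combination can be sketched as follows. The helper names, the dictionary layout of the parameter estimates and all numbers are hypothetical; the estimates themselves would come from (21) and (22).

```python
import numpy as np

def predict_cl(C_diag, f_hat, start, j):
    """Sketch of (26): apply D(f_hat[l]) for l = start..j-1 to C^CL_{i,I-i},
    where start = I - i is the last observed development year."""
    pred = np.array(C_diag, dtype=float)
    for l in range(start, j):
        pred = f_hat[l] * pred      # D(f_l) acts componentwise
    return pred

def predict_alr(C_diag, V_i, m_hat, start, j):
    """Sketch of (27): add V_i times m_hat[l] for l = start+1..j."""
    pred = np.array(C_diag, dtype=float)
    for l in range(start + 1, j + 1):
        pred = pred + V_i @ m_hat[l]
    return pred

# Hypothetical inputs: I = J = 2, accident year i = 1, so start = I - i = 1,
# with K = 2 CL triangles and N - K = 1 ALR triangle.
f_hat = {0: np.array([1.5, 1.4]), 1: np.array([1.1, 1.2])}
m_hat = {1: np.array([0.30]), 2: np.array([0.10])}
V_i = np.array([[1000.0]])

C_cl = predict_cl([2000.0, 500.0], f_hat, start=1, j=2)
C_ad = predict_alr([400.0], V_i, m_hat, start=1, j=2)

# Combined estimator: stack the CL part on top of the ALR part.
C_hat = np.concatenate([C_cl, C_ad])

# Aggregated ultimate claim of the accident year: sum over all subportfolios.
total = np.ones(3) @ C_hat
```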

The following lemma collects results from Lemma 3.5 in Merz and Wüthrich (2008) as well as from Property 3.4 and Property 3.7 in Merz and Wüthrich (2009).

Lemma 3.6 Under Model Assumptions 3.1 we have:

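a) $\hat{\mathbf{f}}_{j}$ is, given $\mathcal{B}_{j}^{K}$, an unbiased estimator for $\mathbf{f}_{j}$, i.e., $E\left[\hat{\mathbf{f}}_{j} \mid \mathcal{B}_{j}^{K}\right]=\mathbf{f}_{j}$,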

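b) $\hat{\mathbf{f}}_{j}$ and $\hat{\mathbf{f}}_{k}$ are uncorrelated for j ≠ k, i.e., $E\left[\hat{\mathbf{f}}_{j} \cdot \hat{\mathbf{f}}_{k}^{\prime}\right]=E\left[\hat{\mathbf{f}}_{j}\right] \cdot E\left[\hat{\mathbf{f}}_{k}\right]^{\prime}$,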

c) $\hat{\mathbf{m}}_{j}$ is an unbiased estimator for $\mathbf{m}_{j}$, i.e., $E\left[\hat{\mathbf{m}}_{j}\right]=\mathbf{m}_{j}$,

d) $\hat{\mathbf{m}}_{j}$ and $\hat{\mathbf{m}}_{k}$ are independent for j ≠ k,

e)

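f) $\widehat{\mathbf{C}_{i, J}}$ is, given $\mathbf{C}_{i, I-i}$, an unbiased estimator for $E\left[\mathbf{C}_{i, J} \mid \mathcal{D}_{I}^{N}\right]$, i.e., $E\left[\widehat{\mathbf{C}_{i, J}} \mid \mathbf{C}_{i, I-i}\right]=E\left[\mathbf{C}_{i, J} \mid \mathcal{D}_{I}^{N}\right]$.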

Remark 3.7

-

Note that Lemma 3.6 f) shows that we have unbiased estimators of the conditionally expected ultimate claim $E\left[\mathbf{C}_{i, J} \mid \mathcal{D}_{I}^{N}\right]$. Moreover, it implies that the estimator of the aggregated ultimate claims for accident year i

\begin{aligned}& \sum_{n=1}^K{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}+\sum_{n=K+1}^N{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}} \\& \quad=\mathbf{1}^{\prime} \cdot \widehat{\mathbf{C}_{i, J}}=\mathbf{1}_K^{\prime} \cdot{\widehat{\mathbf{C}_{i, J}}}^{\mathrm{CL}}+\mathbf{1}_{N-K}^{\prime} \cdot{\widehat{\mathbf{C}_{i, J}}}^{\mathrm{AD}}\end{aligned}

is, given $\mathbf{C}_{i, I-i}$, an unbiased estimator for $\sum_{n=1}^{N} E\left[C_{i, J}^{(n)} \mid \mathcal{D}_{I}^{N}\right]$.

-

Note that the parameters for the CL method are estimated independently from the observations belonging to the ALR method and vice versa. That is, here we could even go one step beyond and learn from ALR method observations when estimating CL parameters and vice versa. We omit these derivations since formulas get more involved and neglect the fact that one may even improve estimators. Our goal here is to give an estimate for the overall MSEP for the parameter estimators (21) and (22).

4. Conditional MSEP

In this section we consider the prediction uncertainty of the predictors

\begin{array}{l} \sum_{n=1}^{K}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}+\sum_{n=K+1}^{N}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}} \qquad \text{and}\\ \sum_{i=1}^{I}\left(\sum_{n=1}^{K}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}+\sum_{n=K+1}^{N}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}\right), \end{array}

given the observations $\mathcal{D}_{I}^{N}$, for the ultimate claims. This means our goal is to derive an estimate of the conditional MSEP for single accident years i ∈ {1, . . . , I} which is defined as

\begin{array}{l} \operatorname{msep}_{\sum_{n=1}^{N} C_{i, J}^{(n)} \mid \mathcal{D}_{I}^{N}}\left(\sum_{n=1}^{K}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}+\sum_{n=K+1}^{N}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}\right) \\ \quad=E\left[\left(\sum_{n=1}^{K}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}+\sum_{n=K+1}^{N}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}-\sum_{n=1}^{N} C_{i, J}^{(n)}\right)^{2} \mid \mathcal{D}_{I}^{N}\right], \end{array} \tag{29}

as well as an estimate of the conditional MSEP for aggregated accident years given by

\begin{array}{c} \operatorname{msep}_{\Sigma_{i, n} C_{i, J}^{(n)} \mid \mathcal{D}_{I}^{N}}\left(\sum_{i=1}^{I} \sum_{n=1}^{K}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}+\sum_{i=1}^{I} \sum_{n=K+1}^{N}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}\right) \\ =E\left[\left(\sum_{i=1}^{I} \sum_{n=1}^{K}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}+\sum_{i=1}^{I} \sum_{n=K+1}^{N}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}\right.\right. \\ \left.\left.-\sum_{i=1}^{I} \sum_{n=1}^{N} C_{i, J}^{(n)}\right)^{2} \mid \mathcal{D}_{I}^{N}\right] . \end{array} \tag{30}

4.1. Conditional MSEP for single accident years

We choose i ∈ {1, . . . , I}. The conditional MSEP (29) for a single accident year i decomposes as

\begin{array}{l} \operatorname{msep}_{\Sigma_{n=1}^{N} C_{i, J}^{(n)} \mid \mathcal{D}_{I}^{N}}\left(\sum_{n=1}^{K}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}+\sum_{n=K+1}^{N}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}\right) \\ \quad= \operatorname{msep}_{\Sigma_{n=1}^{K} C_{i, J}^{(n)} \mid \mathcal{D}_{I}^{N}}\left(\sum_{n=1}^{K}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}\right) \\ \quad+\operatorname{msep}_{\Sigma_{n=K+1}^{N} C_{i, J}^{(n)} \mid \mathcal{D}_{I}^{N}}\left(\sum_{n=K+1}^{N}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}\right) \\ +2 \cdot E\left[\left(\sum_{n=1}^{K}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}-\sum_{n=1}^{K} C_{i, J}^{(n)}\right)\right. \\ \left.\cdot\left(\sum_{n=K+1}^{N}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}-\sum_{n=K+1}^{N} C_{i, J}^{(n)}\right) \mid \mathcal{D}_{I}^{N}\right] . \end{array} \tag{31}
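The decomposition (31) is the conditional version of the binomial expansion. As a sketch, write a and b for the prediction errors of the CL part and of the ALR part (these two abbreviations are introduced here only for the calculation):

```latex
\begin{aligned}
a &= \sum_{n=1}^{K}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}-\sum_{n=1}^{K} C_{i, J}^{(n)}, \qquad
b = \sum_{n=K+1}^{N}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}-\sum_{n=K+1}^{N} C_{i, J}^{(n)}, \\
E\left[(a+b)^{2} \mid \mathcal{D}_{I}^{N}\right]
 &= E\left[a^{2} \mid \mathcal{D}_{I}^{N}\right]
  + E\left[b^{2} \mid \mathcal{D}_{I}^{N}\right]
  + 2 \cdot E\left[a \cdot b \mid \mathcal{D}_{I}^{N}\right] .
\end{aligned}
```

The first two summands are the conditional MSEPs of the CL and ALR parts, and the last summand is the cross product term in (31).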

The first two terms on the right-hand side of (31) are the conditional MSEP for single accident years i if we use the multivariate CL method for the first K run-off triangles (numbered by n = 1, . . . , K) and the multivariate ALR method for the last N − K run-off triangles (numbered by n = K + 1, . . . , N), respectively. Estimators for these two conditional MSEPs are derived in Merz and Wüthrich (2008, 2009) and are given by Estimator 4.1 and Estimator 4.2, below.

Estimator 4.1 (MSEP for single accident years, CL method, cf. Merz and Wüthrich (2008)) Under Model Assumptions 3.1 we have the estimator for the conditional MSEP of the ultimate claims in the first K run-off triangles for a single accident year i ∈ {1, . . . , I}

\begin{array}{l} \widehat{\operatorname{msep}}_{\Sigma_{n=1}^{K} C_{i, J}^{(n)} \mid \mathcal{D}_{I}^{N}}\left(\sum_{n=1}^{K}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}\right) \\ = \mathbf{1}_{K}^{\prime} \cdot\left(\sum_{l=I-i+1}^{J} \prod_{k=l}^{J-1} \mathrm{D}\left(\hat{\mathbf{f}}_{k}\right) \cdot \hat{\Sigma}_{i, l-1}^{\mathrm{C}} \cdot \prod_{k=l}^{J-1} \mathrm{D}\left(\hat{\mathbf{f}}_{k}\right)\right) \cdot \mathbf{1}_{K} \\ +\mathbf{1}_{K}^{\prime} \cdot \mathrm{D}\left(\mathbf{C}_{i, I-i}^{\mathrm{CL}}\right) \cdot\left(\hat{\Delta}_{i, J}^{(n, m)}\right)_{1 \leq n, m \leq K} \cdot \mathrm{D}\left(\mathbf{C}_{i, I-i}^{\mathrm{CL}}\right) \cdot \mathbf{1}_{K}, \end{array} \tag{32}

with

\hat{\Sigma}_{i, l-1}^{\mathrm{C}}=\mathrm{D}\left({\widehat{\mathbf{C}_{i, l-1}}}^{\mathrm{CL}}\right)^{1 / 2} \cdot \hat{\Sigma}_{l-1}^{(C)} \cdot \mathrm{D}\left({\widehat{\mathbf{C}_{i, l-1}}}^{\mathrm{CL}}\right)^{1 / 2}, \tag{33}

\begin{aligned} \hat{\Delta}_{i, J}^{(n, m)}= & \prod_{l=I-i}^{J-1}\left(\hat{f}_{l}^{(n)} \cdot \hat{f}_{l}^{(m)}+\sum_{k=0}^{I-l-1} \hat{\mathbf{a}}_{n \mid l}^{k} \cdot \hat{\Sigma}_{l}^{(C)} \cdot\left(\hat{\mathbf{a}}_{m \mid l}^{k}\right)^{\prime}\right) \\ & -\prod_{l=I-i}^{J-1} \hat{f}_{l}^{(n)} \cdot \hat{f}_{l}^{(m)}, \end{aligned} \tag{34}

where $\hat{\mathbf{a}}_{n \mid l}^{k}$ and $\hat{\mathbf{a}}_{m \mid l}^{k}$ are the nth and mth row of

\begin{aligned} \hat{\mathbf{A}}_{l}^{k}= & \left(\sum_{i=0}^{I-l-1} \mathrm{D}\left(\mathbf{C}_{i, l}^{\mathrm{CL}}\right)^{1 / 2} \cdot\left(\hat{\Sigma}_{l}^{(C)}\right)^{-1} \cdot \mathrm{D}\left(\mathbf{C}_{i, l}^{\mathrm{CL}}\right)^{1 / 2}\right)^{-1} \\ & \cdot \mathrm{D}\left({\widehat{\mathbf{C}_{k, l}}}^{\mathrm{CL}}\right)^{1 / 2} \cdot\left(\hat{\Sigma}_{l}^{(C)}\right)^{-1} \end{aligned} \tag{35}

and the parameter estimates are given in Section 5.

Estimator 4.2 (MSEP for single accident years, ALR method, cf. Merz and Wüthrich (2009)) Under Model Assumptions 3.1 we have the estimator for the conditional MSEP of the ultimate claims in the last N − K run-off triangles for a single accident year i ∈ {1, . . . , I}

\begin{array}{l} \widehat{\operatorname{msep}}_{\Sigma_{n=K+1}^{N} C_{i, J}^{(n)} \mid \mathcal{D}_{I}^{N}}\left(\sum_{n=K+1}^{N}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}\right) \\ = \mathbf{1}_{N-K}^{\prime} \cdot \mathrm{V}_{i}^{1 / 2} \cdot \sum_{j=I-i+1}^{J} \hat{\Sigma}_{j-1}^{(A)} \cdot \mathrm{V}_{i}^{1 / 2} \cdot \mathbf{1}_{N-K} \\ +\mathbf{1}_{N-K}^{\prime} \cdot \mathrm{V}_{i} \\ \cdot \sum_{j=I-i+1}^{J}\left(\sum_{l=0}^{I-j} \mathrm{~V}_{l}^{1 / 2} \cdot\left(\hat{\Sigma}_{j-1}^{(A)}\right)^{-1} \cdot \mathrm{~V}_{l}^{1 / 2}\right)^{-1} \\ \cdot \mathrm{~V}_{i} \cdot \mathbf{1}_{N-K}, \end{array} \tag{36}

where the parameter estimates are given in Section 5.

Remark 4.3

-

The first terms on the right-hand side of (32) and (36) are the estimators of the conditional process variances and the second terms are the estimators of the conditional estimation errors, respectively.

-

For K = 1 Estimator 4.1 reduces to the estimator of the conditional MSEP for a single run-off triangle in the univariate CL time series model of Buchwalder et al. (2006).

-

For N − K = 1 Estimator 4.2 reduces to the estimator of the conditional MSEP for a single run-off triangle in the univariate ALR model (see Mack (2002)).
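To make the structure of (36) concrete, the sketch below evaluates its two terms for given inputs. The function name and data layout are assumptions for this example, the covariance estimates are passed in as given (their estimation is the subject of Section 5), and the numbers form a hypothetical univariate case (N − K = 1) chosen so that the result can be checked by hand.

```python
import numpy as np

def sym_sqrt(V):
    """Symmetric square root of a positive definite symmetric matrix."""
    w, Q = np.linalg.eigh(V)
    return (Q * np.sqrt(w)) @ Q.T

def alr_msep_single_year(i, V, Sigma_A_hat):
    """Sketch of (36) for accident year i.
    V: list of matrices V_0..V_I; Sigma_A_hat[j - 1] is the estimate used
    for development year j = 1..J, with J = I."""
    I = len(V) - 1
    J = I
    ones = np.ones(V[i].shape[0])
    V_sqrt = [sym_sqrt(v) for v in V]
    process, estimation = 0.0, 0.0
    for j in range(I - i + 1, J + 1):
        S = Sigma_A_hat[j - 1]
        # Process variance term: 1' V_i^{1/2} Sigma V_i^{1/2} 1.
        process += ones @ V_sqrt[i] @ S @ V_sqrt[i] @ ones
        # Estimation error term: 1' V_i (sum_l V_l^{1/2} Sigma^{-1} V_l^{1/2})^{-1} V_i 1.
        Sinv = np.linalg.inv(S)
        info = sum(V_sqrt[l] @ Sinv @ V_sqrt[l] for l in range(0, I - j + 1))
        estimation += ones @ V[i] @ np.linalg.inv(info) @ V[i] @ ones
    return process + estimation

# Hypothetical univariate example with I = J = 2.
V = [np.array([[100.0]]), np.array([[110.0]]), np.array([[120.0]])]
Sigma_A_hat = [np.array([[4.0]]), np.array([[1.0]])]
msep_year_1 = alr_msep_single_year(1, V, Sigma_A_hat)
```

For accident year 1 only development year 2 is outstanding, so the process term is 110 · 1 = 110 and the estimation term is 110² / 100 = 121.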

In addition to Estimators 4.1 and 4.2 we have to estimate the cross product terms between the CL estimators and the ALR method estimators, namely (see (31))

\begin{array}{l} E\left[\left(\sum_{n=1}^{K}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}-\sum_{n=1}^{K} C_{i, J}^{(n)}\right)\right. \\ \left.\quad \cdot\left(\sum_{n=K+1}^{N}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}-\sum_{n=K+1}^{N} C_{i, J}^{(n)}\right) \mid \mathcal{D}_{I}^{N}\right] \\ \quad=\mathbf{1}_{K}^{\prime} \cdot \operatorname{Cov}\left(\mathbf{C}_{i, J}^{\mathrm{CL}}, \mathbf{C}_{i, J}^{\mathrm{AD}} \mid \mathcal{D}_{I}^{N}\right) \cdot \mathbf{1}_{N-K} \\ \quad+\mathbf{1}_{K}^{\prime} \cdot\left({\widehat{\mathbf{C}_{i, J}}}^{\mathrm{CL}}-E\left[\mathbf{C}_{i, J}^{\mathrm{CL}} \mid \mathcal{D}_{I}^{N}\right]\right) \\ \quad \cdot\left({\widehat{\mathbf{C}_{i, J}}}^{\mathrm{AD}}-E\left[\mathbf{C}_{i, J}^{\mathrm{AD}} \mid \mathcal{D}_{I}^{N}\right]\right)^{\prime} \cdot \mathbf{1}_{N-K}. \end{array} \tag{37}

That is, this cross product term, again, decouples into a process error part and an estimation error part (first and second term on the right-hand side of (37)).
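The decoupling in (37) can be seen by adding and subtracting the conditional means. The following is a sketch of that step; it uses only that the predictors are functions of the observations $\mathcal{D}_{I}^{N}$, so that they can be taken out of the conditional expectation.

```latex
\begin{aligned}
E\left[\left({\widehat{\mathbf{C}_{i, J}}}^{\mathrm{CL}}-\mathbf{C}_{i, J}^{\mathrm{CL}}\right)
 \cdot\left({\widehat{\mathbf{C}_{i, J}}}^{\mathrm{AD}}-\mathbf{C}_{i, J}^{\mathrm{AD}}\right)^{\prime} \mid \mathcal{D}_{I}^{N}\right]
 &= E\left[\left(\mathbf{C}_{i, J}^{\mathrm{CL}}-E\left[\mathbf{C}_{i, J}^{\mathrm{CL}} \mid \mathcal{D}_{I}^{N}\right]\right)
 \cdot\left(\mathbf{C}_{i, J}^{\mathrm{AD}}-E\left[\mathbf{C}_{i, J}^{\mathrm{AD}} \mid \mathcal{D}_{I}^{N}\right]\right)^{\prime} \mid \mathcal{D}_{I}^{N}\right] \\
 &\quad+\left({\widehat{\mathbf{C}_{i, J}}}^{\mathrm{CL}}-E\left[\mathbf{C}_{i, J}^{\mathrm{CL}} \mid \mathcal{D}_{I}^{N}\right]\right)
 \cdot\left({\widehat{\mathbf{C}_{i, J}}}^{\mathrm{AD}}-E\left[\mathbf{C}_{i, J}^{\mathrm{AD}} \mid \mathcal{D}_{I}^{N}\right]\right)^{\prime} .
\end{aligned}
```

The two mixed terms vanish because $E\left[\mathbf{C}_{i, J}-E\left[\mathbf{C}_{i, J} \mid \mathcal{D}_{I}^{N}\right] \mid \mathcal{D}_{I}^{N}\right]=\mathbf{0}$; the first term on the right is the conditional covariance, and multiplying from the left by $\mathbf{1}_{K}^{\prime}$ and from the right by $\mathbf{1}_{N-K}$ gives (37).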

4.1.1. Conditional cross process variance

In this subsection we provide an estimate of the conditional cross process variance. The following result holds:

Lemma 4.4 (Cross process variance for single accident years) Under Model Assumptions 3.1 the conditional cross process variance for the ultimate claims of accident year i ∈ {1, . . . , I}, given the observations $\mathcal{D}_{I}^{N}$, is given by

\begin{array}{l} \mathbf{1}_{K}^{\prime} \cdot \operatorname{Cov}\left(\mathbf{C}_{i, J}^{\mathrm{CL}}, \mathbf{C}_{i, J}^{\mathrm{AD}} \mid \mathcal{D}_{I}^{N}\right) \cdot \mathbf{1}_{N-K} \\ \quad=\mathbf{1}_{K}^{\prime} \cdot \sum_{j=I-i+1}^{J} \prod_{l=j}^{J-1} \mathrm{D}\left(\mathbf{f}_{l}\right) \cdot \Sigma_{i, j-1}^{\mathrm{CA}} \cdot \mathbf{1}_{N-K}, \end{array} \tag{38}

where

\Sigma_{i, j-1}^{\mathrm{CA}}=E\left[\mathrm{D}\left(\mathbf{C}_{i, j-1}^{\mathrm{CL}}\right)^{1 / 2} \cdot \Sigma_{j-1}^{(C, A)} \mid \mathbf{C}_{i, I-i}\right] \cdot \mathrm{V}_{i}^{1 / 2}. \tag{39}

Proof See appendix, Section 7.1.

If we replace the parameters $\mathbf{f}_{l}$ and $\Sigma_{i, j-1}^{\mathrm{CA}}$ in (38) by their estimates (cf. Section 5), we obtain an estimator of the conditional cross process variance for a single accident year.
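To make the plug-in version of (38) concrete, the following sketch evaluates it in the scalar case K = 1 and N − K = 1, where all matrices reduce to numbers and the estimate of the cross term takes the form sqrt(Ĉ) · Σ̂^(C,A) · sqrt(V). The function name, the argument layout, and the toy numbers are assumptions made for illustration only.

```python
import math

def cross_process_variance(c_latest, v, f, cov_ca, start, J):
    """Scalar plug-in version of (38).

    c_latest: last observed cumulative CL claim C_{i,I-i}
    v:        a priori ultimate estimate V_i of the ALR line
    f:        CL factor estimates, f[j] develops year j to j+1 (j = 0..J-1)
    cov_ca:   cross covariance estimates, cov_ca[j] for j = 0..J-1
    start:    I - i, the last observed development year
    """
    total = 0.0
    c_hat = c_latest                      # projected C_{i,j-1}, starting at j-1 = I-i
    for j in range(start + 1, J + 1):
        tail = math.prod(f[j:J])          # product of f_l for l = j..J-1 (1.0 if empty)
        total += tail * math.sqrt(c_hat) * cov_ca[j - 1] * math.sqrt(v)
        c_hat *= f[j - 1]                 # move the CL projection from j-1 to j
    return total

# Toy inputs (invented): two development steps, accident year with start = 0.
value = cross_process_variance(100.0, 400.0, [2.0, 1.5], [0.5, 0.2], start=0, J=2)
```

The loop carries the chain-ladder projection forward so that each summand uses the projected claim of the preceding development year.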

4.1.2. Conditional cross estimation error

In this subsection we deal with the second term on the right-hand side of (37). Using Lemma 3.3 as well as definitions (26) and (27), we obtain for the cross estimation error of accident year i ∈ {1, . . . , I} the representation

\begin{aligned} \mathbf{1}_{K}^{\prime} \cdot & \left({\widehat{\mathbf{C}_{i, J}}}^{\mathrm{CL}}-E\left[\mathbf{C}_{i, J}^{\mathrm{CL}} \mid \mathcal{D}_{I}^{N}\right]\right) \cdot\left({\widehat{\mathbf{C}_{i, J}}}^{\mathrm{AD}}-E\left[\mathbf{C}_{i, J}^{\mathrm{AD}} \mid \mathcal{D}_{I}^{N}\right]\right)^{\prime} \\ \cdot \mathbf{1}_{N-K} & \\ = & \mathbf{1}_{K}^{\prime} \cdot\left(\prod_{j=I-i}^{J-1} \mathrm{D}\left(\hat{\mathbf{f}}_{j}\right)-\prod_{j=I-i}^{J-1} \mathrm{D}\left(\mathbf{f}_{j}\right)\right) \cdot \mathbf{C}_{i, I-i}^{\mathrm{CL}} \\ & \cdot\left(\sum_{j=I-i+1}^{J}\left({\widehat{\mathbf{X}_{i, j}}}^{\mathrm{AD}}-E\left[\mathbf{X}_{i, j}^{\mathrm{AD}}\right]\right)\right)^{\prime} \cdot \mathbf{1}_{N-K} \\ = & \mathbf{1}_{K}^{\prime} \cdot \mathrm{D}\left(\mathbf{C}_{i, I-i}^{\mathrm{CL}}\right) \cdot\left(\hat{\mathbf{g}}_{i \mid J}-\mathbf{g}_{i \mid J}\right) \\ & \cdot\left(\sum_{j=I-i+1}^{J}\left(\hat{\mathbf{m}}_{j}-\mathbf{m}_{j}\right)\right)^{\prime} \cdot \mathrm{V}_{i} \cdot \mathbf{1}_{N-K}, \end{aligned} \tag{40}

where $\hat{\mathbf{g}}_{i \mid J}$ and $\mathbf{g}_{i \mid J}$ are defined by

\begin{array}{l} \hat{\mathbf{g}}_{i \mid J}=\mathrm{D}\left(\hat{\mathbf{f}}_{I-i}\right) \cdot \ldots \cdot \mathrm{D}\left(\hat{\mathbf{f}}_{J-1}\right) \cdot \mathbf{1}_{K}, \\ \mathbf{g}_{i \mid J}=\mathrm{D}\left(\mathbf{f}_{I-i}\right) \cdot \ldots \cdot \mathrm{D}\left(\mathbf{f}_{J-1}\right) \cdot \mathbf{1}_{K} . \end{array} \tag{41}

In order to derive an estimator of the conditional cross estimation error we would like to calculate the right-hand side of (40). Observe that the realizations of the estimators $\hat{\mathbf{f}}_{j}$ and $\hat{\mathbf{m}}_{j}$ are known at time $I$, but the “true” CL factors $\mathbf{f}_{j}$ and the incremental loss ratios $\mathbf{m}_{j}$ are unknown. Hence (40) cannot be calculated explicitly. In order to determine the conditional cross estimation error we analyze how much the “possible” CL factor estimators $\hat{\mathbf{f}}_{j}$ and the incremental loss ratio estimators $\hat{\mathbf{m}}_{j}$ fluctuate around their “true” mean values $\mathbf{f}_{j}$ and $\mathbf{m}_{j}$. In the following, analogously to Merz and Wüthrich (2008), we measure these volatilities of the estimators $\hat{\mathbf{f}}_{j}$ and $\hat{\mathbf{m}}_{j}$ by means of resampled observations for $\hat{\mathbf{f}}_{j}$ and $\hat{\mathbf{m}}_{j}$. For this purpose we use the conditional resampling approach presented in Buchwalder et al. (2006), Section 4.1.2, to get an estimate for the term (40). By conditionally resampling the observations for $\hat{\mathbf{f}}_{j}$ and $\hat{\mathbf{m}}_{j}$, given the upper triangles $\mathcal{D}_{I}^{N}$, we take into account the possibility that the observations for $\hat{\mathbf{f}}_{j}$ and $\hat{\mathbf{m}}_{j}$ could have been different from the observed values. This means that, given $\mathcal{D}_{I}^{N}$, we generate “new” observations $\tilde{\mathbf{C}}_{i, j+1}^{\mathrm{CL}}$ and $\tilde{\mathbf{X}}_{i, j+1}^{\mathrm{AD}}$ using the formulas (conditional resampling)

\tilde{\mathbf{C}}_{i, j+1}^{\mathrm{CL}}=\mathrm{D}\left(\mathbf{f}_{j}\right) \cdot \mathbf{C}_{i, j}^{\mathrm{CL}}+\mathrm{D}\left(\mathbf{C}_{i, j}^{\mathrm{CL}}\right)^{1 / 2} \cdot \mathrm{D}\left(\tilde{\varepsilon}_{i, j+1}^{\mathrm{CL}}\right) \cdot \sigma_{j}^{\mathrm{CL}} \tag{42}

and

\tilde{\mathbf{X}}_{i, j+1}^{\mathrm{AD}}=\mathrm{V}_{i} \cdot \mathbf{m}_{j+1}+\mathrm{V}_{i}^{1 / 2} \cdot \mathrm{D}\left(\tilde{\varepsilon}_{i, j+1}^{\mathrm{AD}}\right) \cdot \sigma_{j}^{\mathrm{AD}}, \tag{43}

where

\tilde{\varepsilon}_{i, j+1}=\binom{\tilde{\varepsilon}_{i, j+1}^{\mathrm{CL}}}{\tilde{\varepsilon}_{i, j+1}^{\mathrm{AD}}}, \quad \varepsilon_{i, j+1}=\binom{\varepsilon_{i, j+1}^{\mathrm{CL}}}{\varepsilon_{i, j+1}^{\mathrm{AD}}} \tag{44}

are independent and identically distributed copies.

We define

\mathrm{W}_{j}=\left(\sum_{k=0}^{I-j-1} \mathrm{D}\left(\mathbf{C}_{k, j}^{\mathrm{CL}}\right)^{1 / 2}\left(\Sigma_{j}^{(C)}\right)^{-1} \mathrm{D}\left(\mathbf{C}_{k, j}^{\mathrm{CL}}\right)^{1 / 2}\right)^{-1}

and

\mathrm{U}_{j}=\left(\sum_{k=0}^{I-j-1} \mathrm{~V}_{k}^{1 / 2}\left(\Sigma_{j}^{(A)}\right)^{-1} \mathrm{~V}_{k}^{1 / 2}\right)^{-1} .

The resampled representations for the estimates of the multivariate CL factors and the incremental loss ratios are then given by (see (21) and (22))

\hat{\mathbf{f}}_{j}=\mathbf{f}_{j}+\mathrm{W}_{j} \sum_{i=0}^{I-j-1} \mathrm{D}\left(\mathbf{C}_{i, j}^{\mathrm{CL}}\right)^{1 / 2}\left(\Sigma_{j}^{(C)}\right)^{-1} \mathrm{D}\left(\tilde{\varepsilon}_{i, j+1}^{\mathrm{CL}}\right) \sigma_{j}^{\mathrm{CL}}, \tag{45}

and

\hat{\mathbf{m}}_{j+1}=\mathbf{m}_{j+1}+\mathrm{U}_{j} \sum_{i=0}^{I-j-1} \mathrm{~V}_{i}^{1 / 2}\left(\Sigma_{j}^{(A)}\right)^{-1} \mathrm{D}\left(\tilde{\varepsilon}_{i, j+1}^{\mathrm{AD}}\right) \sigma_{j}^{\mathrm{AD}} . \tag{46}
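In the scalar case K = 1 the representation (45) can be checked directly: resampling the claims via (42) and then applying the volume-weighted CL factor (the scalar form of the weighted estimator) gives the same value as (45). The sketch below illustrates this with fixed noise values; the function names and all numbers are assumptions chosen for illustration, and the noise is passed in explicitly rather than drawn from any particular distribution.

```python
import math

def resampled_cl_factor(f, sigma, c_col, eps):
    """Scalar version of (45): f + W * sum_i sqrt(C_i) * sigma^-2 * eps_i * sigma."""
    w = 1.0 / sum(c / sigma**2 for c in c_col)            # scalar W_j
    return f + w * sum(math.sqrt(c) * e / sigma for c, e in zip(c_col, eps))

def cl_factor_from_resampled_claims(f, sigma, c_col, eps):
    """Volume-weighted CL factor computed from claims resampled via (42)."""
    c_next = [f * c + math.sqrt(c) * e * sigma for c, e in zip(c_col, eps)]
    return sum(c_next) / sum(c_col)

# Toy inputs (invented): true factor 1.5, sigma 2, two accident years.
a = resampled_cl_factor(1.5, 2.0, [100.0, 400.0], [0.5, -1.0])
b = cl_factor_from_resampled_claims(1.5, 2.0, [100.0, 400.0], [0.5, -1.0])
```

Both routes return 1.44 for these inputs, so the resampled estimator deviates from the true factor only through the weighted noise term.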

Note that in (45) and (46), as well as in the following exposition, we use the previous notations $\hat{\mathbf{f}}_{j}$ and $\hat{\mathbf{m}}_{j+1}$ for the resampled estimates of the multivariate CL factors and the incremental loss ratios, respectively, to avoid an overloaded notation. Furthermore, given the observations $\mathcal{D}_{I}^{N}$, we denote the conditional probability measure of these resampled multivariate estimates by $P_{\mathcal{D}_{I}^{N}}^{*}$. For a more detailed discussion of this conditional resampling approach we refer to Merz and Wüthrich (2008). We obtain the following lemma:

Lemma 4.5 Under Model Assumptions 3.1 and resampling assumptions (42)-(44) we have:

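a) $\hat{\mathbf{f}}_{0}, \ldots, \hat{\mathbf{f}}_{J-1}$ are independent under $P_{\mathcal{D}_{I}^{N}}^{*}$, $\hat{\mathbf{m}}_{1}, \ldots, \hat{\mathbf{m}}_{J}$ are independent under $P_{\mathcal{D}_{I}^{N}}^{*}$, and $\hat{\mathbf{f}}_{j}$ and $\hat{\mathbf{m}}_{k}$ are independent under $P_{\mathcal{D}_{I}^{N}}^{*}$ if $k \neq j+1$,

b) $E_{\mathcal{D}_{I}^{N}}^{*}\left[\hat{\mathbf{f}}_{j}\right]=\mathbf{f}_{j}$ and $E_{\mathcal{D}_{I}^{N}}^{*}\left[\hat{\mathbf{m}}_{k}\right]=\mathbf{m}_{k}$ for $0 \leq j \leq J-1$ and $1 \leq k \leq J$,

c) $E_{\mathcal{D}_{I}^{N}}^{*}\left[\hat{f}_{j}^{(m)} \cdot \hat{m}_{j+1}^{(n)}\right]=f_{j}^{(m)} \cdot m_{j+1}^{(n)}+\mathrm{T}_{j}(m, n)$,

where $\mathrm{T}_{j}(m, n)$ is the entry $(m, n)$ of the ($K \times(N-K)$)-matrix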

\begin{aligned} \mathrm{T}_{j}= & \mathrm{W}_{j} \sum_{i=0}^{I-j-1} \mathrm{D}\left(\mathbf{C}_{i, j}^{\mathrm{CL}}\right)^{1 / 2}\left(\Sigma_{j}^{(C)}\right)^{-1} \Sigma_{j}^{(C, A)} \\ & \cdot\left(\Sigma_{j}^{(A)}\right)^{-1} \mathrm{~V}_{i}^{1 / 2} \mathrm{U}_{j}. \end{aligned} \tag{47}

Proof See appendix, Section 7.2.
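For K = 1 and N − K = 1 the matrix in (47) is a single number, and the weights simplify considerably: the variances cancel and only the cross covariance and the volumes remain. The following sketch computes the scalar version of (47) term by term so that it can be compared with this simplified form; the function name and the toy inputs are hypothetical.

```python
import math

def t_scalar(c_col, v_col, var_c, var_a, cov_ca):
    """Scalar version of (47) for one development year j."""
    w = 1.0 / sum(c / var_c for c in c_col)   # scalar W_j
    u = 1.0 / sum(v / var_a for v in v_col)   # scalar U_j
    s = sum(math.sqrt(c) * cov_ca * math.sqrt(v) / (var_c * var_a)
            for c, v in zip(c_col, v_col))
    return w * s * u

# Toy inputs (invented): two accident years observed in this development year.
c_col, v_col = [100.0, 400.0], [400.0, 900.0]
t_value = t_scalar(c_col, v_col, var_c=4.0, var_a=9.0, cov_ca=3.0)

# Simplified scalar form: cov_ca * sum_i sqrt(C_i * V_i) / (sum C * sum V).
t_closed = 3.0 * sum(math.sqrt(c * v) for c, v in zip(c_col, v_col)) / (
    sum(c_col) * sum(v_col))
```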

Using Lemma 4.5 we choose for the conditional cross estimation error (40) the estimator

\begin{array}{l} \mathbf{1}_{K}^{\prime} \cdot \mathrm{D}\left(\mathbf{C}_{i, I-i}^{\mathrm{CL}}\right) \cdot E_{\mathcal{D}_{I}^{N}}^{*}\left[\left(\hat{\mathbf{g}}_{i \mid J}-\mathbf{g}_{i \mid J}\right) \cdot\left(\sum_{j=I-i+1}^{J}\left(\hat{\mathbf{m}}_{j}-\mathbf{m}_{j}\right)\right)^{\prime}\right] \\ \cdot \mathrm{V}_{i} \cdot \mathbf{1}_{N-K} \\ \quad=\mathbf{1}_{K}^{\prime} \cdot \mathrm{D}\left(\mathbf{C}_{i, I-i}^{\mathrm{CL}}\right) \cdot \operatorname{Cov}_{\mathcal{D}_{I}^{N}}^{*}\left(\hat{\mathbf{g}}_{i \mid J}, \sum_{j=I-i+1}^{J} \hat{\mathbf{m}}_{j}\right) \\ \quad \cdot \mathrm{V}_{i} \cdot \mathbf{1}_{N-K}. \end{array} \tag{48}

We define the matrix

\begin{aligned} \Psi_{k, i} & =\left(\Psi_{k, i}^{(m, n)}\right)_{m, n}=\operatorname{Cov}_{\mathcal{D}_{I}^{N}}^{*}\left(\hat{\mathbf{g}}_{k \mid J}, \sum_{j=I-i+1}^{J} \hat{\mathbf{m}}_{j}\right) \\ & =\sum_{j=I-i+1}^{J} \operatorname{Cov}_{\mathcal{D}_{I}^{N}}^{*}\left(\hat{\mathbf{g}}_{k \mid J}, \hat{\mathbf{m}}_{j}\right) \end{aligned} \tag{49}

for all $k, i \in\{1, \ldots, I\}$. The following result holds for its components $\Psi_{k, i}^{(m, n)}$:

Lemma 4.6 Under Model Assumptions 3.1 and resampling assumptions (42)–(44) we have for m = 1, . . . , K and n = 1, . . . , N − K

\Psi_{k, i}^{(m, n)}=\sum_{j=(I-i+1) \vee(I-k+1)}^{J} \prod_{r=I-k}^{J-1} f_{r}^{(m)} \frac{1}{f_{j-1}^{(m)}} \mathrm{T}_{j-1}(m, n) .

Proof See appendix, Section 7.3.
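The formula of Lemma 4.6 can be read as follows: only development years that appear both in the CL factor product for accident year k and in the loss ratio sum for accident year i contribute, which explains the lower summation limit. A minimal scalar sketch (K = 1, N − K = 1) is given below; the function name `psi_scalar`, the list-based indexing, and the numbers are assumptions for illustration.

```python
import math

def psi_scalar(k, i, I, J, f, t):
    """Scalar version of Lemma 4.6.

    f[r]: CL factor for development year r to r+1 (r = 0..J-1)
    t[r]: scalar T_r from (47) (r = 0..J-1)
    """
    g_k = math.prod(f[I - k:J])              # product of f_r for r = I-k..J-1
    j_start = max(I - i + 1, I - k + 1)      # (I-i+1) v (I-k+1)
    return sum(g_k / f[j - 1] * t[j - 1] for j in range(j_start, J + 1))

# Toy inputs (invented): I = J = 2.
f, t = [2.0, 1.5], [0.1, 0.2]
psi_22 = psi_scalar(2, 2, 2, 2, f, t)
psi_12 = psi_scalar(1, 2, 2, 2, f, t)
psi_21 = psi_scalar(2, 1, 2, 2, f, t)
```

Note that the result is not symmetric in k and i, since k selects the factor product and i selects the loss ratio sum.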

Putting (31), (37), (38) and (48) together and replacing the parameters by their estimates we motivate the following estimator for the conditional MSEP of a single accident year in the multivariate combined method:

Estimator 4.7 (MSEP for single accident years, combined method) Under Model Assumptions 3.1 we have the estimator for the conditional MSEP of the ultimate claims for a single accident year

\begin{array}{l} \widehat{\operatorname{msep}}_{\Sigma_{n=1}^{N} C_{i, J}^{(n)} \mid \mathcal{D}_{I}^{N}}\left(\sum_{n=1}^{K}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}+\sum_{n=K+1}^{N}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}\right) \\ =\widehat{\operatorname{msep}}_{\Sigma_{n=1}^{K} C_{i, J}^{(n)} \mid \mathcal{D}_{I}^{N}}\left(\sum_{n=1}^{K}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}\right) \\ +\widehat{\operatorname{msep}}_{\Sigma_{n=K+1}^{N} C_{i, J}^{(n)} \mid \mathcal{D}_{I}^{N}}\left(\sum_{n=K+1}^{N}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}\right) \\ +2 \cdot \mathbf{1}_{K}^{\prime} \cdot \sum_{j=I-i+1}^{J} \prod_{l=j}^{J-1} \mathrm{D}\left(\hat{\mathbf{f}}_{l}\right) \cdot \hat{\Sigma}_{i, j-1}^{\mathrm{CA}} \cdot \mathbf{1}_{N-K} \\ +2 \cdot \mathbf{1}_{K}^{\prime} \cdot \mathrm{D}\left(\mathbf{C}_{i, I-i}^{\mathrm{CL}}\right) \cdot\left(\hat{\Psi}_{i, i}^{(m, n)}\right)_{m, n} \cdot \mathrm{~V}_{i} \cdot \mathbf{1}_{N-K}, \end{array} \tag{50}

with

\hat{\Sigma}_{i, j-1}^{\mathbf{C A}}=\mathrm{D}\left(\widehat{\mathbf{C}_{i, j-1}}{ }^{\mathrm{CL}}\right)^{1 / 2} \cdot \hat{\Sigma}_{j-1}^{(C, A)} \cdot \mathrm{V}_{i}^{1 / 2}, \tag{51}

\hat{\Psi}_{k, i}^{(m, n)}=\hat{g}_{k \mid J}^{(m)} \sum_{j=(I-i+1) \vee(I-k+1)}^{J} \frac{1}{\hat{f}_{j-1}^{(m)}} \hat{\mathrm{T}}_{j-1}(m, n) . \tag{52}

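Thereby, the first two terms on the right-hand side of (50) are given by (32) and (36), $\hat{g}_{k \mid J}^{(m)}$ denotes the $m$th coordinate of $\hat{\mathbf{g}}_{k \mid J}$ (cf. (41)), and the parameter estimates $\hat{\Sigma}_{j-1}^{(C, A)}$ as well as $\hat{\mathrm{T}}_{j-1}(m, n)$ (entry $(m, n)$ of the estimate $\hat{\mathrm{T}}_{j-1}$ for the ($K \times(N-K)$)-matrix $\mathrm{T}_{j-1}$) are given in Section 5.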

4.2. Conditional MSEP for aggregated accident years

Now we derive an estimator of the conditional MSEP (30) for aggregated accident years. To this end we consider two different accident years $1 \leq i<l \leq I$. We know that the ultimate claims of accident years $i$ and $l$ are independent, but we also have to take into account the dependence of their estimators. The conditional MSEP for two aggregated accident years $i$ and $l$ is given by

\begin{aligned} & \operatorname{msep}_{\Sigma_{n=1}^{N}\left(C_{i, J}^{(n)}+C_{l, J}^{(n)}\right) \mid \mathcal{D}_{I}^{N}}\left(\sum_{n=1}^{K}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}+\sum_{n=K+1}^{N}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}+\sum_{n=1}^{K}{\widehat{C_{l, J}^{(n)}}}^{\mathrm{CL}}+\sum_{n=K+1}^{N}{\widehat{C_{l, J}^{(n)}}}^{\mathrm{AD}}\right) \\ & \quad=\operatorname{msep}_{\Sigma_{n=1}^{N} C_{i, J}^{(n)} \mid \mathcal{D}_{I}^{N}}\left(\sum_{n=1}^{K}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}+\sum_{n=K+1}^{N}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}\right) \\ & \qquad+\operatorname{msep}_{\Sigma_{n=1}^{N} C_{l, J}^{(n)} \mid \mathcal{D}_{I}^{N}}\left(\sum_{n=1}^{K}{\widehat{C_{l, J}^{(n)}}}^{\mathrm{CL}}+\sum_{n=K+1}^{N}{\widehat{C_{l, J}^{(n)}}}^{\mathrm{AD}}\right) \\ & \qquad+2 \cdot E\left[\left(\sum_{n=1}^{K}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}+\sum_{n=K+1}^{N}{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}-\sum_{n=1}^{N} C_{i, J}^{(n)}\right)\right. \\ & \qquad\quad\left.\cdot\left(\sum_{n=1}^{K}{\widehat{C_{l, J}^{(n)}}}^{\mathrm{CL}}+\sum_{n=K+1}^{N}{\widehat{C_{l, J}^{(n)}}}^{\mathrm{AD}}-\sum_{n=1}^{N} C_{l, J}^{(n)}\right) \mid \mathcal{D}_{I}^{N}\right] . \end{aligned} \tag{53}

The first two terms on the right-hand side of (53) are the conditional prediction errors for the two single accident years 1 ≤ i < l ≤ I, respectively, which we estimate by Estimator 4.7. For the third term on the right-hand side of (53) we obtain the decomposition

\begin{align} &E\left[\left(\sum_{n=1}^K{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}+\sum_{n=K+1}^N{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}-\sum_{n=1}^N C_{i, J}^{(n)}\right)\right. \\ &\quad\left.\cdot\left(\sum_{n=1}^K{\widehat{C_{l, J}^{(n)}}}^{\mathrm{CL}}+\sum_{n=K+1}^N{\widehat{C_{l, J}^{(n)}}}^{\mathrm{AD}}-\sum_{n=1}^N C_{l, J}^{(n)}\right) \mid \mathcal{D}_I^N\right] \\ &\qquad=E\left[\left(\sum_{n=1}^K{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}-\sum_{n=1}^K C_{i, J}^{(n)}\right)\right. \\ &\qquad\qquad\left.\cdot\left(\sum_{n=K+1}^N{\widehat{C_{l, J}^{(n)}}}^{\mathrm{AD}}-\sum_{n=K+1}^N C_{l, J}^{(n)}\right) \mid \mathcal{D}_I^N\right] \\ &\qquad\quad+E\left[\left(\sum_{n=K+1}^N{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}-\sum_{n=K+1}^N C_{i, J}^{(n)}\right)\right. \\ &\qquad\qquad\left.\cdot\left(\sum_{n=1}^K{\widehat{C_{l, J}^{(n)}}}^{\mathrm{CL}}-\sum_{n=1}^K C_{l, J}^{(n)}\right) \mid \mathcal{D}_I^N\right] \\ &\qquad\quad+E\left[\left(\sum_{n=1}^K{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}-\sum_{n=1}^K C_{i, J}^{(n)}\right)\right. \\ &\qquad\qquad\left.\cdot\left(\sum_{n=1}^K{\widehat{C_{l, J}^{(n)}}}^{\mathrm{CL}}-\sum_{n=1}^K C_{l, J}^{(n)}\right) \mid \mathcal{D}_I^N\right] \\ &\qquad\quad+E\left[\left(\sum_{n=K+1}^N{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}-\sum_{n=K+1}^N C_{i, J}^{(n)}\right)\right. \\ &\qquad\qquad\left.\cdot\left(\sum_{n=K+1}^N{\widehat{C_{l, J}^{(n)}}}^{\mathrm{AD}}-\sum_{n=K+1}^N C_{l, J}^{(n)}\right) \mid \mathcal{D}_I^N\right]. \end{align} \tag{54}

Using the independence of different accident years we obtain for the first two terms on the right-hand side of (54)

\begin{aligned} & E\left[\left(\sum_{n=1}^K{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}-\sum_{n=1}^K C_{i, J}^{(n)}\right)\right. \\ & \left.\quad \cdot\left(\sum_{n=K+1}^N{\widehat{C_{l, J}^{(n)}}}^{\mathrm{AD}}-\sum_{n=K+1}^N C_{l, J}^{(n)}\right) \mid \mathcal{D}_I^N\right] \\ & \quad=\mathbf{1}_K^{\prime} \cdot\left(\widehat{\mathbf{C}}_{i, J}^{\mathrm{CL}}-E\left[\mathbf{C}_{i, J}^{\mathrm{CL}} \mid \mathcal{D}_I^N\right]\right) \\ & \qquad \cdot\left(\widehat{\mathbf{C}}_{l, J}^{\mathrm{AD}}-E\left[\mathbf{C}_{l, J}^{\mathrm{AD}} \mid \mathcal{D}_I^N\right]\right)^{\prime} \cdot \mathbf{1}_{N-K} \\ & \quad=\mathbf{1}_K^{\prime} \cdot\left(\prod_{j=I-i}^{J-1} \mathrm{D}\left(\hat{\mathbf{f}}_j\right)-\prod_{j=I-i}^{J-1} \mathrm{D}\left(\mathbf{f}_j\right)\right)^{\prime} \cdot \mathbf{C}_{i, I-i}^{\mathrm{CL}} \\ & \qquad \cdot\left(\sum_{j=I-l+1}^J\left(\widehat{\mathbf{X}}_{l, j}^{\mathrm{AD}}-E\left[\mathbf{X}_{l, j}^{\mathrm{AD}}\right]\right)\right)^{\prime} \cdot \mathbf{1}_{N-K} \\ & =\mathbf{1}_K^{\prime} \cdot \mathrm{D}\left(\mathbf{C}_{i, I-i}^{\mathrm{CL}}\right) \cdot\left(\hat{\mathbf{g}}_{i \mid J}-\mathbf{g}_{i \mid J}\right) \\ & \qquad \cdot\left(\sum_{j=I-l+1}^J\left(\hat{\mathbf{m}}_j-\mathbf{m}_j\right)\right)^{\prime} \cdot \mathrm{V}_l \cdot \mathbf{1}_{N-K}, \end{aligned} \tag{55}

and analogously

\begin{aligned} & E\left[\left(\sum_{n=K+1}^N{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}-\sum_{n=K+1}^N C_{i, J}^{(n)}\right)\right. \\ &\quad \left.\cdot\left(\sum_{n=1}^K{\widehat{C_{l, J}^{(n)}}}^{\mathrm{CL}}-\sum_{n=1}^K C_{l, J}^{(n)}\right) \mid \mathcal{D}_I^N\right] \\ &\qquad =\mathbf{1}_K^{\prime} \cdot \mathrm{D}\left(\mathbf{C}_{l, I-l}^{\mathrm{CL}}\right) \cdot\left(\hat{\mathbf{g}}_{l \mid J}-\mathbf{g}_{l \mid J}\right) \\ &\qquad \quad \cdot\left(\sum_{j=I-i+1}^J\left(\hat{\mathbf{m}}_j-\mathbf{m}_j\right)\right)^{\prime} \cdot \mathrm{V}_i \cdot \mathbf{1}_{N-K} . \end{aligned} \tag{56}

Under the conditional resampling measure $P_{\mathcal{D}_{I}^{N}}^{*}$ these two terms are estimated, for $(s, t)=(i, l)$ and $(s, t)=(l, i)$, by (see also Lemma 4.6)

\begin{aligned} & \mathbf{1}_K^{\prime} \cdot \mathrm{D}\left(\mathbf{C}_{s, I-s}^{\mathrm{CL}}\right) \cdot E_{\mathcal{D}_I^N}^*\left[\left(\hat{\mathbf{g}}_{s \mid J}-\mathbf{g}_{s \mid J}\right) \cdot\left(\sum_{j=I-t+1}^J\left(\hat{\mathbf{m}}_j-\mathbf{m}_j\right)\right)^{\prime}\right] \\ & \cdot \mathrm{V}_t \cdot \mathbf{1}_{N-K} \\ & \quad=\mathbf{1}_K^{\prime} \cdot \mathrm{D}\left(\mathbf{C}_{s, I-s}^{\mathrm{CL}}\right) \cdot\left(\Psi_{s, t}^{(m, n)}\right)_{m, n} \cdot \mathrm{V}_t \cdot \mathbf{1}_{N-K} . \end{aligned}

Now we consider the third term on the right-hand side of (54). Again, using the independence of different accident years we obtain

\begin{aligned} & E\left[\left(\sum_{n=1}^K{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}-\sum_{n=1}^K C_{i, J}^{(n)}\right)\right. \\ &\quad \left.\cdot\left(\sum_{n=1}^K{\widehat{C_{l, J}^{(n)}}}^{\mathrm{CL}}-\sum_{n=1}^K C_{l, J}^{(n)}\right) \mid \mathcal{D}_I^N\right] \\ &\qquad =\mathbf{1}_K^{\prime} \cdot\left(\widehat{\mathbf{C}}_{i, J}^{\mathrm{CL}}-E\left[\mathbf{C}_{i, J}^{\mathrm{CL}} \mid \mathcal{D}_I^N\right]\right) \\ &\qquad \quad \cdot\left({\widehat{\mathbf{C}_{l, J}}}^{\mathrm{CL}}-E\left[\mathbf{C}_{l, J}^{\mathrm{CL}} \mid \mathcal{D}_I^N\right]\right)^{\prime} \cdot \mathbf{1}_K \\ &\qquad =\mathbf{1}_K^{\prime} \cdot \mathrm{D}\left(\mathbf{C}_{i, I-i}^{\mathrm{CL}}\right) \cdot\left(\hat{\mathbf{g}}_{i \mid J}-\mathbf{g}_{i \mid J}\right) \\ &\qquad \quad \cdot\left(\hat{\mathbf{g}}_{l \mid J}-\mathbf{g}_{l \mid J}\right)^{\prime} \cdot \mathrm{D}\left(\mathbf{C}_{l, I-l}^{\mathrm{CL}}\right) \cdot \mathbf{1}_K . \end{aligned} \tag{57}

This term is estimated by

\begin{aligned} & \mathbf{1}_K^{\prime} \cdot \mathrm{D}\left(\mathbf{C}_{i, I-i}^{\mathrm{CL}}\right) \cdot E_{\mathcal{D}_I^N}^*\left[\left(\hat{\mathbf{g}}_{i \mid J}-\mathbf{g}_{i \mid J}\right)\right. \\ & \left.\cdot\left(\hat{\mathbf{g}}_{l \mid J}-\mathbf{g}_{l \mid J}\right)^{\prime}\right] \cdot \mathrm{D}\left(\mathbf{C}_{l, I-l}^{\mathrm{CL}}\right) \cdot \mathbf{1}_K \\ &\quad =\mathbf{1}_K^{\prime} \cdot \mathrm{D}\left(\mathbf{C}_{i, I-i}^{\mathrm{CL}}\right) \cdot\left(\Delta_{i, J}^{(n, m)}\right)_{1 \leq n, m \leq K} \\ & \qquad \cdot \mathrm{D}\left(\mathbf{C}_{l, I-l}^{\mathrm{CL}}\right) \cdot \prod_{k=I-l}^{I-i-1} \mathrm{D}\left(\mathbf{f}_k\right) \cdot \mathbf{1}_K, \end{aligned} \tag{58}

where $\Delta_{i, J}^{(n, m)}$ is estimated by

\begin{aligned} \hat{\Delta}_{i, J}^{(n, m)}= & \prod_{l=I-i}^{J-1}\left(\hat{f}_l^{(n)} \cdot \hat{f}_l^{(m)}+\sum_{k=0}^{I-l-1} \hat{\mathbf{a}}_{n \mid l}^k \cdot \hat{\Sigma}_l^{(C)} \cdot\left(\hat{\mathbf{a}}_{m \mid l}^k\right)^{\prime}\right) \\ & -\prod_{l=I-i}^{J-1} \hat{f}_l^{(n)} \cdot \hat{f}_l^{(m)}. \end{aligned} \tag{59}

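The parameter estimates $\hat{\mathbf{a}}_{n \mid l}^{k}$ and $\hat{\mathbf{a}}_{m \mid l}^{k}$ are the $n$th and $m$th row of (35), and the parameter estimate $\hat{\Sigma}_{l}^{(C)}$ is given in Section 5 (see also Merz and Wüthrich (2008)).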

Finally, we obtain for the last term on the right-hand side of (54)

\begin{aligned} E & {\left[\left(\sum_{n=K+1}^N{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}-\sum_{n=K+1}^N C_{i, J}^{(n)}\right)\right.} \\ &\quad \left.\cdot\left(\sum_{n=K+1}^N{\widehat{C_{l, J}^{(n)}}}^{\mathrm{AD}}-\sum_{n=K+1}^N C_{l, J}^{(n)}\right) \mid \mathcal{D}_I^N\right] \\ &\qquad =\mathbf{1}_{N-K}^{\prime} \cdot\left(\widehat{\mathbf{C}}_{i, J}^{\mathrm{AD}}-E\left[\mathbf{C}_{i, J}^{\mathrm{AD}} \mid \mathcal{D}_I^N\right]\right) \\ &\qquad \quad \cdot\left(\widehat{\mathbf{C}}_{l, J}^{\mathrm{AD}}-E\left[\mathbf{C}_{l, J}^{\mathrm{AD}} \mid \mathcal{D}_I^N\right]\right)^{\prime} \cdot \mathbf{1}_{N-K}, \end{aligned} \tag{60}

which is estimated by (see also Merz and Wüthrich (2009))

\begin{aligned} & \mathbf{1}_{N-K}^{\prime} \cdot E\left[\left({\widehat{\mathbf{C}_{i, J}}}^{\mathrm{AD}}-E\left[\mathbf{C}_{i, J}^{\mathrm{AD}} \mid \mathcal{D}_I^N\right]\right)\right. \\ &\quad \left.\cdot\left({\widehat{\mathbf{C}_{l, J}}}^{\mathrm{AD}}-E\left[\mathbf{C}_{l, J}^{\mathrm{AD}} \mid \mathcal{D}_I^N\right]\right)^{\prime}\right] \cdot \mathbf{1}_{N-K} \\ &\qquad =\mathbf{1}_{N-K}^{\prime} \cdot \mathrm{V}_i \\ & \qquad \quad \cdot\left[\sum_{j=I-i+1}^J\left(\sum_{k=0}^{I-j} \mathrm{~V}_k^{1 / 2} \cdot\left(\Sigma_{j-1}^{(A)}\right)^{-1} \cdot \mathrm{~V}_k^{1 / 2}\right)^{-1}\right] \\ & \qquad \quad \cdot \mathrm{V}_l \cdot \mathbf{1}_{N-K}. \end{aligned} \tag{61}

Putting all the terms together and replacing the parameters by their estimates we obtain the following estimator for the conditional MSEP of aggregated accident years in the multivariate combined method:

Estimator 4.8 (MSEP for aggregated accident years, combined method) Under Model Assumptions 3.1 we have the estimator for the conditional MSEP of the ultimate claims for aggregated accident years

\begin{aligned} &\widehat{\operatorname{msep}}_{\Sigma_i \Sigma_n C_{i, J}^{(n)} \mid \mathcal{D}_I^N}\left(\sum_{i=1}^I \sum_{n=1}^K{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}+\sum_{i=1}^I \sum_{n=K+1}^N{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}\right) \\ &\quad= \sum_{i=1}^I \widehat{\operatorname{msep}}_{\Sigma_n C_{i, J}^{(n)} \mid \mathcal{D}_I^N}\left(\sum_{n=1}^K{\widehat{C_{i, J}^{(n)}}}^{\mathrm{CL}}+\sum_{n=K+1}^N{\widehat{C_{i, J}^{(n)}}}^{\mathrm{AD}}\right) \\ &\qquad +2 \cdot \sum_{1 \leq i<l \leq I} \mathbf{1}_K^{\prime} \cdot \mathrm{D}\left(\mathbf{C}_{i, I-i}^{\mathrm{CL}}\right) \cdot\left(\hat{\Psi}_{i, l}^{(m, n)}\right)_{m, n} \cdot \mathrm{V}_l \cdot \mathbf{1}_{N-K} \\ &\qquad +2 \cdot \sum_{1 \leq i<l \leq I} \mathbf{1}_K^{\prime} \cdot \mathrm{D}\left(\mathbf{C}_{l, I-l}^{\mathrm{CL}}\right) \cdot\left(\hat{\Psi}_{l, i}^{(m, n)}\right)_{m, n} \cdot \mathrm{V}_i \cdot \mathbf{1}_{N-K} \\ & \qquad+2 \cdot \sum_{1 \leq i<l \leq I} \mathbf{1}_K^{\prime} \cdot \mathrm{D}\left(\mathbf{C}_{i, I-i}^{\mathrm{CL}}\right) \cdot\left(\hat{\Delta}_{i, J}^{(m, n)}\right)_{m, n} \\ &\qquad \cdot \mathrm{D}\left(\mathbf{C}_{l, I-l}^{\mathrm{CL}}\right) \cdot \prod_{j=I-l}^{I-i-1} \mathrm{D}\left(\hat{\mathbf{f}}_j\right) \cdot \mathbf{1}_K \\ &\qquad +2 \cdot \sum_{1 \leq i<l \leq I} \mathbf{1}_{N-K}^{\prime} \cdot \mathrm{V}_i \\ &\qquad \cdot \sum_{j=I-i+1}^J\left(\sum_{k=0}^{I-j} \mathrm{V}_k^{1 / 2} \cdot\left(\hat{\Sigma}_{j-1}^{(A)}\right)^{-1} \cdot \mathrm{V}_k^{1 / 2}\right)^{-1} \\ &\qquad \cdot \mathrm{V}_l \cdot \mathbf{1}_{N-K} . \end{aligned} \tag{62}
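Structurally, (62) is the sum of the single accident year estimates plus twice the sum, over all pairs i < l, of four covariance-type terms. The following sketch shows only this bookkeeping; the function `aggregate_msep`, the callable `cross_term`, and the numbers are hypothetical placeholders, and the cross terms themselves would have to be computed from the Ψ, Δ and ALR expressions above.

```python
def aggregate_msep(single_msep, cross_term):
    """Bookkeeping of (62).

    single_msep: dict mapping accident year to its single-year MSEP estimate
    cross_term:  function (i, l) returning the sum of the four
                 covariance-type terms of (62) for the pair i < l
    """
    years = sorted(single_msep)
    total = sum(single_msep.values())
    for pos, i in enumerate(years):
        for l in years[pos + 1:]:            # all pairs with i < l
            total += 2.0 * cross_term(i, l)
    return total

# Toy inputs (invented): three accident years and a constant cross term.
total = aggregate_msep({1: 10.0, 2: 20.0, 3: 30.0}, lambda i, l: 1.0)
```

With three accident years there are three pairs, so the toy total is 60 + 2 · 3 = 66.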

4.3. Conditional MSEP with estimators (24) and (25)

In some cases, it may be more convenient to use estimators (24) and (25) to estimate $\mathbf{f}_{j}$ and $\mathbf{m}_{j}$, respectively, instead of (21) and (22). Estimators (24) and (25) do not reflect the correlation among subportfolios and are thus simpler to calculate but, being less than optimal, will have greater MSEP than estimators (21) and (22).

The changes that occur when estimators (24) and (25) are used are noted here. In Estimator 4.1, (35) becomes

\hat{\mathbf{A}}_l^k=\left(\sum_{i=0}^{I-l-1} \mathrm{D}\left(\mathbf{C}_{i, l}^{\mathrm{CL}}\right)\right)^{-1} \cdot \mathrm{D}\left({\widehat{\mathbf{C}_{k, l}}}^{\mathrm{CL}}\right)^{1 / 2}.

In Estimator 4.2, the last term of (36) becomes

\begin{aligned} \mathbf{1}_{N-K}^{\prime} \cdot \mathrm{V}_i \cdot[ & \sum_{j=I-i+1}^J\left(\sum_{l=0}^{I-j} \mathrm{~V}_l\right)^{-1} \\ & \left.\cdot\left(\sum_{l=0}^{I-j} \mathrm{~V}_l^{1 / 2} \cdot \hat{\Sigma}_{j-1}^{(A)} \cdot \mathrm{V}_l^{1 / 2}\right) \cdot\left(\sum_{l=0}^{I-j} \mathrm{~V}_l\right)^{-1}\right] \\ & \cdot \mathrm{V}_i \cdot \mathbf{1}_{N-K}. \end{aligned}

Furthermore, $\mathrm{W}_{j}$ becomes

\left(\sum_{k=0}^{I-j-1} \mathrm{D}\left(\mathbf{C}_{k, j}^{\mathrm{CL}}\right)\right)^{-1},

$\mathrm{U}_{j}$ becomes

\left(\sum_{k=0}^{I-j-1} \mathrm{~V}_{k}\right)^{-1},

and $\mathrm{T}_{j}$ becomes

\mathrm{W}_{j} \sum_{i=0}^{I-j-1} \mathrm{D}\left(\mathbf{C}_{i, j}^{\mathrm{CL}}\right)^{1 / 2} \Sigma_{j}^{(C, A)} \mathrm{V}_{i}^{1 / 2} \mathrm{U}_{j},

with analogous changes to their estimators. The right-hand side of (61) and the expression to the right of the first summation sign in the last term of (62) become

\begin{aligned} \mathbf{1}_{N-K}^{\prime} \cdot \mathrm{V}_{i} \cdot & {\left[\sum_{j=I-i+1}^{J}\left(\sum_{k=0}^{I-j} \mathrm{~V}_{k}\right)^{-1}\right.} \\ & \left.\cdot\left(\sum_{k=0}^{I-j} \mathrm{~V}_{k}^{1 / 2} \cdot \hat{\Sigma}_{j-1}^{(A)} \cdot \mathrm{V}_{k}^{1 / 2}\right) \cdot\left(\sum_{k=0}^{I-j} \mathrm{~V}_{k}\right)^{-1}\right] \\ & \cdot \mathrm{V}_{l} \cdot \mathbf{1}_{N-K} . \end{aligned}

5. Parameter estimation

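Estimation of $\mathbf{f}_{j}$ and $\mathbf{m}_{j}$. As starting values for the iteration we use the unbiased estimators $\hat{\mathbf{f}}_{j}^{(0)}$ and $\hat{\mathbf{m}}_{j}^{(0)}$ defined by (24) and (25). From $\hat{\mathbf{f}}_{j-1}^{(s-1)}$ and $\hat{\mathbf{m}}_{j}^{(s-1)}$ we derive the estimates $\hat{\Sigma}_{j-1}^{(C)(s)}$ and $\hat{\Sigma}_{j-1}^{(A)(s)}$ of the covariance matrices $\Sigma_{j-1}^{(C)}$ and $\Sigma_{j-1}^{(A)}$ for $s \geq 1$ (see estimators (64) and (67) below). Then these estimates $\hat{\Sigma}_{j-1}^{(C)(s)}$ and $\hat{\Sigma}_{j-1}^{(A)(s)}$ are used to determine $\hat{\mathbf{f}}_{j-1}^{(s)}$ and $\hat{\mathbf{m}}_{j}^{(s)}$: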

\begin{aligned} \hat{\mathbf{f}}_{j-1}^{(s)}= & \left(\sum_{i=0}^{I-j} \mathrm{D}\left(\mathbf{C}_{i, j-1}^{\mathrm{CL}}\right)^{1 / 2}\left(\hat{\Sigma}_{j-1}^{(C)(s)}\right)^{-1} \mathrm{D}\left(\mathbf{C}_{i, j-1}^{\mathrm{CL}}\right)^{1 / 2}\right)^{-1} \\ & \cdot \sum_{i=0}^{I-j} \mathrm{D}\left(\mathbf{C}_{i, j-1}^{\mathrm{CL}}\right)^{1 / 2}\left(\hat{\Sigma}_{j-1}^{(C)(s)}\right)^{-1} \mathrm{D}\left(\mathbf{C}_{i, j-1}^{\mathrm{CL}}\right)^{-1 / 2} \cdot \mathbf{C}_{i, j}^{\mathrm{CL}} \end{aligned} \tag{63}

and

\begin{aligned} \hat{\mathbf{m}}_{j}^{(s)}= & \left(\sum_{i=0}^{I-j} \mathrm{~V}_{i}^{1 / 2} \cdot\left(\hat{\Sigma}_{j-1}^{(A)(s)}\right)^{-1} \cdot \mathrm{~V}_{i}^{1 / 2}\right)^{-1} \\ & \cdot \sum_{i=0}^{I-j} \mathrm{~V}_{i}^{1 / 2} \cdot\left(\hat{\Sigma}_{j-1}^{(A)(s)}\right)^{-1} \cdot \mathrm{~V}_{i}^{-1 / 2} \cdot \mathbf{X}_{i, j}^{\mathrm{AD}} . \end{aligned}

This algorithm is then iterated until it has sufficiently converged.

Estimation of the covariance matrices. The covariance matrices $\Sigma_{j-1}^{(C)}$ and $\Sigma_{j-1}^{(A)}$ are estimated iteratively from the data. For the covariance matrices $\Sigma_{j-1}^{(C)}$ we use the estimator proposed by Merz and Wüthrich (2008)

\begin{aligned} \hat{\Sigma}_{j-1}^{(C)(s)}= & Q_{j} \odot \sum_{i=0}^{I-j} \mathrm{D}\left(\mathbf{C}_{i, j-1}^{\mathrm{CL}}\right)^{1 / 2} \cdot\left(\mathbf{F}_{i, j}^{\mathrm{CL}}-\hat{\mathbf{f}}_{j-1}^{(s-1)}\right) \\ & \cdot\left(\mathbf{F}_{i, j}^{\mathrm{CL}}-\hat{\mathbf{f}}_{j-1}^{(s-1)}\right)^{\prime} \cdot \mathrm{D}\left(\mathbf{C}_{i, j-1}^{\mathrm{CL}}\right)^{1 / 2}, \end{aligned} \tag{64}

where ⊙ denotes the Hadamard product (entrywise product of two matrices),

\begin{aligned} \mathbf{F}_{i, j}^{\mathrm{CL}} & =\mathrm{D}\left(\mathbf{C}_{i, j-1}^{\mathrm{CL}}\right)^{-1} \cdot \mathbf{C}_{i, j}^{\mathrm{CL}} \quad \text { and } \\ Q_{j} & =\left(\frac{1}{I-j-1+w_{j}^{(n, m)}}\right)_{1 \leq n, m \leq K} \end{aligned} \tag{65}

with

w_{j}^{(n, m)}=\frac{\left(\sum_{l=0}^{I-j} \sqrt{C_{l, j-1}^{(n)}} \cdot \sqrt{C_{l, j-1}^{(m)}}\right)^{2}}{\sum_{l=0}^{I-j} C_{l, j-1}^{(n)} \cdot \sum_{l=0}^{I-j} C_{l, j-1}^{(m)}} . \tag{66}

For more details on this estimator see Merz and Wüthrich (2008), Section 5.
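In the scalar case K = 1 the weight in (66) equals one, so that the factor in (65) becomes 1/(I − j) and (64) reduces to a weighted sum of squared deviations of the individual development factors. The sketch below implements (66) for two columns and the scalar form of (64); the function names and the toy triangle columns are assumptions for illustration.

```python
import math

def weight_w(col_n, col_m):
    """w_j^{(n,m)} from (66) for two CL lines over the same accident years."""
    num = sum(math.sqrt(a) * math.sqrt(b) for a, b in zip(col_n, col_m)) ** 2
    return num / (sum(col_n) * sum(col_m))

def sigma_c_scalar(c_prev, c_next, f_hat):
    """Scalar version of (64) for one development step j-1 to j."""
    n_terms = len(c_prev)                                   # I - j + 1 accident years
    q = 1.0 / (n_terms - 2 + weight_w(c_prev, c_prev))      # 1 / (I - j - 1 + w)
    return q * sum(c * (cn / c - f_hat) ** 2 for c, cn in zip(c_prev, c_next))

# Toy columns (invented): three accident years.
c_prev = [100.0, 400.0, 250.0]
c_next = [150.0, 640.0, 360.0]
f_hat = sum(c_next) / sum(c_prev)
sigma2 = sigma_c_scalar(c_prev, c_next, f_hat)
w_mixed = weight_w([100.0, 400.0], [400.0, 100.0])
```

For two different lines the weight lies below one unless the columns are proportional; here `w_mixed` equals 0.64.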

For the covariance matrices $\Sigma_{j-1}^{(A)}$ we use the iterative estimation procedure suggested by Merz and Wüthrich (2009) ($s \geq 1$)

\begin{aligned} \hat{\Sigma}_{j-1}^{(A)(s)}= & \frac{1}{I-j} \cdot \sum_{i=0}^{I-j} \mathrm{~V}_{i}^{-1 / 2} \cdot\left(\mathbf{X}_{i, j}^{\mathrm{AD}}-\mathrm{V}_{i} \cdot \hat{\mathbf{m}}_{j}^{(s-1)}\right) \\ & \cdot\left(\mathbf{X}_{i, j}^{\mathrm{AD}}-\mathrm{V}_{i} \cdot \hat{\mathbf{m}}_{j}^{(s-1)}\right)^{\prime} \cdot \mathrm{V}_{i}^{-1 / 2}. \end{aligned} \tag{67}

For more details on this estimator see Merz and Wüthrich (2009), Section 5.

Motivated by estimators (64) and (67) for matrices $\Sigma_{j-1}^{(C)}$ and $\Sigma_{j-1}^{(A)}$ we propose for the covariance matrix $\Sigma_{j-1}^{(C, A)}$ the estimator

\begin{aligned} \hat{\Sigma}_{j-1}^{(C, A)}= & \frac{1}{I-j} \cdot \sum_{i=0}^{I-j} \mathrm{D}\left(\mathbf{C}_{i, j-1}^{\mathrm{CL}}\right)^{1 / 2} \cdot\left(\mathbf{F}_{i, j}^{\mathrm{CL}}-\hat{\mathbf{f}}_{j-1}\right) \\ & \cdot\left(\mathbf{X}_{i, j}^{\mathrm{AD}}-\mathrm{V}_{i} \cdot \hat{\mathbf{m}}_{j}\right)^{\prime} \cdot \mathrm{V}_{i}^{-1 / 2}. \end{aligned} \tag{68}
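The estimator (68) pairs the scaled CL residual of each accident year with the scaled ALR residual of the same accident year and development step. A scalar sketch (K = 1, N − K = 1) is given below; the function name, the argument names, and the toy data are hypothetical.

```python
import math

def sigma_ca_scalar(c_prev, c_next, x_incr, v, f_hat, m_hat):
    """Scalar version of (68) for one development step j-1 to j."""
    n_terms = len(c_prev)                                   # I - j + 1 accident years
    total = 0.0
    for c, cn, x, vi in zip(c_prev, c_next, x_incr, v):
        cl_residual = math.sqrt(c) * (cn / c - f_hat)       # sqrt(C) * (F - f_hat)
        alr_residual = (x - vi * m_hat) / math.sqrt(vi)     # (X - V * m_hat) / sqrt(V)
        total += cl_residual * alr_residual
    return total / (n_terms - 1)                            # factor 1 / (I - j)

# Toy data (invented): two accident years.
c_prev, c_next = [100.0, 400.0], [160.0, 560.0]
x_incr, v = [50.0, 80.0], [400.0, 900.0]
f_hat = sum(c_next) / sum(c_prev)       # 1.44
m_hat = sum(x_incr) / sum(v)            # 0.1
cov_ca = sigma_ca_scalar(c_prev, c_next, x_incr, v, f_hat, m_hat)
```

At least two accident years are needed here, since the normalizing factor divides by the number of terms minus one.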

Estimation of $\Sigma_{i, j}^{\mathrm{CA}}$ and $\mathrm{T}_{j}$. With these estimates we obtain as estimates of the matrices $\Sigma_{i, j}^{\mathrm{CA}}$ and $\mathrm{T}_{j}$

\begin{aligned} {\widehat{\Sigma_{i, j}}}^{\mathbf{C A}}= & \mathrm{D}\left({\widehat{\mathbf{C}_{i, j}}}^{\mathrm{CL}}\right)^{1 / 2} \hat{\Sigma}_{j}^{(C, A)} \mathrm{V}_{i}^{1 / 2}, \\ \hat{\mathrm{~T}}_{j}= & \hat{\mathrm{W}}_{j} \sum_{i=0}^{I-j-1} \mathrm{D}\left(\mathbf{C}_{i, j}^{\mathrm{CL}}\right)^{1 / 2} \\ & \cdot\left(\hat{\Sigma}_{j}^{(C)}\right)^{-1} \hat{\Sigma}_{j}^{(C, A)}\left(\hat{\Sigma}_{j}^{(A)}\right)^{-1} \mathrm{~V}_{i}^{1 / 2} \hat{\mathrm{U}}_{j}, \end{aligned}

where

\hat{\mathrm{W}}_{j}=\left(\sum_{k=0}^{I-j-1} \mathrm{D}\left(\mathbf{C}_{k, j}^{\mathrm{CL}}\right)^{1 / 2}\left(\hat{\Sigma}_{j}^{(C)}\right)^{-1} \mathrm{D}\left(\mathbf{C}_{k, j}^{\mathrm{CL}}\right)^{1 / 2}\right)^{-1}

and

\hat{\mathrm{U}}_{j}=\left(\sum_{k=0}^{I-j-1} \mathrm{~V}_{k}^{1 / 2}\left(\hat{\Sigma}_{j}^{(A)}\right)^{-1} \mathrm{~V}_{k}^{1 / 2}\right)^{-1} .

The matrices $\hat{\Sigma}_{j}^{(C)}$ and $\hat{\Sigma}_{j}^{(A)}$ are the resulting estimates in the iterative estimation procedure for the parameters $\Sigma_{j}^{(C)}$ and $\Sigma_{j}^{(A)}$ (cf. (64) and (67)).

Remark 5.1

-

For a more detailed motivation of the estimates for the different covariance matrices see Merz and Wüthrich (2008, 2009) and Sections 8.2.5 and 8.3.5 in Wüthrich and Merz (2008).

-

If we have enough data (i.e., $I>J$), we are able to estimate the parameters $\Sigma_{J-1}^{(C)}$, $\Sigma_{J-1}^{(A)}$ and $\Sigma_{J-1}^{(C, A)}$ by (64), (67) and (68), respectively. Otherwise, if $I=J$, we do not have enough data to estimate the last covariance matrices. In such cases we can use the estimates $\hat{\varphi}_{j}^{(m, n)}$ of the elements of $\Sigma_{j}^{(C)}$ for $j \leq J-2$ (cf. (16)) to derive estimates $\hat{\varphi}_{J-1}^{(m, n)}$ of the elements of $\Sigma_{J-1}^{(C)}$ for all $1 \leq m, n \leq K$. For example, this can be done by extrapolating the usually decreasing series

\left|\hat{\varphi}_{0}^{(m, n)}\right|, \ldots,\left|\hat{\varphi}_{J-2}^{(m, n)}\right| \tag{69}

by one additional member. Analogously, we can derive estimates for $\Sigma_{J-1}^{(A)}$ and $\Sigma_{J-1}^{(C, A)}$ (see Merz and Wüthrich (2008, 2009) and the example below). However, in all cases it is important to verify that the estimated covariance matrices are positive definite.

-

Observe that the ($K \times K$)-dimensional estimate $\hat{\Sigma}_{j-1}^{(C)(s)}$ is singular if $j \geq I-K+2$, since in this case the dimension of the linear space generated by any realizations of the $K$-dimensional random vectors

\mathrm{D}\left(\mathbf{C}_{i, j-1}^{\mathrm{CL}}\right)^{1 / 2} \cdot\left(\mathbf{F}_{i, j}^{\mathrm{CL}}-\hat{\mathbf{f}}_{j-1}^{(s-1)}\right) \quad \text { with } \quad i \in\{0, \ldots, I-j\} \tag{70}

is at most $K-1$. Analogously, the ($(N-K) \times(N-K)$)-dimensional estimate $\hat{\Sigma}_{j-1}^{(A)(s)}$ is singular when $j \geq I-(N-K)+2$. Furthermore, the random matrix $\hat{\Sigma}_{j-1}^{(C)(s)}$ and/or $\hat{\Sigma}_{j-1}^{(A)(s)}$ may be ill-conditioned for some $j$. Therefore, in practical applications it is important to verify whether the estimates $\hat{\Sigma}_{j-1}^{(C)(s)}$ and $\hat{\Sigma}_{j-1}^{(A)(s)}$ are well-conditioned or not and to modify those estimates (e.g., by extrapolation as in the example below) which are ill-conditioned (see also Merz and Wüthrich (2008, 2009)).
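The singularity argument can be illustrated for K = 2: a covariance estimate built from a single residual vector is a rank-one matrix and has determinant zero, while two linearly independent residual vectors give an invertible matrix. The following sketch shows this with hand-picked vectors; the helper names and the vectors are invented, and the normalizing factor of the estimator is omitted because it does not affect singularity.

```python
def outer_sum_2x2(vectors):
    """Sum of the outer products v v' for 2-dimensional vectors v = (a, b)."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for a, b in vectors:
        s[0][0] += a * a
        s[0][1] += a * b
        s[1][0] += b * a
        s[1][1] += b * b
    return s

def det_2x2(m):
    """Determinant of a 2x2 matrix given as nested lists."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]

det_one_vector = det_2x2(outer_sum_2x2([(1.0, 2.0)]))               # rank one
det_two_vectors = det_2x2(outer_sum_2x2([(1.0, 2.0), (3.0, 1.0)]))  # full rank
```

A determinant check of this kind is only a rough diagnostic; for larger K a condition number would be the more informative quantity.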

6. Example

To illustrate the methodology, we consider two correlated run-off portfolios A and B (i.e., N = 2) which contain data of general and auto liability business, respectively. The data is given in Tables 1 and 2 in incremental and cumulative form, respectively. This is the data used in Braun (2004) and Merz and Wüthrich (2007, 2008, 2009). The assumption that there is a positive correlation between these two lines of business is justified by the fact that both run-off portfolios contain liability business; that is, certain events (e.g., bodily injury claims) may influence both run-off portfolios, and we are able to learn from the observations from one portfolio about the behavior of the other portfolio.

In contrast to Merz and Wüthrich (2008) (multivariate CL method for both portfolios) and Merz and Wüthrich (2009) (multivariate ALR method for both portfolios), we use different claims reserving methods for the two portfolios A and B. We now assume that we only have estimates of the ultimate claims for portfolio A and use the ALR method for portfolio A. The CL method is applied for portfolio B. This means we have $K=1$ and $N-K=1$, and the parameters as well as the a priori estimates $V_{i}$ of the ultimate claims in the different accident years in portfolio A are now scalars. Moreover, it holds that and

Table 3 shows the estimates of the ultimate claims for the two subportfolios A and B as well as the estimates for the whole portfolio consisting of both subportfolios.

Since $I=J$, we do not have enough data to derive estimates of the parameters $\Sigma_{12}^{(C)}$, $\Sigma_{12}^{(A)}$ and $\Sigma_{12}^{(C, A)}$ by means of the proposed estimators. Therefore, we use the extrapolations

\begin{aligned} \hat{\Sigma}_{12}^{(C)} & =\min \left\{\hat{\Sigma}_{10}^{(C)},\left(\hat{\Sigma}_{11}^{(C)}\right)^{2} / \hat{\Sigma}_{10}^{(C)}\right\}, \\ \hat{\Sigma}_{12}^{(A)} & =\min \left\{\hat{\Sigma}_{10}^{(A)},\left(\hat{\Sigma}_{11}^{(A)}\right)^{2} / \hat{\Sigma}_{10}^{(A)}\right\}, \quad \text { and } \\ \hat{\Sigma}_{12}^{(C, A)} & =\hat{\Sigma}_{12}^{(A, C)}=\min \left\{\left|\hat{\Sigma}_{10}^{(C, A)}\right|,\left(\hat{\Sigma}_{11}^{(C, A)}\right)^{2} /\left|\hat{\Sigma}_{10}^{(C, A)}\right|\right\} \end{aligned} \tag{71}

to derive estimates of and. Moreover, so that and are positive definite, we estimate $\Sigma_{11}^{(A)}$ and $\Sigma_{11}^{(C, A)}$ by

\begin{aligned} \hat{\Sigma}_{11}^{(A)} & =\min \left\{\hat{\Sigma}_{9}^{(A)},\left(\hat{\Sigma}_{10}^{(A)}\right)^{2} / \hat{\Sigma}_{9}^{(A)}\right\}, \\ \hat{\Sigma}_{11}^{(C, A)} & =\hat{\Sigma}_{11}^{(A, C)}=\min \left\{\left|\hat{\Sigma}_{9}^{(C, A)}\right|,\left(\hat{\Sigma}_{10}^{(C, A)}\right)^{2} /\left|\hat{\Sigma}_{9}^{(C, A)}\right|\right\} . \end{aligned} \tag{72}
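The extrapolation rule shared by (71) and (72) can be sketched in a few lines of Python. This is only an illustration: the function name `extrapolate_last` and all numerical inputs are hypothetical and are not taken from Table 4. The sketch also assumes that, for the ALR parameters, (72) is applied first and its result is then fed into (71), since (71) refers to the index-11 estimate.

```python
def extrapolate_last(sigma_prev2: float, sigma_prev1: float) -> float:
    """Extrapolate a missing (co)variance estimate as in (71) and (72):

        min{ |s_{j-2}|, s_{j-1}^2 / |s_{j-2}| }.

    For variance parameters the inputs are positive, so the absolute
    value has no effect; for the cross term it reproduces the |.| in
    the third line of (71) and the second line of (72).
    """
    base = abs(sigma_prev2)
    if base == 0.0:
        raise ValueError("sigma_prev2 must be non-zero")
    return min(base, sigma_prev1 ** 2 / base)


# Hypothetical variance estimates for indices 9 and 10 (not from the paper).
sigma_9, sigma_10 = 9.0, 3.0

# Assumed order of application: (72) gives index 11, then (71) gives index 12.
sigma_11 = extrapolate_last(sigma_9, sigma_10)
sigma_12 = extrapolate_last(sigma_10, sigma_11)

# Hypothetical covariance estimates; the sign of the older value is ignored.
cov_12 = extrapolate_last(-0.9, 0.3)
```

Because the result is capped by the absolute value of the older estimate, the extrapolated sequence cannot grow in magnitude from one development period to the next.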

Table 4 shows the estimates for the parameters. The one-dimensional estimates and are the parameter estimates used in the univariate ALR method applied to the individual subportfolio A. Analogously, the one-dimensional estimates and are the parameter estimates used in the univariate CL method applied to the individual subportfolio B. From the estimates of the covariances we obtain estimates of the correlation coefficients by dividing each covariance estimate by the square root of the product of the two corresponding variance estimates.

Note: Since both the CL method and the ALR method are applied to one-dimensional triangles, the parameter estimates and can be calculated directly (using the univariate methods) and one can omit the iteration described in Section 5.
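To make the step from covariance estimates to correlation coefficients concrete, the following sketch uses the standard definition of a correlation coefficient. The function names and the numerical inputs are hypothetical. It is also an assumption of this sketch that the positive definiteness mentioned above refers to the 2×2 matrix formed by the two variance estimates and their covariance estimate.

```python
import math


def correlation(cov_ca: float, var_c: float, var_a: float) -> float:
    """Standard correlation coefficient: cov / sqrt(var_c * var_a)."""
    if var_c <= 0.0 or var_a <= 0.0:
        raise ValueError("variance estimates must be positive")
    return cov_ca / math.sqrt(var_c * var_a)


def is_positive_definite_2x2(var_c: float, var_a: float, cov_ca: float) -> bool:
    """Check [[var_c, cov_ca], [cov_ca, var_a]] for positive definiteness.

    A symmetric 2x2 matrix is positive definite exactly when its leading
    entry and its determinant are both positive, which is equivalent to
    positive variances and |correlation| < 1.
    """
    return var_c > 0.0 and var_c * var_a - cov_ca ** 2 > 0.0


# Hypothetical estimates for one development period (not from Table 4).
rho = correlation(cov_ca=0.3, var_c=1.0, var_a=0.25)
ok = is_positive_definite_2x2(var_c=1.0, var_a=0.25, cov_ca=0.3)
```

A covariance estimate that is too large relative to the variances yields an implied correlation outside (-1, 1) and fails the check.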

The first two columns of Table 5 show for each accident year the reserves for subportfolios A and B estimated by the (univariate) ALR method and the (univariate) CL method, respectively. The last column, denoted by “Portfolio Reserves Total,” shows the estimated reserves for the entire portfolio.

Table 6 shows for each accident year the estimates for the conditional process standard deviations and the corresponding estimates for the coefficients of variation. The first two columns contain the values for the individual subportfolios A and B calculated by the (univariate) ALR method and the (univariate) CL method, respectively. The last column, denoted by “Portfolio Total,” shows the values for the entire portfolio.

The same overview is generated for the square roots of the estimated conditional estimation errors in Table 7.

Finally, the first three columns in Table 8 give the same overview for the estimated prediction standard errors.

Moreover, the last two columns in Table 8 contain the results for the estimated prediction standard errors assuming no correlation and perfect positive correlation between the corresponding claims reserves of the two subportfolios A and B. These values are calculated by

\begin{aligned} \widehat{\operatorname{msep}}_{\mathbf{C}_{i, J} \mid \mathcal{D}_{I}^{N}}= & \widehat{\operatorname{msep}}_{C_{i, J}^{(1)} \mid \mathcal{D}_{I}^{N}}+\widehat{\operatorname{msep}}_{C_{i, J}^{(2)} \mid \mathcal{D}_{I}^{N}} \\ & +2 c \, \widehat{\operatorname{msep}}_{C_{i, J}^{(1)} \mid \mathcal{D}_{I}^{N}}^{1 / 2} \, \widehat{\operatorname{msep}}_{C_{i, J}^{(2)} \mid \mathcal{D}_{I}^{N}}^{1 / 2} \end{aligned} \tag{73}

with c = 0 and c = 1, respectively. Except for accident year 3, the estimates in the third column lie between those assuming no correlation and those assuming perfect positive correlation, both for single accident years and for aggregated accident years. Note that accounting for the correlation between subportfolios adds about 9% to the estimated prediction standard error for the entire portfolio (295,038 vs. 271,015).
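The two benchmark cases in (73) can be illustrated with a short sketch. The function name `combined_std_error` and the subportfolio standard errors 3.0 and 4.0 are hypothetical; only the two portfolio totals 295,038 and 271,015 are taken from the text above.

```python
import math


def combined_std_error(se_1: float, se_2: float, c: float) -> float:
    """Square root of (73): combine two prediction standard errors.

    se_1 and se_2 are the square roots of the estimated MSEPs of the
    two subportfolios; c = 0 assumes no correlation and c = 1 assumes
    perfect positive correlation.
    """
    return math.sqrt(se_1 ** 2 + se_2 ** 2 + 2.0 * c * se_1 * se_2)


# Hypothetical subportfolio standard errors (not from Table 8).
no_corr = combined_std_error(3.0, 4.0, c=0.0)    # sqrt(9 + 16)
full_corr = combined_std_error(3.0, 4.0, c=1.0)  # reduces to 3 + 4

# Relative increase implied by the two totals quoted in the text.
uplift = 295_038 / 271_015 - 1.0
```

With c = 1 the formula reduces to the sum of the two standard errors, and with c = 0 to the square root of the sum of the two MSEP estimates. The quoted totals give an uplift of roughly 0.089, which matches the figure of about 9%.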