1. Introduction

NCCI has modified the methodology used to determine a state’s overall average loss cost or rate level indication for workers compensation insurance to improve the treatment of large individual claims, and catastrophic, multiclaim events related to the perils of industrial accidents, earthquake, and terrorism.

This paper describes the new methodology NCCI has filed in many states. It discusses the changes to the traditional methods for aggregate ratemaking and the advanced modeling techniques that were used to quantify loss cost provisions by state for these perils. The new large loss ratemaking procedure uses reported losses capped at a given dollar threshold and adds a provision for expected losses excess of this threshold. The details underlying this approach and the decision-making process are discussed.

2. Background and methods

Prior to the 1970s, workers compensation rates promulgated by NCCI included a 1-cent catastrophe provision in every rate. This provision was eventually removed from ratemaking.

The events of September 11, 2001, which caused the greatest insured loss in property-casualty history to date (although it may have subsequently been exceeded by Hurricane Katrina), brought into focus the potential impact of large events on workers compensation. NCCI estimates that the insured workers compensation loss from the events of September 11 is in the range of $1.3 billion to $2.0 billion on a direct (gross of reinsurance) basis. The events of that day created a compelling reason to take a fresh look at how workers compensation ratemaking could fund such large, infrequent events prospectively. It became clear that funding large events is an issue in workers compensation, and is no longer just an issue confined to personal and commercial property insurance.

2.1. Overview of the methodology change

NCCI revised its aggregate ratemaking approach in 2004. First, limited losses are calculated by subtracting from a state's aggregate unlimited losses the portion of each claim's loss dollars that exceeds a given dollar threshold. Next, the limited aggregate losses are multiplied by limited loss development factors (discussed later) to obtain ultimate limited losses. Loss ratio and severity trends are then calculated from these limited losses, and benefit changes are applied to the limited base of losses.

Finally, the trended ultimate limited losses are divided by a factor (1 – XS), where XS is the Excess Ratio (described later) for the appropriate dollar threshold, resulting in total projected ultimate losses, for use in ratemaking.
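The four steps above can be sketched for a single experience year as follows. This is a minimal illustration under simplifying assumptions, not NCCI's implementation: the function name `projected_ultimate_losses` is hypothetical, a single limited development factor stands in for the separate indemnity and medical factors, and trend and benefit changes are treated as multiplicative factors.

```python
def projected_ultimate_losses(
    unlimited_losses: float,
    excess_dollars: float,
    limited_ldf: float,
    trend_factor: float,
    benefit_factor: float,
    excess_ratio: float,
) -> float:
    """Project total ultimate losses for one experience year (hypothetical helper).

    unlimited_losses: aggregate reported losses for the state and year
    excess_dollars:   sum over claims of the loss dollars above the threshold
    limited_ldf:      limited loss development factor to ultimate
    trend_factor:     loss ratio trend factor to the filing's midpoint (assumed multiplicative)
    benefit_factor:   benefit change adjustment (assumed multiplicative)
    excess_ratio:     XS for the state's threshold
    """
    if not 0.0 <= excess_ratio < 1.0:
        raise ValueError("excess ratio must lie in [0, 1)")
    limited_losses = unlimited_losses - excess_dollars            # 1. remove excess dollars
    ultimate_limited = limited_losses * limited_ldf               # 2. limited development
    projected = ultimate_limited * trend_factor * benefit_factor  # 3. trend and benefit changes
    return projected / (1.0 - excess_ratio)                       # 4. gross up by 1/(1 - XS)


# Hypothetical inputs in $ millions.
print(round(projected_ultimate_losses(100.0, 5.0, 1.2, 1.05, 1.0, 0.05), 1))  # 126.0
```

Dividing by (1 − XS) restores, on average, the share of losses that the cap removed, so the result is again on an unlimited basis.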

2.2. How were large events handled in the past?

Historically, NCCI actuaries occasionally encountered one or more large individual or multiclaim occurrences in past loss cost and rate filings that impacted a state’s overall loss cost or rate level indication. The methods of handling these claims varied from state to state, and included the following ad hoc approaches:

- Making no adjustment to the reported experience for the state
- Selecting a longer experience period (for example, three policy years in lieu of two years)
- Allowing the large claim(s) to remain in the base losses, without applying loss development factors to the specific large losses
- Removing the large claim completely from the experience period, without building back any excess provision

Similar decisions were made for loss development and loss ratio trend selections. It made sense for NCCI to develop an approach that was standardized and uniformly applicable across its states.

2.3. Goals and objectives

The goal of this research was to develop an aggregate ratemaking methodology that would provide long-term adequacy of loss costs, rates, and rating values while recognizing the need for rate stability, particularly at a state level. The new methodology also standardizes the handling of individual large claims in aggregate ratemaking.

2.4. Defining a large event

In 2002, NCCI began working with EQECAT, a division of ABS Consulting. EQECAT is a modeling firm that has performed modeling for the California Earthquake Authority, a large earthquake pool, as well as modeling used extensively in windstorm filings. The perils EQECAT modeled specifically for NCCI included the following:

- Terrorism
- Earthquake
- Catastrophic industrial accidents

Naturally, from NCCI's perspective, the priority was the injuries and losses from the simulated events that relate to workers compensation.

It soon became clear to NCCI actuaries that the most practical approach for treating large catastrophic events in a ratemaking context was to exclude entirely from the NCCI ratemaking data any actual catastrophic events that occurred in the past due to these perils. The reasons for doing this included:

- Actual catastrophic events of this nature that impact the workers compensation line of insurance have rarely occurred. Thus, they would not be predictive by their nature.
- Actual catastrophic events would create volatility for a state’s loss cost structure.
- Direct carriers cannot put per-claim or per-occurrence limits on workers compensation policies. Therefore, events such as these could not be excluded from workers compensation coverage without statutory actions by legislators.
- Aggregating information from such large events would create difficult data reporting issues, conceivably involving multiple employers and multiple insurance carriers.
- It was easy to remove data for these perils from NCCI’s historical databases, provided the loss limitation dollar amount chosen was sufficiently large.
- State-of-the-art modeling techniques could be used to better estimate the cost of large events directly caused by one of the named perils.

After much discussion, both internally at NCCI and with external parties including carrier representatives and regulatory authorities, NCCI selected a threshold of $50 million for the specific perils of terrorism, earthquake, or catastrophic multiclaim occurrences. This threshold applies per occurrence, across all states for which claims arise from a single occurrence.

The entire ground-up amount of losses generated from a catastrophic multiclaim event is removed from the ratemaking data, not just the portion excess of $50 million. The loss costs derived from the modeling for the named perils include the cost of the first $50 million layer, as well as the excess.

NCCI removes the catastrophic occurrences first, and then caps individual claims. Large individual claims are capped at amounts that depend upon (1) the premium volume written in the state, and (2) the accident date of the claim. This procedure is described in more detail under the section entitled “Selecting a Threshold by State.”
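A minimal sketch of the ordering described above: remove catastrophic occurrences first, then cap individual claims. The `Claim` record, the function names, and the optional occurrence identifier are hypothetical. Peril screening is omitted, and the state thresholds here are not de-trended by accident date, although the actual procedure de-trends them.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional

CAT_THRESHOLD = 50_000_000  # per occurrence, summed across all states


@dataclass
class Claim:
    state: str
    amount: float                        # "paid + case" value of the claim
    occurrence_id: Optional[str] = None  # catastrophe/occurrence identifier, if any


def remove_catastrophes(claims: list[Claim]) -> list[Claim]:
    """Drop every claim from an occurrence whose countrywide total exceeds $50M.

    The entire ground-up amount of such an occurrence is removed, not only
    the portion above $50M.
    """
    totals: dict[str, float] = defaultdict(float)
    for c in claims:
        if c.occurrence_id is not None:
            totals[c.occurrence_id] += c.amount
    return [
        c for c in claims
        if c.occurrence_id is None or totals[c.occurrence_id] <= CAT_THRESHOLD
    ]


def cap_claims(claims: list[Claim], state_threshold: dict[str, float]) -> list[float]:
    """After catastrophe removal, cap each remaining claim at its state's threshold."""
    return [min(c.amount, state_threshold[c.state]) for c in claims]


claims = [
    Claim("NY", 40e6, "A"), Claim("NJ", 20e6, "A"),  # occurrence A totals $60M: removed
    Claim("NY", 10e6, "B"),                           # occurrence B totals $10M: kept
    Claim("NY", 3e6),                                 # single large claim: capped
]
print(cap_claims(remove_catastrophes(claims), {"NY": 2e6}))  # [2000000.0, 2000000.0]
```

Removing occurrences before capping matters: if claims were capped first, the pieces of a $60M event spread across states could each fall below their state caps and leak back into the ratemaking data.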

2.4.1. Capturing the detail on large individual claims and events

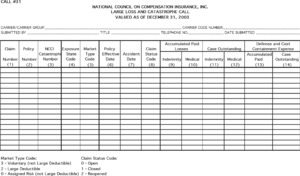

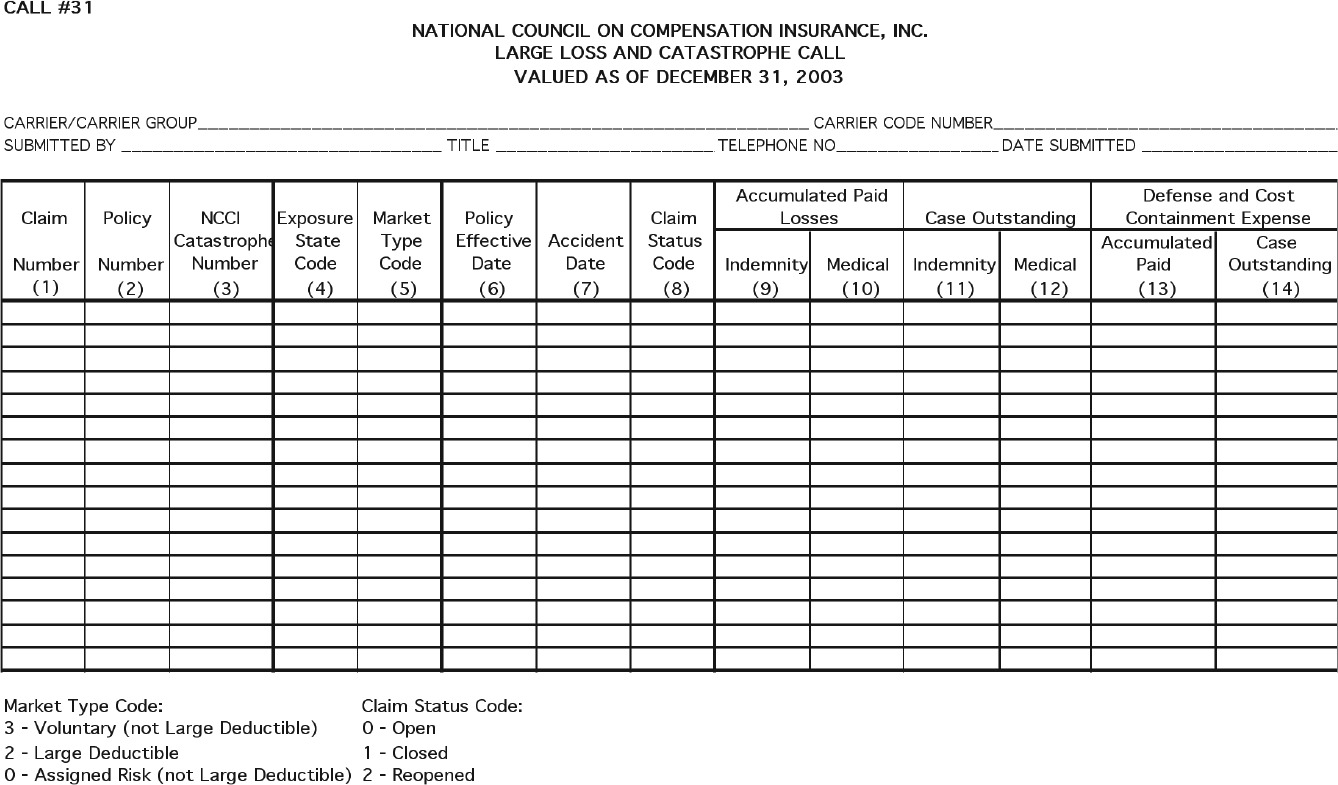

For use in workers compensation ratemaking, NCCI collects the Policy Year Call (#3) and Calendar-Accident Year Call (#5), among other calls. The data calls are due by April 1 each year, and provide a year-end snapshot of 20 individual years of cumulative data and certain aggregate data on prior years. NCCI collects the data by carrier and by state, and the data are reconciled to each carrier’s Annual Statement. Because these data are reported on a summarized basis, large individual claims are not identified. Thus, a new call was required to provide the information needed to implement the new large loss procedure.

NCCI designed Call #31, considering input from NCCI’s Actuarial Committee and Data Collection Procedures Subcommittee, to capture detail on large individual claims greater than $500,000 and multiclaim occurrences from large catastrophic events. Extraordinary loss events that may involve multiple insurance lines of business, states, or data collection organizations are synchronized with the already existing catastrophe numbering system administered by the Insurance Services Office (ISO) for the property casualty industry. When Call #31 was introduced, carriers reported five annual evaluations of the data, as of year-end 1998 through year-end 2002. Call #31 data are now reported to NCCI once per year, evaluated as of year-end. A copy of Call #31 is included in Appendix A.

2.4.2. Selecting a threshold by state

In order to perform the large loss limitation procedure in aggregate ratemaking, a threshold is needed at which individual claims will be limited.

Thresholds are state-specific. They were initially calculated from a state’s on-leveled and developed Designated Statistical Reporting (DSR) level premium for the experience period used in the previous year’s filing. The initial dollar threshold is one percent of this premium after all currently approved expense provisions are removed. For example, in a full rate state, the threshold is standard premium at DSR level, less all expenses, multiplied by 0.01. The premium includes all policy (or accident) years in the experience period used in the most recent previous filing. Essentially, this approach defines a large individual claim as one that, under the prior methodology, would by itself change the overall average statewide loss cost level by at least one percent. Depending on the state, the experience period generally consists of two or three years. The advantages of this approach are that loss limitation thresholds:

- reflect the actual loss volume in each state;
- are inflation sensitive;
- temper the impact that one large claim may have on the overall statewide loss cost level indication;
- install a standardized approach across states.
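The threshold formula can be sketched as below, assuming for simplicity that the approved expense provisions can be expressed as a single expense ratio. The function `initial_threshold` and the premium figures are hypothetical.

```python
def initial_threshold(dsr_premium_by_year: dict[int, float], expense_ratio: float) -> float:
    """One percent of on-leveled, developed DSR-level premium net of expenses (sketch).

    dsr_premium_by_year: premium for each policy (or accident) year in the prior
        filing's experience period, already on-leveled and developed
    expense_ratio: approved expense provisions as a share of premium (assumed single ratio)
    """
    total_premium = sum(dsr_premium_by_year.values())
    pure_premium = total_premium * (1.0 - expense_ratio)  # remove expense provisions
    return 0.01 * pure_premium


# Hypothetical two-year experience period: $820M of premium, 25% expenses.
threshold = initial_threshold({2002: 400e6, 2003: 420e6}, 0.25)  # about $6.15M
```

Because the threshold is 1% of the experience period's pure premium, a claim of that size would, under the prior methodology, move the indicated statewide level by at least one percent, which is the intuition the text gives for this definition.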

A lower threshold results in more claims being limited (and losses removed), but also results in a greater expected excess factor being applied. Conversely, if a larger threshold is selected, fewer losses are limited and removed from ratemaking, but the magnitude of the expected excess factor is smaller. NCCI had considered a two percent of DSR pure premium threshold, but after observing the hypothetical results of previous loss cost filings for many states under both thresholds, a one-percent threshold was chosen. The main reason for selecting the one-percent threshold was to provide stability.

2.5. Limited loss development

Historically, NCCI workers compensation aggregate ratemaking was based on unlimited loss development factors applied to unlimited losses. The new methodology revises the loss development procedure to use limited loss development factors applied to limited base losses from the state’s experience period. Thus, the ultimate losses derived are limited to a given threshold, analogous to the concept of basic limits losses commonly found in other property-casualty lines of insurance. In other lines of insurance, the insured makes the decision as to how much coverage to purchase, and increased limit factors are computed and applied to derive the proper loss estimate for the limit sold on the policy.

The important difference between workers compensation and those other lines of insurance is that workers compensation benefits are set by statute, and workers compensation provides essentially unlimited medical benefits. In some jurisdictions, wage replacement benefits are also unlimited in duration. Therefore, the unique challenge that workers compensation presents for NCCI actuaries is that limited ultimate losses must be brought to an unlimited ultimate basis. This is addressed by applying the excess ratio, which is discussed in a later section of the paper.

NCCI computes loss development factors separately for indemnity and medical benefits. Therefore, to limit individual claims, NCCI had to determine a procedure for capping both components. The procedure NCCI uses to cap individual claims is discussed in a later section of this paper.

A difficult hurdle the NCCI actuaries faced in implementing a methodology based on limited loss development was how to handle the workers compensation tail factor, which is a 19th-report-to-ultimate factor based on incurred losses including IBNR. Beyond the usual difficulty of estimating the tail factor in workers compensation, the actuaries had to answer the question, “How does one cap a bulk reserve?” A subsequent section of this paper is devoted to the modifications made to the NCCI tail factor methodology.

2.5.1. De-trending loss thresholds for loss development

The accident date of a claim is considered in the loss limitation that is applied. This is achieved through a process NCCI calls de-trending. De-trending is a procedure that progressively reduces the thresholds in historical periods to remove the distortion inflation has on loss development triangles. Thresholds are de-trended each year by the corresponding change in the annual, state-specific, Current Population Survey (CPS) wage index. This procedure was chosen for the following reasons:

- State-specific wage changes reflect indemnity inflation, and, as verified through actual testing, provide a very reasonable proxy for medical inflation over a long period.
- Annual state-specific wage information is already used in other areas of the filing such as the wage adjustment used in loss ratio trend calculations.
- The medical consumer price index (CPI) commonly used to approximate medical inflation is only available on a countrywide or regional basis rather than a state-specific basis.
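The de-trending step can be sketched as follows, assuming the state CPS wage changes are supplied as annual decimal changes keyed by year. The function and parameter names are hypothetical. The sketch also applies the $500,000 minimum that NCCI uses because Call #31 captures only claims above that amount.

```python
MIN_THRESHOLD = 500_000  # Call #31 reports only claims above $500,000


def detrended_thresholds(
    current_threshold: float,
    current_year: int,
    wage_change: dict[int, float],
    first_year: int,
) -> dict[int, float]:
    """De-trend a threshold back to earlier accident years (sketch).

    wage_change[y] is the assumed state CPS wage change from year y-1 to year y
    (e.g. 0.03 for +3%). Each earlier year's threshold is the later year's
    threshold divided by that year's wage growth, then floored at $500,000.
    """
    thresholds = {current_year: max(current_threshold, MIN_THRESHOLD)}
    t = current_threshold  # unfloored running value
    for year in range(current_year - 1, first_year - 1, -1):
        t /= 1.0 + wage_change[year + 1]
        thresholds[year] = max(t, MIN_THRESHOLD)
    return thresholds


thresholds_by_year = detrended_thresholds(1_000_000, 2005, {2005: 0.04, 2004: 0.03}, 2003)
# thresholds_by_year[2004] is about 961,538 and thresholds_by_year[2003] about 933,532
```

The floor is applied to each reported threshold but not to the running value, so a floored year does not distort the de-trending of the years before it.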

NCCI tested on actual data how the thresholds would differ if the de-trend factors were based on annual medical CPI percentage changes instead of CPS wage changes. The resulting differences in loss cost level indications by state between the two de-trending approaches were hardly discernible. Thus, it was not clear that the countrywide medical CPI would better represent state-specific medical inflation than the state-specific CPS wage index.

The de-trending percentage represents an inflationary amount to recognize the change in the average nominal costs of a claim over time. It does not represent the loss severity trend that occurred from year to year. A loss severity trend in workers compensation measures much more than inflation. It measures such changes as the following:

- Changes in the utilization of benefits, such as longer or shorter claim durations, or the propensity of claimants to return to work sooner or later than in the past
- Changes in medical utilization, such as increased usage of more expensive treatments, medical procedures, and pharmaceuticals with no generic equivalents, etc.
- Changes to a state’s administration of its workers compensation system, which may increase or reduce adjudication delays, alter dispute resolution processes, or increase or decrease attorney involvement, etc.

If the de-trend percentage were the total loss severity trend (which is very difficult to isolate and quantify), NCCI’s loss cost forecasts would be distorted by some implicit amount. NCCI actuaries tested two possible indices for de-trending: CPI inflation and changes in total claim severity. Simple models demonstrated that the de-trending index should be based on inflation, because it produces more predictive loss development factors than an index based on claim severity.

Using the simple models, NCCI separately tested the impact on loss development factors and resulting ultimate losses of de-trending the cap using both an inflation index and a severity index. De-trending by an inflation index preserves the value of the age-to-age link ratios when average claim size is increasing due to inflation, which is what one would expect. When severity increases due to changes in claim duration, using inflation to de-trend preserves the value of the age-to-age link ratios for early reports, but link ratios for later reports need to be adjusted to reflect lengthening durations. The alternative de-trending index, claim severity, results in distorted age-to-age link ratios at every age, making the resulting ultimate losses less predictive. In conclusion, the resulting ultimate losses were more predictive using an inflation index to de-trend large loss thresholds.
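The inflation case of this argument can be checked with a toy model (hypothetical, not one of NCCI's models): every accident year has the same claims scaled by cumulative inflation and the same cumulative reporting pattern. With the cap de-trended at the same inflation rate, every capped amount in accident year a is a constant multiple of its year-0 counterpart, so the capped link ratios match across years. With a fixed nominal cap, a growing share of later years' losses is cut off and the link ratios drift. The function name and all inputs are assumptions.

```python
def capped_link_ratios(
    base_claims: list[float],
    pattern: list[float],
    inflation: float,
    n_years: int,
    cap_latest: float,
    detrend: bool,
) -> dict[int, list[float]]:
    """Capped age-to-age link ratios by accident year in a toy model (hypothetical).

    base_claims: ultimate claim sizes for accident year 0
    pattern:     cumulative share of ultimate reported at each age (ends at 1.0)
    inflation:   accident year a has claims scaled by (1 + inflation) ** a
    cap_latest:  threshold for the latest accident year (n_years - 1)
    detrend:     if True, year a's cap is cap_latest / (1 + inflation) ** (n_years - 1 - a);
                 if False, the same nominal cap applies to every year
    """
    ratios = {}
    for a in range(n_years):
        scale = (1.0 + inflation) ** a
        if detrend:
            cap = cap_latest / (1.0 + inflation) ** (n_years - 1 - a)
        else:
            cap = cap_latest
        # Capped cumulative losses at each age for this accident year.
        capped_by_age = [sum(min(x * scale * p, cap) for x in base_claims) for p in pattern]
        ratios[a] = [capped_by_age[d + 1] / capped_by_age[d] for d in range(len(pattern) - 1)]
    return ratios


claims = [0.2, 1.5, 4.0]   # hypothetical ultimate claim sizes, $ millions
pattern = [0.5, 0.8, 1.0]  # hypothetical cumulative reporting pattern
detrended = capped_link_ratios(claims, pattern, 0.05, 5, 2.0, detrend=True)
fixed = capped_link_ratios(claims, pattern, 0.05, 5, 2.0, detrend=False)
print([round(r[0], 4) for r in detrended.values()])  # the same first link ratio for every year
print([round(r[0], 4) for r in fixed.values()])      # drifts upward as claims inflate
```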

Because NCCI actuaries develop a range of indications using policy year and calendar/accident year data, NCCI must de-trend large loss thresholds applicable to both sets of data. NCCI calculates the accident year de-trended thresholds first, and then calculates the de-trended policy year thresholds. This is accomplished by weighting together two adjacent calendar/accident year thresholds using the state-specific distributions of premium writings by month. The reason for de-trending accident year thresholds first is that the CPS wage changes are on a calendar year basis, which is a better match with calendar/accident year data.
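A sketch of the weighting, under assumptions the text does not state: 12-month policies written at mid-month, exposure earned evenly over the term, and a policy-year threshold equal to the exposure-weighted average of the thresholds for the two calendar/accident years the policies span. NCCI's exact weighting formula may differ, and the function name is hypothetical.

```python
def policy_year_threshold(
    ay_threshold: dict[int, float], policy_year: int, monthly_premium_share: list[float]
) -> float:
    """Weight two adjacent accident-year thresholds into a policy-year threshold (sketch).

    monthly_premium_share[m] is the share of the policy year's premium written in
    month m + 1; the twelve shares must sum to 1.
    """
    if len(monthly_premium_share) != 12 or abs(sum(monthly_premium_share) - 1.0) > 1e-9:
        raise ValueError("need 12 monthly shares summing to 1")
    # A policy written mid-month m + 1 has 1 - (m + 0.5) / 12 of its term in the same
    # calendar year; the rest falls in the following year.
    w_same = sum(
        share * (1.0 - (m + 0.5) / 12.0) for m, share in enumerate(monthly_premium_share)
    )
    return w_same * ay_threshold[policy_year] + (1.0 - w_same) * ay_threshold[policy_year + 1]


# Hypothetical: uniform writings give equal weight to the two accident years.
py_2004 = policy_year_threshold({2004: 960_000.0, 2005: 1_000_000.0}, 2004, [1 / 12] * 12)
```

Under uniform writings the result is the simple average of the two accident-year thresholds; a state whose premium is concentrated in January would lean almost entirely on the first year's threshold.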

NCCI uses the same threshold and excess ratio for loss cost level indications based on paid and “paid + case” losses. Since large losses are reported to NCCI only for those claims with “paid + case” loss amounts greater than $500,000, the minimum de-trended threshold used in a state is $500,000, despite the fact that de-trending could generate a lower threshold.

Due to the size of DSR pure premium in the states of Florida and Illinois, and hence, the very large indicated threshold, the large loss procedure was not filed in those jurisdictions.

2.5.2. Applying the loss limitations (i.e., caps) to individual claims

In workers compensation ratemaking, indemnity and medical losses are analyzed separately. The traditional chain-ladder loss development techniques project ultimate losses using cumulative paid losses as the base (i.e., “paid” methods), as well as cumulative paid losses plus case reserve amounts (i.e., “paid + case” methods). In a given state, the NCCI actuaries review a range of indications based on both “paid” and “paid + case” methodologies. Therefore, capping large claims was more challenging than expected.

After reviewing several loss limitation possibilities, NCCI selected a methodology that limits payments first and then limits case reserves. The capping is applied to individual claims within the experience period as well as within the historical loss development triangles. For the sake of brevity, the many other capping options NCCI considered are not described in this paper.

NCCI uses proportional capping to allocate limited claim amounts. For claims above the threshold, the limited amount in each layer (paid losses, then case reserves) is allocated between indemnity and medical in proportion to each component’s share of that layer. NCCI limits paid losses first, then limits the case reserves until the per-claim threshold is reached. The remaining excess losses are subtracted from the aggregate unlimited losses to calculate limited losses for use in ratemaking. Hypothetical examples illustrating how claims are limited follow.

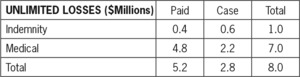

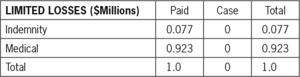

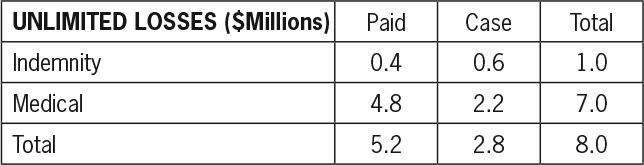

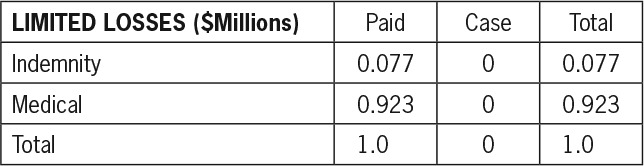

Illustration 1. For claims that have pierced the threshold on a “paid” basis; State threshold = $1M:

In this situation, the resultant limited amounts are as follows:

The formula for deriving the limited paid amounts for indemnity and medical is

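$$\frac{\text{Indemnity paid}}{\text{Total paid}} \times \text{threshold} = \frac{0.4}{5.2} \times 1.0 = 0.077$$

$$\frac{\text{Medical paid}}{\text{Total paid}} \times \text{threshold} = \frac{4.8}{5.2} \times 1.0 = 0.923$$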

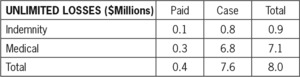

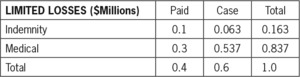

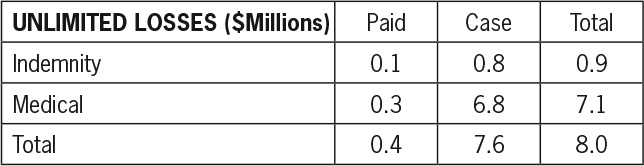

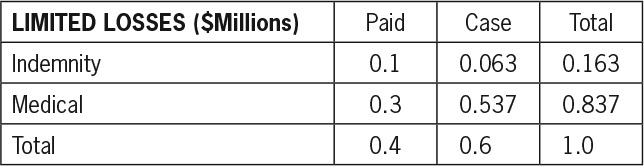

Illustration 2. A claim has not pierced the threshold on a “paid” basis, but has pierced the threshold on a “paid + case” basis; State threshold = $1M:

In this situation, the resultant limited amounts are as follows:

In Illustration 2, the limited paid amounts are identical to the unlimited paid amounts. The “remainder of threshold” is computed as follows:

$$\text{Remainder of threshold} = \text{threshold} - \text{total paid} = 1.0 - 0.4 = 0.6$$

The formula for limited case reserve amounts for indemnity and medical:

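$$\frac{\text{Indemnity reserve}}{\text{Total reserve}} \times \text{remainder of threshold} = \frac{0.8}{7.6} \times 0.6 = 0.063$$

$$\frac{\text{Medical reserve}}{\text{Total reserve}} \times \text{remainder of threshold} = \frac{6.8}{7.6} \times 0.6 = 0.537$$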
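Both illustrations follow from one rule, sketched below as a hypothetical `cap_claim` function (amounts in $ millions). The text does not give Illustration 1's case reserves or the indemnity/medical split of Illustration 2's $0.4M of paid losses, so the reserves of 0.0 and the 0.1/0.3 split in the usage lines are hypothetical. Neither affects the results shown: once paid losses pierce the threshold, any reserves are capped to zero, and the reserve allocation depends only on total paid.

```python
def cap_claim(
    ind_paid: float, med_paid: float, ind_reserve: float, med_reserve: float, threshold: float
) -> tuple[float, float, float, float]:
    """Proportionally cap one claim: limit paid losses first, then case reserves.

    Returns limited (indemnity paid, medical paid, indemnity reserve, medical reserve).
    """
    total_paid = ind_paid + med_paid
    total_reserve = ind_reserve + med_reserve
    if total_paid + total_reserve <= threshold:
        return ind_paid, med_paid, ind_reserve, med_reserve  # below threshold: unchanged
    if total_paid >= threshold:
        # Pierced on a "paid" basis: split the threshold by paid shares; no reserves remain.
        return (ind_paid / total_paid * threshold, med_paid / total_paid * threshold, 0.0, 0.0)
    # Pierced only on a "paid + case" basis: paid is unchanged, and the remainder
    # of the threshold is split by reserve shares.
    remainder = threshold - total_paid
    return (
        ind_paid,
        med_paid,
        ind_reserve / total_reserve * remainder,
        med_reserve / total_reserve * remainder,
    )


print([round(v, 3) for v in cap_claim(0.4, 4.8, 0.0, 0.0, 1.0)])  # [0.077, 0.923, 0.0, 0.0]
print([round(v, 3) for v in cap_claim(0.1, 0.3, 0.8, 6.8, 1.0)])  # [0.1, 0.3, 0.063, 0.537]
```

In both branches the four limited amounts sum to the threshold, so the excess subtracted from aggregate losses is exactly the claim's value above the cap.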

2.5.3. Tail factor adjustment

A limited tail factor (referred to as a capped tail factor in the terminology that is being introduced in this section) is needed to properly develop capped “paid” and “paid + case” losses to an ultimate basis. The previous NCCI tail methodology generates uncapped (i.e., unlimited) tail factors. Because claims with accident dates prior to 1984 are not reported on Call #31 (Large Loss and Catastrophe Call), it is not possible to adjust the state uncapped tail to a capped tail by removing the effect of losses excess of the state threshold. In order to convert the uncapped “paid + case” tail factor to a capped “paid + case” tail factor, we use a tail adjustment.

The tail adjustment considers the relationship between a countrywide capped “paid + case” tail factor and a countrywide uncapped “paid + case” tail factor, and applies that relationship to individual state uncapped “paid + case” tail factors to generate state-specific capped “paid + case” tail factors.

First, a countrywide capped tail factor $CLDF_T$ is derived for the threshold $T$ from countrywide uncapped tail factors, countrywide excess tail factors, and countrywide excess ratios, using the formula

$$CLDF_T = \frac{1 - XS_T}{\dfrac{1}{ULDF} - \dfrac{XS_T}{ELDF_T}} \tag{2.1}$$

where

$CLDF_T$ = Capped “paid + case” tail factor, 19th-to-ultimate, for threshold $T$,

$ULDF$ = Uncapped “paid + case” tail factor, 19th-to-ultimate,

$XS_T$ = Excess ratio for threshold $T$, i.e., the ratio of losses excess of $T$ to total losses at an ultimate report,

$ELDF_T$ = Excess “paid + case” tail factor, 19th-to-ultimate, for threshold $T$.

All of the above factors are on a countrywide basis for medical and indemnity benefits combined, across all injury types. Thresholds are de-trended to the 19th prior report.

The numerator of the right-hand side of (2.1), $1 - XS_T$, is the proportion of total ultimate losses that are below the dollar threshold $T$. The denominator is the proportion of total ultimate losses below the threshold $T$ reported at 19 years of maturity. To see this, note that $1/ULDF$ is the proportion of total unlimited losses reported at 19 years, and $XS_T/ELDF_T$ is the proportion of total losses that are excess losses reported at 19 years. The difference is the proportion of total losses less than the threshold reported at 19 years. The ratio of the numerator to the denominator is therefore the capped loss development factor. The countrywide adjustment factor $F_T$ is

$$F_T = \frac{CLDF_T - 1}{ULDF - 1},$$

where $CLDF_T$ and $ULDF$ are as described above. The state capped tail factor is derived as follows:

$$SCLDF_T = 1 + F_T\,(SULDF - 1)$$

where

$SCLDF_T$ = State-specific capped “paid + case” tail factor, 19th-to-ultimate, for threshold $T$,

$SULDF$ = State-specific uncapped “paid + case” tail factor, 19th-to-ultimate.

SULDF is the state uncapped incurred (including IBNR) tail factor times the ratio of uncapped incurred (including IBNR) at 19th report to uncapped “paid + case” at 19th report. This is computed separately for medical and indemnity losses.

In practice, the factor $F_T$ is applied to the uncapped medical and indemnity “paid + case” tail factors separately, to produce separate capped “paid + case” medical and indemnity tail factors.

An additional step is necessary to convert to a state-specific paid tail factor on a capped basis. The state-specific capped “paid + case” tail factor, $SCLDF_T$, is divided by the ratio of capped “paid” losses to capped “paid + case” losses at 19th report, separately for medical and indemnity losses. The de-trended dollar thresholds are used in the calculations of the “paid” to “paid + case” ratio for each state.

Unlimited “paid + case” tail factors, SULDF, will not be adjusted (i.e., reduced) if the unlimited “paid + case” tail factor is less than or equal to 1.000.
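The chain of tail adjustments can be sketched as below. The function names and all numeric inputs are hypothetical. In practice, $F_T$ comes from countrywide factors for medical and indemnity combined and is then applied separately to the state's medical and indemnity tails.

```python
def countrywide_capped_tail(uldf: float, xs_t: float, eldf_t: float) -> float:
    """Equation (2.1): CLDF_T = (1 - XS_T) / (1/ULDF - XS_T/ELDF_T)."""
    return (1.0 - xs_t) / (1.0 / uldf - xs_t / eldf_t)


def adjustment_factor(cldf_t: float, uldf: float) -> float:
    """F_T = (CLDF_T - 1) / (ULDF - 1)."""
    return (cldf_t - 1.0) / (uldf - 1.0)


def state_capped_tail(suldf: float, f_t: float) -> float:
    """SCLDF_T = 1 + F_T (SULDF - 1); tails at or below 1.000 are not adjusted."""
    if suldf <= 1.0:
        return suldf
    return 1.0 + f_t * (suldf - 1.0)


def state_capped_paid_tail(scldf_t: float, capped_paid: float, capped_paid_case: float) -> float:
    """Divide the capped "paid + case" tail by the capped paid-to-"paid + case" ratio at 19th report."""
    return scldf_t / (capped_paid / capped_paid_case)


cldf = countrywide_capped_tail(uldf=1.10, xs_t=0.10, eldf_t=1.50)  # about 1.0683
f_t = adjustment_factor(cldf, 1.10)                                 # about 0.6835
scldf = state_capped_tail(1.20, f_t)                                # about 1.1367
paid_tail = state_capped_paid_tail(scldf, capped_paid=80.0, capped_paid_case=100.0)  # about 1.4209
```

A quick consistency check on (2.1): if excess losses developed exactly like total losses ($ELDF_T = ULDF$), the capped tail would equal the uncapped tail and $F_T$ would be 1.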

NCCI used Reinsurance Association of America (RAA) data (RAA 2003) to calculate countrywide excess loss development factors (ELDFs). Data is submitted to RAA by reinsurers on an accident year de-trended basis. The RAA excess loss development factors are available only for combined “paid + case” losses (not “paid” losses) for five attachment point ranges (in thousands of dollars: $1–150, $151–350, $351–1500, $1501–4000, $4001 and greater) through an 18th report. NCCI fit curves through average period-to-period development factors for the lowest four ranges to extrapolate 19th-to-ultimate tail factors for each of the ranges. Reported development for the highest range was deemed too volatile to provide a reliable base for extrapolation. A curve was fit through these four tail factors to extrapolate tail factors for higher attachment points. RAA produces excess loss development data every two years, which will allow NCCI to update the underlying factors periodically.
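The text does not say which curve form NCCI fit. As one common choice, the sketch below assumes that the development portion of each age-to-age factor, $f_k - 1$, decays exponentially with age. It fits $\ln(f_k - 1) = a + bk$ by ordinary least squares and multiplies the fitted factors from age 19 onward (truncated at age 100) to approximate a 19th-to-ultimate tail. The function names and the synthetic data are hypothetical.

```python
import math


def fit_exponential_decay(ages: list[float], factors: list[float]) -> tuple[float, float]:
    """Least-squares fit of ln(f - 1) = a + b * age; every factor must exceed 1."""
    ys = [math.log(f - 1.0) for f in factors]
    n = len(ages)
    mean_x = sum(ages) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in ages)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(ages, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def extrapolated_tail(a: float, b: float, from_age: int, max_age: int = 100) -> float:
    """Product of fitted age-to-age factors from from_age up to max_age (exclusive)."""
    tail = 1.0
    for age in range(from_age, max_age):
        tail *= 1.0 + math.exp(a + b * age)
    return tail


# Synthetic factors through an 18th report that follow the assumed curve exactly.
ages = list(range(1, 18))
factors = [1.0 + 0.5 * math.exp(-0.3 * k) for k in ages]
a, b = fit_exponential_decay(ages, factors)
tail_19_to_ult = extrapolated_tail(a, b, from_age=19)
```

With real data the fitted slope `b` must be negative for the product to settle; the truncation at age 100 is a practical cutoff, not part of the source method.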

2.6. Application of the excess ratios

Excess losses are defined as the sum of the excess portion of claims above a given threshold. NCCI produces excess ratios with each loss cost or rate filing. NCCI redefined its excess ratios in 2004 to exclude the cost of events $50M or greater, the new large events threshold. For more detail, see Corro and Engl (2006).

The excess ratio, $XS_T$, for a given threshold $T$, $T < \$50\text{M}$, is defined as

$$XS_T = \frac{\text{Expected excess losses between threshold } T \text{ and } \$50\text{M}}{\text{Expected total losses below } \$50\text{M}}$$

Both the excess losses and the total losses are evaluated at ultimate. The excess ratio applied in the large loss procedure is on a per-claim basis and varies by state as well as by threshold. It differs from an excess loss factor, which is on a per-occurrence basis and may also include a provision for expenses.

In a given loss cost filing, the same excess ratio is applied to each year in the experience period. This is due to the fact that the dollar thresholds applicable to historical years are de-trended. By de-trending the threshold in the loss development and trend calculations, the proportion of losses above the threshold is preserved. Consider the following simple example. If a state’s threshold is $5.0M in 2005, and that corresponds to a 2.0% excess ratio, then a $4.8M threshold in 2004 would also correspond to a 2.0% excess ratio, assuming that the 1.042 (1.042 = $5.0M/$4.8M) change in threshold values is solely due to inflation and correctly measures the actual rate of claim inflation in the state.
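The invariance argument can be checked numerically. The sketch below computes an empirical stand-in for $XS_T$ from a hypothetical sample of ultimate claim sizes, reading "losses below $50M" as each claim limited to $50M. NCCI's actual excess ratios come from size-of-loss distributions, not a raw sample. Shrinking every claim and the threshold by the same 1.042 factor leaves the ratio unchanged, provided no claim approaches the $50M bound, which is not de-trended here.

```python
CAT_LIMIT = 50_000_000  # events above this are excluded from ratemaking


def excess_ratio(claim_sizes: list[float], threshold: float, upper: float = CAT_LIMIT) -> float:
    """Empirical XS_T: losses in the layer from T to $50M over losses below $50M.

    claim_sizes are ultimate per-claim values, indemnity and medical combined.
    """
    layer = sum(min(x, upper) - min(x, threshold) for x in claim_sizes)
    below_upper = sum(min(x, upper) for x in claim_sizes)
    return layer / below_upper


claims_2005 = [0.2e6, 1.0e6, 3.0e6, 7.5e6, 12.0e6]  # hypothetical ultimate claims
claims_2004 = [x / 1.042 for x in claims_2005]       # same claims, one year less inflation
xs_2005 = excess_ratio(claims_2005, 5.0e6)
xs_2004 = excess_ratio(claims_2004, 5.0e6 / 1.042)   # de-trended threshold, about $4.8M
print(round(xs_2005, 4), round(xs_2004, 4))          # both 0.4008
```

The toy sample is deliberately heavy in large claims, so its ratio is far above the 2.0% in the text's example; only the equality of the two ratios matters here.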

The adjusted, per claim excess ratio is applied as a factor, 1/(1 – XS), to limited ultimate losses that have been on-leveled and trended to the midpoint of the proposed filing effective period. Similarly, the excess ratio applied has also been trended to the midpoint of the proposed filing effective period. Each policy period in the experience period has the same 1/(1 – XS) factor applied to both indemnity and medical losses, since the size-of-loss distributions are on a combined indemnity and medical basis. The excess ratios for aggregate ratemaking are consistent with the values contained in the state’s latest approved filing.

2.7. Loss ratio trend

Indicated exponential loss ratio and loss severity trends, as well as trends based upon statistical modeling, are based on ultimate limited losses, where the limit is determined using the same thresholds by year as those used for loss development. This is consistent with the approach of the ratemaking analysis (which is done on a limited basis), and is consistent with the fact that the excess ratio used in the filing implicitly contains inflationary trend over time.

2.8. Defense, cost containment and adjusting and other expenses (formerly loss adjustment expenses)

No changes to the calculation of Loss Adjustment Expense (LAE) factors were made as a result of using the large loss procedure. This is a potential area of future study.

2.9. Summary of filing results for the large loss methodology

NCCI filed the large loss procedure for the first time in loss cost filings with effective dates from October 1, 2004 through July 1, 2005. The new procedure was filed in 33 states and it coincided with NCCI’s revised excess loss factor procedure (Corro and Engl 2006). Most state regulatory officials were satisfied with the implementation of NCCI’s new methodology and its long-term advantages, and NCCI staff tracked results for each state on both an “unlimited” basis (i.e., the prior methodology) and using the newly filed large loss procedure.

NCCI has measured the impact of the change by taking, in each state, the ratio of the indicated loss cost/rate level change under the large loss methodology to that under the prior methodology (i.e., new/prior). In the first year, this ratio ranged across states over [.973, 1.028], and the range has consistently hovered around [.96, 1.04] in each subsequent filing season. Thus, the effect of the methodology change was relatively modest and generally symmetric around 1.00. These results exclude the additional premium collected by carriers via the two catastrophic loss cost provisions, described later.

In summary, as of June 2010, the large loss methodology had been adopted in 31 of the 33 states where it was filed. Colorado and Virginia have not adopted the change in methodology, and it has not been filed in West Virginia, Nevada, Illinois, or Florida.

3. The use of catastrophe modeling in workers compensation

Another goal of this paper is to discuss how modeling was used to derive loss cost provisions for catastrophic events due to terrorism, earthquake, and industrial accidents. In 2002, NCCI began working with EQECAT, a division of ABS Consulting. EQECAT is a modeling firm that performs modeling for the California Earthquake Authority, a large earthquake pool, as well as modeling used extensively in windstorm filings. Serving the global property and casualty industry, EQECAT is known as a technical leader and innovator in the development of analysis tools and methodologies to quantify insured exposure to natural and man-made catastrophic risk. EQECAT developed three models for NCCI. These models address the potential exposure to workers compensation for terrorism, earthquake, and catastrophic industrial accidents. The models are described in detail in the following sections.

In late 2002, NCCI filed Item B-1383, which was a national item filing proposing new loss cost/rate provisions by state for events that result from acts of foreign terrorism. This filing was designed to align with conditions of the Terrorism Risk Insurance Act (TRIA) passed by Congress in 2002.

In 2004, NCCI filed Item B-1393, which was a national item filing proposing new loss cost/rate provisions by state for events that result from the following perils: acts of domestic terrorism, earthquake (and tsunami, in certain states), and catastrophic industrial accidents.

On December 26, 2007, the Terrorism Risk Insurance Program Reauthorization Act (TRIPRA) was signed into law. In May 2008, NCCI filed Item B-1407, which regrouped the perils and loss cost provisions NCCI identified in Item B-1393 into “Certified Acts of Terrorism” and “Catastrophes (other than Certified Acts of Terrorism).”

Almost all states approved the voluntary loss cost and assigned risk rate provisions that NCCI filed, and many workers compensation insurers now apply these values to payroll in hundreds of dollars to determine the resulting premium. This premium is added after standard premium is determined and is not subject to any other modifications, including, but not limited to, premium discounts, experience rating, retrospective rating, and schedule rating. It is added onto the estimated annual premium initially charged to an employer, which is subject to a final audit when payroll is finalized at policy expiration.
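The premium calculation described above amounts to a simple addition, sketched below with hypothetical rates and function names. `policy_premium` stands for the policy's premium after all of its own rating steps, none of which apply to the provisions.

```python
def catastrophe_provision_premium(
    payroll: float, terrorism_rate: float, catastrophe_rate: float
) -> float:
    """Premium from the two provisions, each expressed per $100 of payroll."""
    return payroll / 100.0 * (terrorism_rate + catastrophe_rate)


def estimated_annual_premium(
    policy_premium: float, payroll: float, terrorism_rate: float, catastrophe_rate: float
) -> float:
    """Add the provision premium, unmodified, to the already-modified policy premium."""
    return policy_premium + catastrophe_provision_premium(payroll, terrorism_rate, catastrophe_rate)


# Hypothetical: $2M payroll, $0.02 terrorism and $0.01 catastrophe per $100 of payroll.
estimated = estimated_annual_premium(25_000.0, 2_000_000.0, 0.02, 0.01)  # about 25,600
```

At final audit, the provision premium would be recomputed on the audited payroll.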

3.1. Definition of the perils

Terrorism, earthquakes, and catastrophic industrial accidents can result in losses of extraordinary magnitude for workers compensation. While the exposure is real, the absence of a large event in recent history within the data means that the current loss costs and rates do not provide for this type of exposure. NCCI’s new approach is to exclude losses resulting from these major catastrophes once a provision for their exposure is contained in the loss costs and rates. The threshold for each of these exposures is $50 million. The modeling results described below assume that all events exceeding $50 million of loss for workers compensation would be removed from ratemaking on a first-dollar basis.

For purposes of the modeling, the following definitions apply:

Certified Acts of Terrorism.

All acts of terrorism certified by the Secretary of the Treasury pursuant to TRIA (as amended) with aggregate workers compensation losses in excess of $50 million. A certified act of terrorism is defined as an act that

a. is violent or dangerous to human life, property, or infrastructure,

b. results in damage within the United States, and

c. has been committed by an individual or individuals as part of an effort to coerce the civilian population of the United States or to influence the policy or affect the conduct of the U.S. government by coercion.

Earthquake.

The shaking and vibration at the surface of the earth resulting from underground movement along a fault plane or volcanic activity where the aggregate workers compensation losses from the single event are in excess of $50 million.

Catastrophic Industrial Accident.

Any single event other than an act of terrorism or an earthquake resulting in workers compensation losses in excess of $50 million.

For workers compensation, obligations to pay benefits are dictated by state law, and exclusions of these perils are not possible without statutory changes. Because TRIPRA has a unique mechanism for triggering federal reinsurance, separate statistical codes were created to capture premium credits or debits reported to NCCI for the Terrorism provision and the catastrophe provision covering the other perils, commonly referred to as “Catastrophe (Other than Certified Acts of Terrorism).”

3.2. Modeling the three perils: Terrorism, industrial accidents, and earthquake

Separate EQECAT models were used to estimate the risks to workers compensation insurers from each of the three perils.

Events are simulated for specific states using qualitatively defined thresholds. Some events modeled may actually result in no losses. The qualitative thresholds used by peril are:

- Large industrial accidents likely to cause at least two worker fatalities or at least ten worker hospitalizations,
- Terrorist attacks with the potential to cause at least $25M in workers compensation losses, based on the magnitude of the physical event, and
- All possible earthquakes.

All three models consist of the following primary components:

- Definition of the portfolio exposures,
- Definition of the peril hazards,
- Definition of the casualty vulnerability, and
- Calculation of loss due to casualty.

Each of the above components is described separately below.

3.3. Portfolio exposures within the models

The location, number, and types of employees are needed to characterize the risk exposures to all three perils. Business information databases were used to obtain the addresses of businesses and the estimated number of employees at each location. For terrorism events and industrial accidents, the exposures were aggregated to the census block level (typically a city block), a level of aggregation suited to events whose effects span hundreds of meters. The number of workers at each aggregation unit (census block or work site) was adjusted to account approximately for part-time workers, workers absent for various reasons, and self-employed workers. The workers were then grouped into five NCCI industry groupings: Manufacturing, Contracting, Office & Clerical, Goods & Services, and All Others. Certain government classifications not covered by workers compensation were excluded.
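A minimal sketch of this exposure preparation, assuming each location record carries a census block, an industry group, a class code, and an employee count. The record layout, the single `proration` factor standing in for the part-time, absent, and self-employed adjustments, and the class codes in the example are all hypothetical; only the five industry groupings come from the text.

```python
from collections import defaultdict

# The five NCCI industry groupings used to classify workers.
INDUSTRY_GROUPS = {"Manufacturing", "Contracting", "Office & Clerical",
                   "Goods & Services", "All Others"}


def aggregate_exposure(locations, proration=1.0, excluded_classes=frozenset()):
    """Total prorated workers by (census block, industry group).

    locations: iterable of dicts with keys 'census_block', 'industry_group',
        'class_code', and 'employees' (a hypothetical record layout).
    proration: hypothetical factor approximating the adjustment for part-time,
        absent, and self-employed workers.
    excluded_classes: classifications not covered by workers compensation.
    """
    totals = defaultdict(float)
    for loc in locations:
        if loc["class_code"] in excluded_classes:
            continue  # e.g., certain government classifications
        group = loc["industry_group"]
        if group not in INDUSTRY_GROUPS:
            raise ValueError(f"unknown industry group: {group}")
        totals[(loc["census_block"], group)] += loc["employees"] * proration
    return dict(totals)


# Hypothetical business-database records.
sites = [
    {"census_block": "B1", "industry_group": "Manufacturing", "class_code": "M-1", "employees": 200},
    {"census_block": "B1", "industry_group": "Manufacturing", "class_code": "M-2", "employees": 100},
    {"census_block": "B1", "industry_group": "Office & Clerical", "class_code": "GOV", "employees": 50},
    {"census_block": "B2", "industry_group": "Contracting", "class_code": "C-1", "employees": 40},
]
exposure = aggregate_exposure(sites, proration=0.8, excluded_classes={"GOV"})
print(exposure)
```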

In addition to employee information, required exposure data for the earthquake peril include information on the buildings where the employees are located. Building information consists of the structure type and age.

Furthermore, the number of employees used for the earthquake peril was defined for four different work shifts: day; swing shift; night; and weekends and holidays.

Since the number of casualties varies depending on the time of day and day of the week when an earthquake strikes, it is necessary to determine the number of employees for each of the different work shifts. The day shift accounts for most of the workers compensation exposure.

The definition of exposure by work shift was performed only for the earthquake peril. Earthquakes are natural disasters that can occur at any time, so it is important to average the losses over all possible times of occurrence. Conversely, terrorism events and industrial accidents can be considered most likely to occur during the day shift, when more people are present and activity is highest: terrorism events are planned to inflict maximum casualties, and industrial accidents are more prone to occur during peak hours of activity.

3.4. Peril hazards within the models

3.4.1. Peril hazards for terrorism events

EQECAT assembled data on the insurers’ exposure and subjected that exposure to a large number of simulated terrorist events. The simulated events were built from three primary elements:

- Weapon types
- Target selection
- Frequencies of weapon attacks

A brief description of each element follows.

Weapon types.

Specific weapons were selected from the range of known or hypothesized terrorist weapons. The selection process considered weapons that have been employed previously, weapons that could cause large numbers of casualties, and weapons that would be more readily available. Some of the selected modes of attack are listed below.

a. Blast/explosion

b. Chemical

c. Biological

d. Radiological

e. Other

Target selection.

A target is the location of a terrorist attack and, in the model, represents the locus of a casualty footprint. An inventory of targets was created, with targets having characteristics such as:

- Tall buildings—10 stories and higher
- Government buildings with a large number of employees or serving a critical or sensitive function (e.g., FBI office)
- Airports
- Ports
- Military bases—U.S. armed forces
- Prominent locations—capitol buildings, major amusement parks, etc.
- Nuclear power plants
- Railroads, railroad yards and stations—freight lines for railroad cars carrying chemicals
- Large dams near urban areas
- Chemical facilities, particularly those with chlorine and ammonia on-site

Frequency of weapon attack.

The relative likelihood of a type of attack occurring at a target location is represented by an assigned (annual) frequency. Attack frequency is based on the following considerations:

- Availability of weapons
- Attractiveness of target
- Attractiveness of the region relative to other regions based on various theories

For attacks that involve atmospheric releases of chemical, biological, and radiological agents, wind direction affects the assigned frequency.

Nationwide results assume that there is, on average, one terrorist event per year as a default. The results are scalable based on different frequency assumptions. A range of 0.25 to 3 terrorism events per year was used based on expertise from EQECAT.
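The frequency assignment and its scalability can be sketched as follows, assuming each target/weapon scenario carries a relative likelihood score (hypothetical here) that is normalized to a nationwide annual event rate. Because expected annual loss is linear in the scenario frequencies when per-event losses are held fixed, moving the nationwide rate anywhere in the 0.25 to 3 range rescales the result proportionally.

```python
def assign_frequencies(relative_weights, national_rate=1.0):
    """Normalize relative scenario likelihoods to a nationwide annual event rate.

    relative_weights: {(target, weapon): score}; scores stand in for weapon
        availability and target/region attractiveness (hypothetical values).
    national_rate: expected terrorist events per year nationwide.
    """
    total = sum(relative_weights.values())
    return {k: national_rate * w / total for k, w in relative_weights.items()}


def expected_annual_loss(frequencies, loss_per_event):
    """EAL = sum over scenarios of annual frequency x modeled event loss."""
    return sum(freq * loss_per_event[k] for k, freq in frequencies.items())


# Hypothetical scenarios, scores, and modeled workers compensation losses.
weights = {("office_tower", "blast"): 3.0, ("port", "chemical"): 1.0}
losses = {("office_tower", "blast"): 80e6, ("port", "chemical"): 40e6}

for rate in (0.25, 1.0, 3.0):
    eal = expected_annual_loss(assign_frequencies(weights, rate), losses)
    print(f"{rate:>4} events/yr -> EAL ${eal:,.0f}")
```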

3.4.2. Peril hazards for catastrophic industrial accidents

Industrial accidents are characterized by the following elements:

- Facilities where industrial accidents occur
- Accident types
- Frequency of accidents

Facilities.

Facilities vulnerable to large industrial accidents resulting in casualties above a threshold were identified from several public and commercial data sources. The facilities considered as potential sources for large industrial accidents are identified below:

- Refineries
- Chemical plants (oil, gas, petrochemical, etc.)
- Water utilities
- Power utilities
- Other manufacturing plants

Accident types.

The perils considered in the study were broadly classified into three categories: chemical releases, large explosions, and all other accidents. Depending on the peril, the atmospheric conditions, the plant configuration and location, etc., the footprint of an accident could reach beyond the plant boundaries and affect workers in adjacent facilities and beyond.

Frequencies of accidents.

The frequencies of large industrial accidents in each of the modeled states were derived from historical fatality and injury data available from BLS, OSHA, and other sources. Frequencies of extreme events, which are very large and very rare, were based on ABS Consulting expert opinion. The consequences of such events were benchmarked to a Bhopal-type event.

3.4.3. Peril hazards for earthquakes

Regional hazard.

The calculation of annualized losses requires a probabilistic representation of the location, frequency, and anticipated severity of all earthquakes that can be expected to occur in the region. Earthquake source zones are identified from information on the geology, tectonics, and historical seismicity of the region. Each source zone represents a fault or area in which earthquakes are expected to be uniformly distributed with respect to location and size.

For each of the earthquake source zones, an earthquake recurrence relationship is developed. This relationship is developed using an appropriate earthquake catalog, which is a listing of historically recorded earthquakes. The catalog is analyzed for completeness by determining the time period over which all earthquakes of a given magnitude are believed to have been reported.

Faults are modeled by a characteristic earthquake model or a Gutenberg-Richter recurrence relationship, or both, depending upon the available geologic information. The characteristic earthquake model assumes that earthquakes of about the same magnitude occur at quasi-periodic intervals on the fault. The maximum magnitude for each earthquake source zone is estimated from the published literature, from comparisons with similar tectonic regimes, from historical seismicity, and from the dimensions of mapped faults.
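For the Gutenberg-Richter branch, a standard way to express recurrence for one source zone is log10 N(m) = a - b*m, truncated so the rate falls to zero at the zone's maximum magnitude. The sketch below uses that textbook truncated form with hypothetical a, b, minimum, and maximum magnitudes; it is not EQECAT's parameterization, and the characteristic earthquake model is not shown. Differencing the cumulative rate gives an annual rate for each magnitude bin, which is one way a stochastic event set can carry a frequency per simulated event.

```python
import math


def rate_at_least(m, a, b, m_min, m_max):
    """Annual rate of earthquakes with magnitude >= m in one source zone.

    Truncated Gutenberg-Richter: N(m_min) = 10**(a - b*m_min), with the rate
    tapering to zero at m_max.
    """
    if m >= m_max:
        return 0.0
    m = max(m, m_min)
    beta = b * math.log(10.0)
    rate_min = 10.0 ** (a - b * m_min)
    tail = math.exp(-beta * (m_max - m_min))
    return rate_min * (math.exp(-beta * (m - m_min)) - tail) / (1.0 - tail)


def magnitude_bin_rates(a, b, m_min, m_max, width=0.5):
    """Annual rate of events in each magnitude bin [lo, lo + width)."""
    bins, lo = [], m_min
    while lo < m_max - 1e-9:
        hi = min(lo + width, m_max)
        bins.append((lo, hi, rate_at_least(lo, a, b, m_min, m_max)
                     - rate_at_least(hi, a, b, m_min, m_max)))
        lo = hi
    return bins


# Hypothetical zone parameters.
for lo, hi, rate in magnitude_bin_rates(a=4.0, b=1.0, m_min=5.0, m_max=7.5):
    print(f"M {lo:.1f}-{hi:.1f}: {rate:.5f}/yr (return period {1 / rate:,.0f} yr)")
```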

The seismic hazard model simulates approximately 2,000,000 stochastic events across the United States.

Site hazard severity.

Attenuation relationships are used to predict the expected amplitude of ground shaking at a site of interest knowing an earthquake’s magnitude and the distance from the fault to the site. The ground shaking is characterized by one or more ground-shaking parameters.

Soil amplification factors are used to adjust the calculated ground-shaking parameter for the specific soil conditions at the site of interest. These factors are different for each ground-shaking parameter.

The effect of local soil conditions within each individual zip code was taken into account. In general, soft soil sites will experience higher earthquake motions than firm soil or rock sites for comparable locations relative to the earthquake fault rupture zone, thereby increasing the likelihood of damage to buildings on soft soil for a given earthquake.
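To make the two steps concrete, the sketch below uses a generic attenuation form, ln Y = c0 + c1*M - c2*ln(R + c3), for median peak ground acceleration on rock, and then applies a soil amplification factor for the site. The functional form is a simplified textbook shape, and every coefficient and amplification factor is hypothetical; published relationships have more terms, include lognormal scatter, and use factors that vary by ground-shaking parameter and shaking level.

```python
import math

# Hypothetical coefficients for ln(PGA) = C0 + C1*M - C2*ln(R + C3).
C0, C1, C2, C3 = -3.5, 0.9, 1.2, 10.0

# Hypothetical PGA amplification factors by site soil class.
SOIL_AMPLIFICATION = {"rock": 1.0, "firm_soil": 1.3, "soft_soil": 1.8}


def median_pga_rock(magnitude: float, distance_km: float) -> float:
    """Median peak ground acceleration (g) at a rock site."""
    return math.exp(C0 + C1 * magnitude - C2 * math.log(distance_km + C3))


def site_pga(magnitude: float, distance_km: float, soil_class: str) -> float:
    """Rock-site estimate adjusted for the soil conditions at the site."""
    return median_pga_rock(magnitude, distance_km) * SOIL_AMPLIFICATION[soil_class]


# Same hypothetical M7 event at 20 km, different soils.
for soil in SOIL_AMPLIFICATION:
    print(soil, round(site_pga(7.0, 20.0, soil), 3))
```

The ordering of the output mirrors the text: for the same event and distance, soft soil sites see the strongest shaking.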

3.5. Casualty vulnerability

Casualty vulnerability relates casualty levels to the magnitude of a peril event. The casualty vulnerability for terrorism events and industrial accidents is established in similar ways, while that for earthquakes is established differently.

3.5.1. Casualty vulnerability for terrorism events

The casualty footprint of a weapon is a measure of the physical distribution of the intensity of the agent as it spreads out from its initial target. The effects of each type of weapon will vary with the size of the weapon, with atmospheric conditions, and in some cases with local terrain. In a large-scale nationwide analysis with millions of simulated events, where local atmospheric conditions and terrain are only generally known, a simpler, more generalized simulation is necessary. The simplifications necessary to efficiently model footprints of weapons effects are described below.

For conventional blast loading, blast simulation software is used to estimate casualties in various urban settings where the geometry and height of the buildings are varied. The results of these detailed simulations are used to develop simplified blast attenuation functions that vary with distance and with the general terrain, so that the footprint is defined as a decreasing function of distance from the source of the blast.

The casualties for a nuclear blast can be estimated on the basis of empirical data resulting from wartime and nuclear test experience. Casualties are assumed to be a function of distance from ground zero with the source located either at ground level or at a relatively low altitude. A simplified, conservative casualty footprint was created to encompass the range of conditions that could exist. Long-term radiation effects were not considered.

For chemical, biological, and radiological agent releases, a plume is formed that is influenced by atmospheric conditions and by the terrain. The footprint of the cumulative dose deposited by a plume over time was calculated using the simulation software MIDAS-AT (Meteorological Information and Dispersion Assessment System—Anti-Terrorism). The plume footprint was calculated for low, medium, and high wind speeds and for three different atmospheric turbulence conditions. Any of the footprints could then be oriented in each of eight compass directions. Most of the footprints were truncated after an elapsed time of about two hours to account for successful evacuation.
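One way to see how wind direction can enter the assigned frequency: each target's annual attack frequency is split across the 3 wind speeds x 3 turbulence conditions x 8 compass orientations = 72 plume footprints. The sketch assumes, hypothetically, that the three meteorological factors are independent and uses made-up local probabilities (including a wind rose); the source does not describe the model's actual weighting.

```python
from itertools import product

# Hypothetical local meteorology; each table sums to 1.
WIND_SPEED = {"low": 0.3, "medium": 0.5, "high": 0.2}
TURBULENCE = {"unstable": 0.25, "neutral": 0.50, "stable": 0.25}
WIND_ROSE = {"N": 0.10, "NE": 0.05, "E": 0.10, "SE": 0.15,
             "S": 0.20, "SW": 0.20, "W": 0.10, "NW": 0.10}


def footprint_frequencies(attack_frequency: float) -> dict:
    """Annual frequency of each (speed, turbulence, direction) plume variant,
    assuming the three meteorological factors are independent."""
    return {
        (speed, turb, direction): attack_frequency * p_s * p_t * p_d
        for (speed, p_s), (turb, p_t), (direction, p_d) in product(
            WIND_SPEED.items(), TURBULENCE.items(), WIND_ROSE.items())
    }


variants = footprint_frequencies(attack_frequency=0.002)  # hypothetical target
print(len(variants), round(sum(variants.values()), 6))
```

Each variant's frequency would then multiply the casualties computed for that oriented footprint, so a target downwind of a dense employment area more often contributes more expected loss.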

The analysis methodology is to apply a casualty footprint to an assigned target and to calculate the extent of casualties to the covered workers within the footprint. For chemical, biological, and radiological footprints, the dose to each employee is calculated, and a conversion is made to the degree of casualty (outpatient treatment, minor/temporary disability, major/permanent disability, and death). Degree of casualty is then converted to loss based upon the average costs by injury type provided by NCCI. The average costs provided vary by state.
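The footprint-to-loss chain can be sketched for a conventional blast: an intensity that decreases with distance from the target is applied to covered workers, converted into expected casualties by degree, and priced with state average costs by injury type. The exponential decay, its 150-meter scale, the casualty rates at full intensity, and the average costs are all hypothetical placeholders, not EQECAT's attenuation functions or NCCI's figures.

```python
import math

# Hypothetical average cost per casualty for one state, by degree of casualty.
AVERAGE_COST = {"death": 450_000.0, "major_permanent": 600_000.0,
                "minor_temporary": 40_000.0, "outpatient": 3_000.0}

# Hypothetical share of exposed workers in each degree at full intensity (sums to 0.80).
DEGREE_RATES = {"death": 0.10, "major_permanent": 0.15,
                "minor_temporary": 0.25, "outpatient": 0.30}


def blast_intensity(distance_m: float, scale_m: float = 150.0) -> float:
    """Simplified footprint: intensity in (0, 1], decreasing with distance."""
    return math.exp(-distance_m / scale_m)


def event_loss(worker_rings):
    """worker_rings: (distance from target in meters, covered workers) pairs.

    Returns expected casualties by degree and the resulting loss.
    """
    casualties = dict.fromkeys(DEGREE_RATES, 0.0)
    for distance_m, workers in worker_rings:
        intensity = blast_intensity(distance_m)
        for degree, rate in DEGREE_RATES.items():
            casualties[degree] += workers * rate * intensity
    loss = sum(count * AVERAGE_COST[degree] for degree, count in casualties.items())
    return casualties, loss


# Hypothetical census-block rings around one target.
casualties, loss = event_loss([(0.0, 100), (150.0, 400), (400.0, 1_000)])
print({d: round(c, 1) for d, c in casualties.items()}, f"${loss:,.0f}")
```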

3.5.2. Casualty vulnerability for industrial accidents

Three accident types were considered in the Industrial Accidents study: chemical releases, large explosions, and all other accidents. The latter category includes a variety of accidents that are localized in nature and affect workers within a small perimeter, the size of a building. These smaller-scale accidents were simulated as small blasts.

The methodology used to model chemical releases and blasts is the same as in the terrorism model described earlier.

3.5.3. Casualty vulnerability for earthquakes

Workers’ casualties due to earthquakes are directly correlated with the damage incurred by the buildings in which they work. Therefore, casualties due to earthquakes are estimated in two sequential stages: estimation of building damage, and estimation of worker casualties based on that damage.

Building damage at the workplace.

Individual building vulnerability functions (that is, the probability of building damage given a level of ground shaking at the site) depend on the structure type, the age of construction, and the building height. Vulnerability functions account for variability by assigning a probability distribution bounded by 0% and 100% with a prescribed mean value and standard deviation. The vulnerability functions were based on historical damage data and insurance claims data, including the analysis of over 50,000 claims from the Northridge and other earthquakes.

The probability distributions of ground shaking at the site and vulnerability functions are combined to estimate the probability of building damage for each earthquake event. The probability of damage at the site level is also combined probabilistically, accounting for correlation in ground shaking between zip codes and in damage level between the same and different structure types within and between zip codes.
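The source states only that the damage distribution is bounded by 0% and 100% with a prescribed mean and standard deviation; a beta distribution is a common choice with those properties and is assumed here. The sketch fits beta parameters by the method of moments, then combines a site's ground-shaking distribution with the vulnerability function, both analytically (expected damage) and by sampling. The vulnerability table and shaking probabilities are hypothetical, and the correlation between zip codes and structure types described above is not modeled.

```python
import random

# Hypothetical vulnerability for one structure type, age, and height:
# ground-shaking level (PGA, g) -> (mean damage ratio, standard deviation).
VULNERABILITY = {0.1: (0.02, 0.02), 0.3: (0.10, 0.08), 0.5: (0.25, 0.15)}


def beta_params(mean: float, sd: float):
    """Method-of-moments (alpha, beta) for a damage ratio bounded by 0 and 1."""
    var = sd ** 2
    if not 0.0 < mean < 1.0 or var >= mean * (1.0 - mean):
        raise ValueError("need 0 < mean < 1 and sd**2 < mean * (1 - mean)")
    common = mean * (1.0 - mean) / var - 1.0
    return mean * common, (1.0 - mean) * common


def expected_damage(shaking_probs: dict) -> float:
    """E[damage] = sum over shaking levels of P(level) x mean damage at that level."""
    return sum(p * VULNERABILITY[level][0] for level, p in shaking_probs.items())


def sample_damage(shaking_probs: dict, rng: random.Random) -> float:
    """Draw a shaking level, then a beta-distributed damage ratio for it."""
    levels = list(shaking_probs)
    level = rng.choices(levels, weights=[shaking_probs[lv] for lv in levels])[0]
    return rng.betavariate(*beta_params(*VULNERABILITY[level]))


# Hypothetical ground-shaking distribution at one site for one event.
site = {0.1: 0.5, 0.3: 0.3, 0.5: 0.2}
rng = random.Random(42)
draws = [sample_damage(site, rng) for _ in range(20_000)]
print(round(expected_damage(site), 4), round(sum(draws) / len(draws), 4))
```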

Note that considerable randomness exists in earthquake damage patterns where randomness denotes the irreducible variability associated with the earthquake event. Randomness is characterized by the following parameters:

- Ground shaking
- Damage to the average structure of a given class at a given level of ground shaking
- Each structure’s seismic vulnerability relative to the average structure of its class

Modeling uncertainty, the lack of knowledge in characterizing each element of the model, is statistically combined with randomness and correlation to estimate overall variability in damage and loss to the entire portfolio.

Casualties due to building damage.

Workers’ casualty data resulting from earthquakes are very scarce in the United States. EQECAT continually uses data from the most recent earthquakes worldwide to update its casualty functions, which relate building damage to casualties. Because of differences in building design codes and construction practices, data from earthquakes outside the United States are adapted to local U.S. conditions. This adaptation takes into consideration building damage, the state, and its resulting casualties. To illustrate this concept, assume a reinforced concrete building in Country X sustains 50% damage and 15% of its workers are injured. EQECAT assumes that a similar building in California suffering 50% damage will also have 15% casualties. However, because of better building design and construction practices in California compared to Country X, the 50% damage and 15% casualties would occur in California only under twice the earthquake acceleration experienced in Country X. The casualty rate functions were developed using earthquake casualty data from Japan, Turkey, and Taiwan, and are defined for four injury types: death, severe/major, minor/light, and medical only.
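The adaptation in this illustration can be sketched by keeping the damage-to-casualty relationship derived from foreign data and shifting only the shaking-to-damage relationship. The linear Country X damage curve, the casualty points other than the (50% damage, 15% casualties) pair from the illustration, and the function names are hypothetical; the factor of 2 on acceleration is taken from the illustration, not from EQECAT's calibration.

```python
from bisect import bisect_right

# Hypothetical (damage ratio, casualty rate) points derived from foreign data;
# the (0.50, 0.15) point mirrors the illustration in the text.
CASUALTY_POINTS = [(0.0, 0.0), (0.2, 0.01), (0.5, 0.15), (1.0, 0.60)]


def casualty_rate(damage: float) -> float:
    """Piecewise-linear interpolation of casualty rate on building damage."""
    xs = [x for x, _ in CASUALTY_POINTS]
    if damage <= xs[0]:
        return CASUALTY_POINTS[0][1]
    if damage >= xs[-1]:
        return CASUALTY_POINTS[-1][1]
    i = bisect_right(xs, damage)
    (x0, y0), (x1, y1) = CASUALTY_POINTS[i - 1], CASUALTY_POINTS[i]
    return y0 + (y1 - y0) * (damage - x0) / (x1 - x0)


def damage_country_x(accel_g: float) -> float:
    """Hypothetical Country X damage curve: 50% damage at 0.3 g."""
    return min(1.0, accel_g / 0.6)


def damage_california(accel_g: float, design_factor: float = 2.0) -> float:
    """Same damage as Country X, but only at design_factor times the acceleration."""
    return damage_country_x(accel_g / design_factor)


print(casualty_rate(damage_country_x(0.3)), casualty_rate(damage_california(0.6)))
```

The casualty function is shared; only the acceleration needed to reach a given damage level differs between the two regions.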

3.6. Calculation of loss due to casualties

Average costs by injury type were provided by NCCI and used in calculating losses due to workers’ casualties. The same average costs were applied to all three perils.

Earthquake exposures were defined for different work shifts. The number of casualties by work shift for each work site and earthquake event is estimated prior to the application of the average costs.
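For earthquakes, the casualties estimated for each work shift can be priced with the average costs and then averaged across shifts, since an earthquake can strike at any time. The sketch weights each shift by its share of a 168-hour week (40 hours each for day, swing, and night, and 48 for weekends, ignoring holidays); those weights, the casualty counts, and the average costs are hypothetical assumptions.

```python
# Hypothetical shift weights: hours per 168-hour week (holidays ignored).
SHIFT_HOURS = {"day": 40, "swing": 40, "night": 40, "weekend_holiday": 48}

# Hypothetical state average costs for the four earthquake injury types.
AVERAGE_COST = {"death": 400_000.0, "severe_major": 500_000.0,
                "minor_light": 30_000.0, "medical_only": 2_000.0}


def site_event_loss(casualties_by_shift: dict) -> float:
    """Expected loss for one site and event, averaged over work shifts.

    casualties_by_shift: {shift: {injury_type: expected casualties}}.
    """
    total_hours = sum(SHIFT_HOURS.values())
    loss = 0.0
    for shift, by_injury in casualties_by_shift.items():
        shift_loss = sum(n * AVERAGE_COST[inj] for inj, n in by_injury.items())
        loss += SHIFT_HOURS[shift] / total_hours * shift_loss
    return loss


# Hypothetical casualties at one work site for one simulated earthquake.
site_casualties = {
    "day": {"death": 1.0, "severe_major": 2.0, "medical_only": 10.0},
    "night": {"minor_light": 1.0, "medical_only": 2.0},
    "weekend_holiday": {"medical_only": 1.0},
}
print(f"${site_event_loss(site_casualties):,.0f}")
```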

3.7. Deriving loss costs from the modeling

Expected annual losses (EAL) were calculated for every state and peril analyzed. These losses were obtained from the casualty counts generated by the simulated events and the state-specific benefit payments by injury type provided by NCCI. The losses include those of self-insured employers.

Using the loss exceedance distribution underlying the EAL estimates, NCCI actuaries remove from the distribution events that do not exceed the selected dollar threshold of $50 million. A detailed hypothetical illustration of this process is provided in the next section.

The modified EAL was divided by the number of full-time-equivalent (FTE) employees and by the average annual wage per employee based on the Current Population Survey (CPS), and multiplied by 100, to derive a pure loss cost per $100 of payroll. Note that the number of employees also includes employees of self-insured employers.

This was computed by peril and summed to determine two catastrophic loss cost/rate provisions: one for “Certified Acts of Terrorism” and one for “Catastrophes (other than Certified Acts of Terrorism).” (Note: the “Certified Acts of Terrorism” provision was computed using a final adjustment to remove the portion of losses from events that exceeded the federal backstop provided under TRIPRA.)

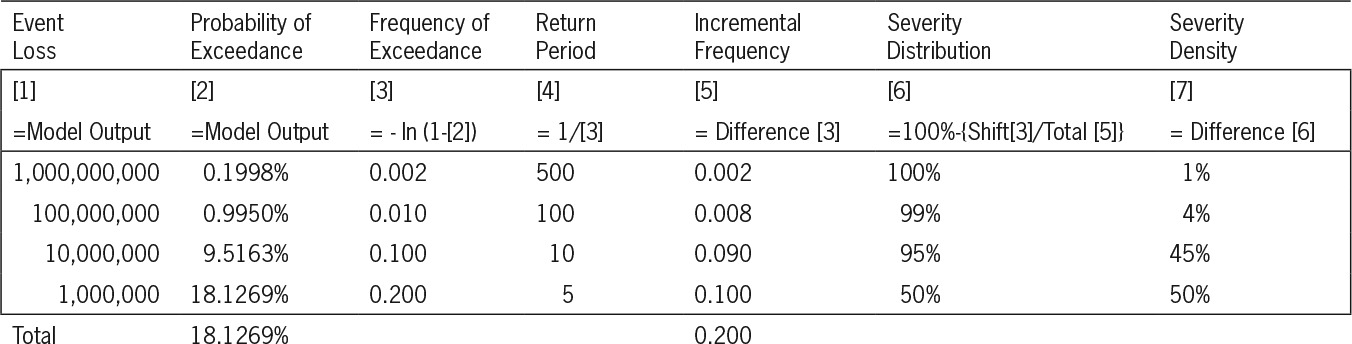

3.8. Loss exceedance curves and the catastrophic event threshold

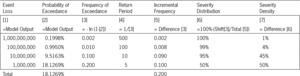

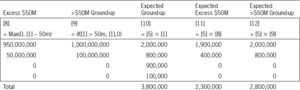

Loss exceedance curves are a standard output format from catastrophe models. Illustration 3 shows a hypothetical example of output from a catastrophe model. For illustration purposes, only four points on the loss exceedance curve are shown. Typically, loss exceedance curves will consist of several hundred points. The curve is represented by loss amounts sorted in descending order along with associated probabilities. The probability of exceedance of a given loss amount is the probability that at least one event causing at least that loss amount will occur in a single year. The loss exceedance curve is assumed to result from an underlying collective risk model with a Poisson frequency distribution. Based on this assumption, frequencies (exceedance and incremental), return periods, and the severity density can be derived easily.

Illustration 3. Hypothetical example of loss exceedance curve components

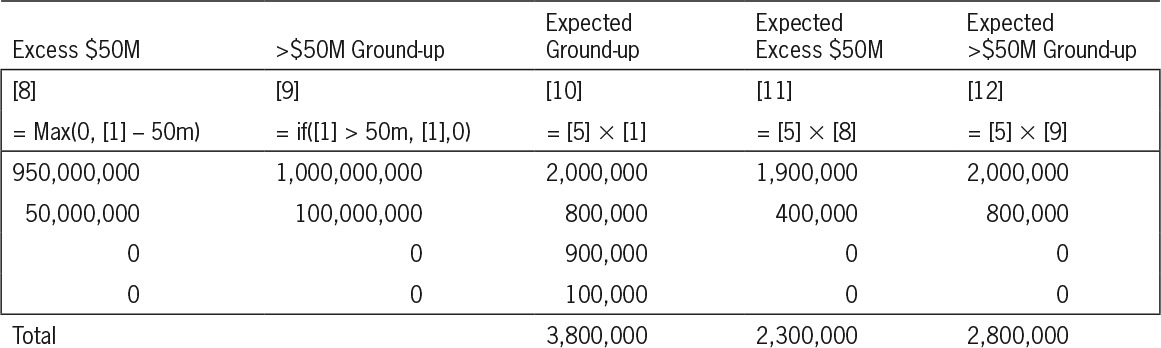
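Under the Poisson assumption, an annual exceedance probability p(x) corresponds to an exceedance frequency lambda(x) = -ln(1 - p(x)); differencing successive frequencies gives the incremental frequency at each loss level, and dividing by the total frequency gives the severity density. The sketch below uses four hypothetical points (not the values in Illustration 3) and takes the return period as 1/lambda(x), one common convention.

```python
import math


def curve_components(points):
    """points: (loss, annual exceedance probability), losses in descending order."""
    rows, prev_freq = [], 0.0
    for loss, prob in points:
        freq = -math.log(1.0 - prob)          # Poisson: p = 1 - exp(-freq)
        rows.append({"loss": loss, "exceed_prob": prob, "exceed_freq": freq,
                     "return_period": 1.0 / freq,
                     "incr_freq": freq - prev_freq})  # events at this loss level
        prev_freq = freq
    for row in rows:                          # prev_freq is now the total frequency
        row["severity_density"] = row["incr_freq"] / prev_freq
    return rows


def expected_annual_loss(rows):
    """EAL = sum of loss x incremental frequency."""
    return sum(r["loss"] * r["incr_freq"] for r in rows)


# Hypothetical curve: (event loss in dollars, annual probability of exceedance).
curve = [(200e6, 0.002), (100e6, 0.005), (50e6, 0.010), (10e6, 0.050)]
rows = curve_components(curve)
for r in rows:
    print(f"{r['loss']:>13,.0f}  RP {r['return_period']:7.1f}  "
          f"incr {r['incr_freq']:.5f}  dens {r['severity_density']:.3f}")
print(f"EAL ${expected_annual_loss(rows):,.0f}")
```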

For NCCI’s large loss procedure, catastrophic losses from events exceeding $50 million are completely excluded from the experience used for ratemaking. Illustration 4 shows the quantitative exclusion of losses from events exceeding $50 million, on both excess and ground-up bases, from the exceedance curve shown in Illustration 3. Expected values were calculated for the two types of exclusions. Column [12] is used in the derivation of the catastrophe provisions. Although it is not used, column [11] shows the type of calculation that would apply if events greater than $50 million were simply capped, as is done in the large loss procedure for individual claims exceeding the state’s per-claim threshold.
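The two expected values can be sketched from per-event losses and their incremental annual frequencies. Ground-up exclusion counts every dollar of each event above $50 million (the basis used for the provisions), while the capped alternative counts only the portion above $50 million. The event losses and frequencies are hypothetical, not the figures in Illustration 4.

```python
THRESHOLD = 50_000_000  # catastrophic event threshold, in dollars


def excluded_expected_losses(events, threshold=THRESHOLD):
    """events: (event loss, incremental annual frequency) pairs.

    Returns (ground_up, excess):
      ground_up: expected annual loss from events exceeding the threshold,
                 from the first dollar (the basis used for the provisions);
      excess:    only the portion of those events above the threshold, as if
                 events were capped like large individual claims.
    """
    big = [(loss, freq) for loss, freq in events if loss > threshold]
    ground_up = sum(freq * loss for loss, freq in big)
    excess = sum(freq * (loss - threshold) for loss, freq in big)
    return ground_up, excess


# Hypothetical event set: (loss in dollars, incremental annual frequency).
events = [(200e6, 0.002), (100e6, 0.003), (50e6, 0.005), (10e6, 0.040)]
ground_up, excess = excluded_expected_losses(events)
print(f"ground-up: ${ground_up:,.0f}  excess only: ${excess:,.0f}")
```

An event of exactly $50 million does not exceed the threshold, so it stays in the experience and contributes to neither amount.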

Illustration 4. Exclusion of losses exceeding a $50 M event (from Illustration 3)

Illustration 5. NCCI’s formula for the calculation of a catastrophe peril’s loss cost:

$$\text{Catastrophe Pure Loss Cost (per \$100 of limited payroll)} = \frac{100 \times \text{Catastrophe Expected Losses}}{\#\,\text{Workers} \times \text{Limited Average Annual Wage}}$$

For example, if the loss exceedance curve above applied to industrial accidents and was based on a modeling assumption of 1,000,000 workers with an average annual wage of $40,000, the provision for the excluded large event losses would be:

$$\frac{100 \times \$2{,}800{,}000}{1{,}000{,}000 \times \$40{,}000} = 0.007$$

To derive the “Catastrophe (other than Certified Acts of Terrorism)” loss cost provision, a similar provision would be computed for the earthquake peril (and the tsunami peril in certain states) and added to the 0.007 for industrial accidents. The sum would then be multiplied by a factor to account for loss-based expenses (or fully loaded expenses in administered pricing jurisdictions) and rounded to the nearest penny to produce an additive provision for loss costs/rates.
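The chain from expected losses to an additive provision can be sketched as follows. The industrial accident figures reproduce the worked example above; the earthquake loss cost of 0.004, the expense factor of 1.25, and round-half-up rounding to the penny are hypothetical assumptions.

```python
from decimal import Decimal, ROUND_HALF_UP


def pure_loss_cost(expected_losses, workers, avg_annual_wage):
    """Illustration 5: 100 x expected losses / (workers x average annual wage)."""
    return 100.0 * expected_losses / (workers * avg_annual_wage)


def catastrophe_provision(peril_loss_costs, expense_factor):
    """Sum peril pure loss costs, load for expenses, round to the nearest penny."""
    loaded = Decimal(str(sum(peril_loss_costs))) * Decimal(str(expense_factor))
    return loaded.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


industrial = pure_loss_cost(2_800_000, 1_000_000, 40_000)   # 0.007, as in the text
earthquake = 0.004                                           # hypothetical
print(round(industrial, 6), catastrophe_provision([industrial, earthquake], 1.25))
```

Decimal is used for the final rounding step so that the penny rounding is not distorted by binary floating-point representation.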

3.9. Summary of results for the catastrophic loss cost provisions

As of June 2010, the provision for “Certified Acts of Terrorism” was approved in all 37 states in which it was filed by NCCI. The loss cost/rate per $100 of payroll ranges from $0.01 to $0.05 across the 37 states.

For “Catastrophes (Other than Certified Acts of Terrorism),” the provision was approved in 32 of the 37 states in which it was filed by NCCI. It ranges from $0.01 to $0.02 across the 32 states.

3.10. The pros and cons of using catastrophe modeling in workers compensation

Catastrophic events are low-frequency, very high-severity occurrences that cannot be adequately addressed through standard actuarial techniques. The data on such events are limited to a very small number of historical events, and often no event has been observed in a state.

Stochastic simulations, used in conjunction with the actual historical data, provided additional data points for the modeling. Repeated simulations of an event provide a broader perspective on the possible outcomes, and variations of the parameters are also modeled, resulting in a comprehensive stochastic event set.

Modeling is used extensively in the insurance and reinsurance industries. State regulators are scrutinizing the models, developing a fuller understanding of how they operate, and asking increasingly informed questions. Over time, catastrophe modeling has gained wider acceptance among regulatory officials.

As for disadvantages, the hazard, vulnerability, casualty, and loss modules integrated in these complex models each involve several parameters with varying levels of uncertainty. These uncertainties lead to differences between models and raise questions among regulators, who must determine the validity of tools that are increasingly used in ratemaking.

3.11. Possible future enhancements to catastrophe modeling

Catastrophe models rely heavily on underlying databases that contain information on the various parameters used in the analysis. As the refinement and quality of these databases improve, the margin of uncertainty in the final results may narrow.

The workers compensation models would be enhanced if a database containing employment data at each business location and for each work shift were updated regularly. This would improve the estimates of the number of workplace injuries and the resulting modeled loss estimates for events arising from terrorism, earthquake, and catastrophic industrial accidents.

Some other examples of information or databases which might improve the estimation of the workers compensation loss estimates follow, organized by peril.

3.11.1. Earthquake peril

Using a more refined soil database in the earthquake model for workers compensation would be a possible enhancement. It could allow better estimation of the site amplification of the ground motion, which in turn is used to calculate building damage and, hence, the resulting casualties among the building’s occupants.

More accurately defined building structure information would allow for a more finely tuned building vulnerability function. In its absence, assumptions are generally based on information that may be outdated.

The casualty rate functions allow the estimation of the casualties by injury type in different building structures. These functions are developed from limited earthquake casualty data and, as more data is collected from future occurrences, loss estimates could be improved as the estimation of casualties improved.

3.11.2. Catastrophic industrial accidents and terrorism perils

The potential for extreme industrial events needs to be constantly reviewed based on safety regulations and their enforcement, emergency planning, and medical emergency care. These conditions may vary greatly over time and across facilities. This type of information directly impacts the frequency assumption underlying the loss cost. As this information becomes more refined, one should be better able to target the frequency assumption.

Other areas of possible enhancement include obtaining more refined information on the potential target sites. In particular, those sites storing toxic chemicals need to be constantly updated as some plants open or close or change their product lines. The nature and quantities of the toxic chemicals must also be kept current.

For terrorism, the statements above apply with respect to the potential target sites. Also, event frequencies need to be regularly evaluated based on current conditions and the possible threats they may generate. The frequency assumption, as always, is very important to determining the appropriate loss cost levels for all perils.

3.12. Using models outside the actuary’s expertise

The author relied upon the expertise of other NCCI actuaries, whose work product is described in parts of the modeling discussion above. Such information has been documented in accordance with ASOP No. 38.

The NCCI actuaries relied upon simulation models supplied by EQECAT for calculating expected losses due to the earthquake peril. The accuracy of these models depends heavily upon the accuracy of the seismological and engineering assumptions they include.

The NCCI actuaries also relied upon simulation models supplied by EQECAT for calculating expected losses due to terrorism and catastrophic industrial accidents. The models produce estimated losses due to physical, chemical, and biological terrorist acts, as well as losses due to chemical releases and explosions at industrial plants. The models for both perils incorporate input and opinions from experts in related fields and experts at ABS Consulting. The accuracy of these models depends heavily upon the accuracy of the meteorological, engineering, and expert claim frequency assumptions.

4. Conclusions

This paper documents several important changes that have been implemented in the aggregate ratemaking process used to determine indicated workers compensation loss cost and rate changes by state. The changes NCCI implemented support the long-term goals of adequacy and stability of loss costs and rates based on the explicit consideration of how to treat large events consistently from state to state in the ratemaking methodology.

This paper also serves to document for the first time in CAS literature how computer modeling is used in workers compensation.

Acknowledgments

The author acknowledges the work of John Robertson, Ia Hauck, John Deacon, and other NCCI staff members, whose efforts allowed NCCI to complete the aggregate ratemaking research and testing for the implementation of the large loss methodology. We acknowledge the contribution by Jon Evans for the loss exceedance illustrations, and Barry Lipton, whose work with Jon Evans and EQECAT allowed NCCI to produce the loss cost provisions for catastrophic events. We would also like to thank EQECAT for allowing NCCI to disclose an overview of their modeling techniques, much of which is proprietary, and specifically Omar Khemici, Andrew Cowell, and Ken Campbell of EQECAT, whose insights and work in performing the modeling were the basis for the results shown in the paper. Finally, we would like to thank the members of the Catastrophic Loss Subcommittee of NCCI’s Actuarial Committee for their input.

Abbreviations and Notations

- AY—accident year
- BLS—Bureau of Labor Statistics
- CAS—Casualty Actuarial Society
- $\mathrm{CLDF}_T$—Capped “paid+case” tail factor, 19th to ultimate, for threshold T
- CPI—consumer price index
- CPS—Current Population Survey
- CY—calendar year
- DSR—Designated Statistical Reporting level of NCCI
- EAL—expected annual loss
- $\mathrm{ELDF}_T$—Excess “paid+case” tail factor, 19th to ultimate, for threshold T
- EQECAT—modeling company, a division of ABS Consulting Group
- $F_T$—Factor to apply to state-specific ULDF to get state-specific $\mathrm{CLDF}_T$ for threshold T
- FTE—full-time equivalents
- ISO—Insurance Services Office
- LAE—loss adjustment expense
- M—millions of dollars
- MIDAS-AT—Meteorological Information and Dispersion Assessment System-Anti-Terrorism™
- NCCI—National Council on Compensation Insurance, Inc.
- OSHA—Occupational Safety and Health Administration
- PY—policy year
- RAA—Reinsurance Association of America
- $\mathrm{SCLDF}_T$—State-specific capped “paid+case” tail factor, 19th to ultimate, for threshold T
- $\mathrm{SULDF}_T$—State-specific uncapped “paid+case” tail factor, 19th to ultimate, for threshold T
- TRIA—Terrorism Risk Insurance Act of 2002
- TRIPRA—Terrorism Risk Insurance Program Reauthorization Act
- ULDF—Uncapped “paid+case” tail factor, 19th to ultimate
- US—United States
- USGS—United States Geological Survey
- WCSP—NCCI’s Workers Compensation Statistical Plan
- $\mathrm{XS}_T$—Per Claim adjusted excess ratio at threshold T